Finite State Machine Policies Modulating Trajectory Generator

Paper and Code

Sep 26, 2021

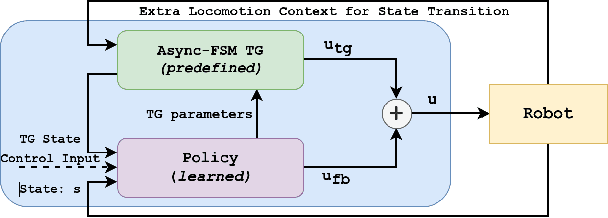

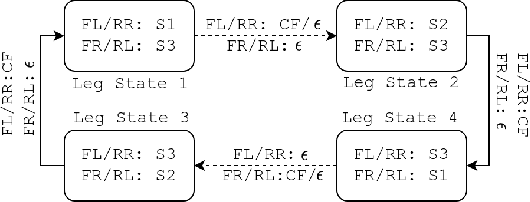

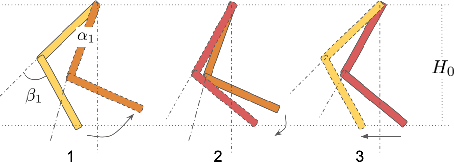

Deep reinforcement learning (deep RL) has emerged as an effective tool for developing controllers for legged robots. However, a simple neural network representation is known to extrapolate poorly, which leaves the learned behavior vulnerable to unseen perturbations and challenging terrains. To address this, researchers have investigated Policies Modulating Trajectory Generators (PMTG), an architecture that combines trajectory generators (TGs) with feedback control signals to achieve more robust behaviors. In this work, we propose to extend the PMTG framework into a finite state machine PMTG by replacing the simple TGs with asynchronous finite state machines (Async FSMs). This design gives the policy an explicit notion of contact events, which it can use to negotiate unexpected perturbations. We demonstrate that the proposed architecture achieves more robust behaviors in various scenarios, such as challenging terrains and external perturbations, on both simulated and real robots. The supplemental video can be found at: http://youtu.be/XUiTSZaM8f0.
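
To make the idea concrete, the following is a minimal sketch of how a per-leg asynchronous state machine could stand in for a time-driven trajectory generator. It is not the authors' implementation: the names `Phase`, `LegFSM`, and `controller_step`, the two-state swing/stance layout, the parabolic swing arc, and all numeric defaults are assumptions made for illustration. The only points taken from the abstract are that each leg runs its own state machine, that contact events can trigger transitions, and that the policy output is combined with the generator output.

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    STANCE = 0
    SWING = 1


@dataclass
class LegFSM:
    """Hypothetical per-leg state machine; each leg switches phase on its own."""
    phase: Phase = Phase.STANCE
    elapsed: float = 0.0  # time spent in the current phase, in seconds

    def step(self, dt, contact, swing_duration, stance_duration):
        """Advance by dt. The durations stand for quantities a policy could modulate."""
        self.elapsed += dt
        if self.phase is Phase.SWING:
            # Swing ends on a touchdown event or, failing that, on a timeout.
            if contact or self.elapsed >= swing_duration:
                self._switch(Phase.STANCE)
        elif self.elapsed >= stance_duration:
            self._switch(Phase.SWING)
        return self.phase

    def _switch(self, phase):
        self.phase = phase
        self.elapsed = 0.0

    def foot_height(self, swing_duration, max_height=0.05):
        """Nominal foot height: parabolic arc during swing, zero during stance."""
        if self.phase is Phase.STANCE:
            return 0.0
        s = min(self.elapsed / swing_duration, 1.0)
        return 4.0 * max_height * s * (1.0 - s)


def controller_step(legs, contacts, durations, residuals, dt):
    """Add the policy's residual to each leg's FSM output (assumed PMTG-style sum)."""
    targets = []
    for leg, contact, (swing_t, stance_t), residual in zip(
        legs, contacts, durations, residuals
    ):
        leg.step(dt, contact, swing_t, stance_t)
        targets.append(leg.foot_height(swing_t) + residual)
    return targets
```

In this sketch, a foot that touches down early leaves the swing state immediately instead of waiting for a clock, which is one way an explicit contact event could help after a perturbation. A practical version would likely ignore contact readings for a short time after liftoff, since the foot is still on the ground when swing begins.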
