Fine-Grained Data Selection for Improved Energy Efficiency of Federated Edge Learning

Paper and Code

Jun 20, 2021

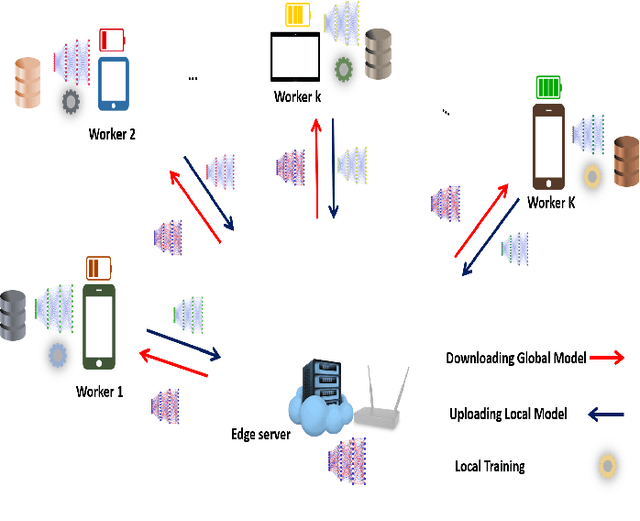

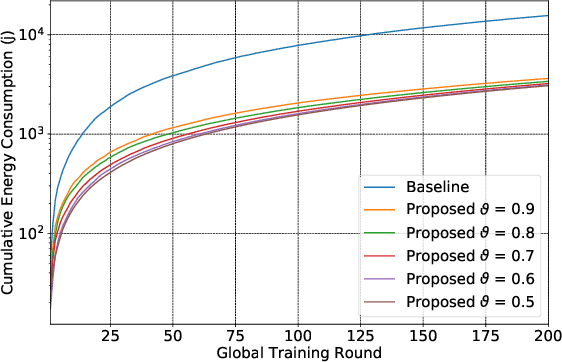

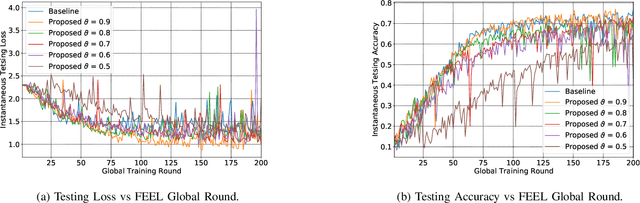

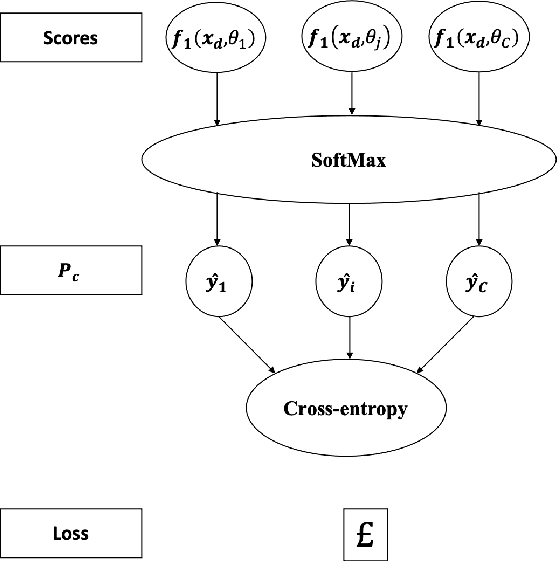

In federated edge learning (FEEL), energy-constrained devices at the network edge consume significant energy when training and uploading their local machine learning models, which shortens their lifetime. This work proposes novel solutions for energy-efficient FEEL that jointly consider local training data, available computation and communication resources, and the deadline constraints of FEEL rounds to reduce energy consumption. We consider a system model in which the edge server is equipped with multiple antennas and employs beamforming to communicate with the local users over orthogonal channels. Specifically, we formulate a problem that seeks the optimal resources for each user, including the fine-grained selection of relevant training samples, bandwidth, transmission power, beamforming weights, and processing speed, with the goal of minimizing the total energy consumption under a deadline constraint on the communication rounds of FEEL. We then devise tractable solutions by first proposing a novel fine-grained training algorithm that excludes less relevant training samples and keeps only the samples that improve the model's performance. After that, we derive closed-form solutions, followed by a Golden-Section-based iterative algorithm, to find the optimal computation and communication resources that minimize energy consumption. Experiments on the MNIST and CIFAR-10 datasets demonstrate that the proposed algorithms considerably outperform state-of-the-art solutions, reducing energy consumption by 79% for MNIST and 73% for CIFAR-10.
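The abstract names a Golden-Section-based iterative algorithm but does not give its details. As a rough illustration only, the sketch below shows a generic golden-section search over a single scalar variable. The objective `toy_round_energy`, its parameters (`deadline`, `cycles`, `kappa`, `bits`, `bandwidth`, `gain_over_noise`), and their values are hypothetical and chosen for illustration; they are not the paper's formulation, which also covers bandwidth, beamforming weights, and sample selection.

```python
import math

INV_PHI = (math.sqrt(5) - 1) / 2  # 1/phi, roughly 0.618


def golden_section_minimize(f, lo, hi, tol=1e-8, max_iter=200):
    """Return an approximate minimizer of a unimodal function f on [lo, hi]."""
    a, b = lo, hi
    c = b - INV_PHI * (b - a)  # left interior point
    d = a + INV_PHI * (b - a)  # right interior point
    fc, fd = f(c), f(d)
    for _ in range(max_iter):
        if b - a <= tol:
            break
        if fc < fd:
            # Minimum lies in [a, d]; the old c becomes the new right point.
            b, d, fd = d, c, fc
            c = b - INV_PHI * (b - a)
            fc = f(c)
        else:
            # Minimum lies in [c, b]; the old d becomes the new left point.
            a, c, fc = c, d, fd
            d = a + INV_PHI * (b - a)
            fd = f(d)
    return (a + b) / 2


def toy_round_energy(t_cmp, deadline=1.0, cycles=1e8, kappa=1e-28,
                     bits=1e6, bandwidth=1e6, gain_over_noise=1e3):
    """Hypothetical per-round energy (J) when t_cmp seconds go to training.

    Assumed toy model, not taken from the paper: the CPU runs at
    cycles / t_cmp, the remaining deadline - t_cmp seconds are used to
    upload `bits` at the Shannon rate of a single channel.
    """
    t_tx = deadline - t_cmp
    e_cmp = kappa * cycles ** 3 / t_cmp ** 2
    p_tx = (2 ** (bits / (bandwidth * t_tx)) - 1) / gain_over_noise
    return e_cmp + p_tx * t_tx


# Split a 1-second round between computation and upload.
t_opt = golden_section_minimize(toy_round_energy, 0.05, 0.95)
```

Golden-section search only assumes that the objective is unimodal on the interval and needs one new function evaluation per iteration, which makes it a natural fit for one-dimensional subproblems that remain after other variables have been resolved in closed form.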
