FIAS: Feature Imbalance-Aware Medical Image Segmentation with Dynamic Fusion and Mixing Attention

Paper and Code

Nov 16, 2024

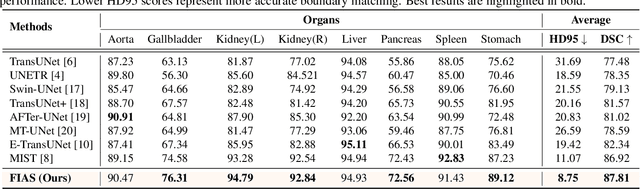

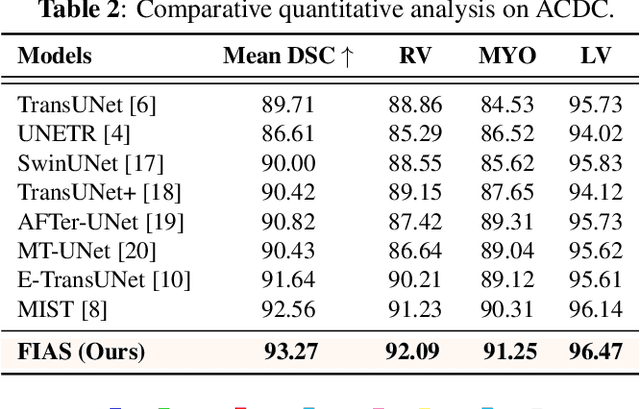

With the growing application of transformer in computer vision, hybrid architecture that combine convolutional neural networks (CNNs) and transformers demonstrates competitive ability in medical image segmentation. However, direct fusion of features from CNNs and transformers often leads to feature imbalance and redundant information. To address these issues, we propose a Feaure Imbalance-Aware Segmentation (FIAS) network, which incorporates a dual-path encoder and a novel Mixing Attention (MixAtt) decoder. The dual-branches encoder integrates a DilateFormer for long-range global feature extraction and a Depthwise Multi-Kernel (DMK) convolution for capturing fine-grained local details. A Context-Aware Fusion (CAF) block dynamically balances the contribution of these global and local features, preventing feature imbalance. The MixAtt decoder further enhances segmentation accuracy by combining self-attention and Monte Carlo attention, enabling the model to capture both small details and large-scale dependencies. Experimental results on the Synapse multi-organ and ACDC datasets demonstrate the strong competitiveness of our approach in medical image segmentation tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge