FedMVT: Semi-supervised Vertical Federated Learning with MultiView Training


Aug 25, 2020

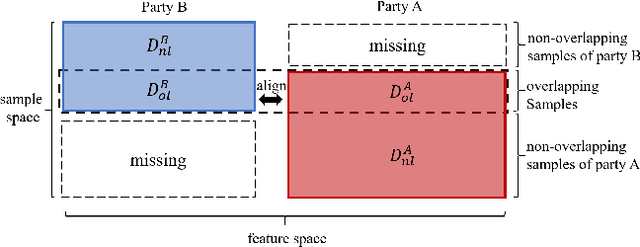

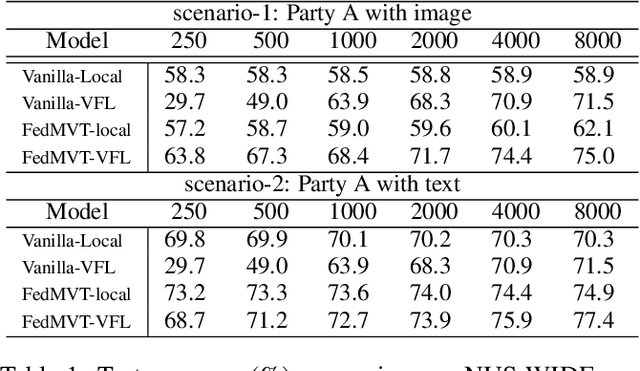

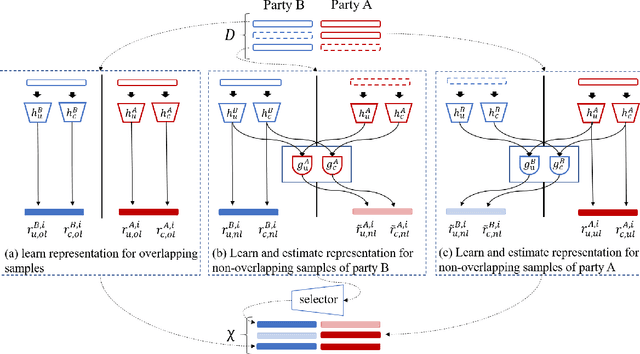

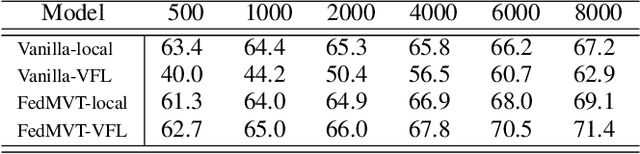

Federated learning allows many parties to collaboratively build a model without exposing their data. In particular, vertical federated learning (VFL) enables parties to build a robust shared machine learning model based upon distributed features about the same samples. However, VFL requires all parties to share a sufficient number of overlapping samples. In reality, the set of overlapping samples may be small, leaving the majority of the non-overlapping data unutilized. In this paper, we propose Federated Multi-View Training (FedMVT), a semi-supervised learning approach that improves the performance of VFL with limited overlapping samples. FedMVT estimates representations for missing features and predicts pseudo-labels for unlabeled samples to expand the training set, and it trains three classifiers jointly on different views of the input to improve the model's representation learning. FedMVT does not require parties to share their original data or model parameters, thus preserving data privacy. We conduct experiments on the NUS-WIDE and CIFAR10 datasets. The experimental results demonstrate that FedMVT significantly outperforms vanilla VFL that only utilizes overlapping samples, and improves the performance of the local model in the party that owns labels.
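To make the overlap problem and the pseudo-labeling idea concrete, here is a minimal sketch in plain Python. It is not the FedMVT implementation: the function names `split_by_overlap` and `agree_pseudo_label`, the sample IDs, and the 0.9 confidence threshold are all hypothetical, and the rule "accept a pseudo-label only when every view agrees with high confidence" is an illustrative assumption rather than the selection rule stated in the abstract.

```python
def split_by_overlap(ids_a, ids_b):
    """Partition sample IDs into overlapping and party-exclusive groups."""
    set_a, set_b = set(ids_a), set(ids_b)
    overlap = sorted(set_a & set_b)   # usable by vanilla VFL
    only_a = sorted(set_a - set_b)    # party B's features are missing
    only_b = sorted(set_b - set_a)    # party A's features are missing
    return overlap, only_a, only_b


def agree_pseudo_label(view_probs, threshold=0.9):
    """Return a class index if all views agree confidently, else None.

    view_probs holds one list of class probabilities per view
    (assumed rule for illustration, not taken from the paper).
    """
    picks = [max(range(len(p)), key=p.__getitem__) for p in view_probs]
    confident = all(p[k] >= threshold for p, k in zip(view_probs, picks))
    if confident and len(set(picks)) == 1:
        return picks[0]
    return None


# Hypothetical sample IDs held by two parties.
ids_a = ["u1", "u2", "u3", "u4", "u5"]
ids_b = ["u4", "u5", "u6", "u7"]
overlap, only_a, only_b = split_by_overlap(ids_a, ids_b)
```

In this toy setting only two of seven distinct samples overlap, so a model trained on the overlap alone ignores most of the data. A semi-supervised scheme would try to bring samples from `only_a` and `only_b` into training, for example by estimating the missing representations and keeping only pseudo-labels that pass a check like `agree_pseudo_label`.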
