FEDIC: Federated Learning on Non-IID and Long-Tailed Data via Calibrated Distillation

Paper and Code

Apr 30, 2022

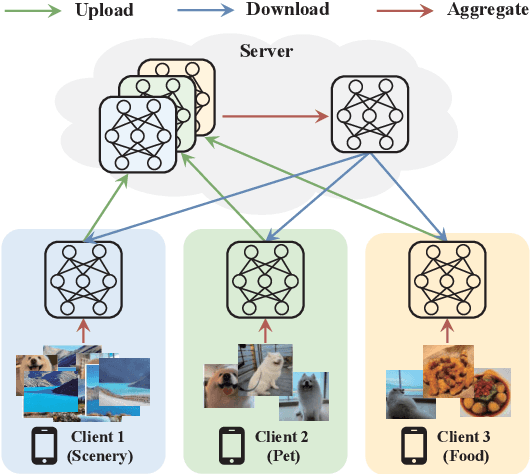

Federated learning provides a privacy guarantee for generating good deep learning models on distributed clients with different kinds of data. Nevertheless, dealing with non-IID data is one of the most challenging problems for federated learning. Researchers have proposed a variety of methods to eliminate the negative influence of non-IIDness. However, they only focus on the non-IID data provided that the universal class distribution is balanced. In many real-world applications, the universal class distribution is long-tailed, which causes the model seriously biased. Therefore, this paper studies the joint problem of non-IID and long-tailed data in federated learning and proposes a corresponding solution called Federated Ensemble Distillation with Imbalance Calibration (FEDIC). To deal with non-IID data, FEDIC uses model ensemble to take advantage of the diversity of models trained on non-IID data. Then, a new distillation method with logit adjustment and calibration gating network is proposed to solve the long-tail problem effectively. We evaluate FEDIC on CIFAR-10-LT, CIFAR-100-LT, and ImageNet-LT with a highly non-IID experimental setting, in comparison with the state-of-the-art methods of federated learning and long-tail learning. Our code is available at https://github.com/shangxinyi/FEDIC.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge