Federated Temporal Graph Clustering

Paper and Code

Oct 16, 2024

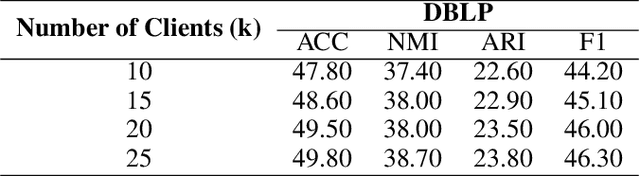

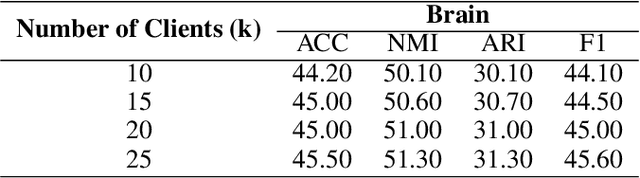

Temporal graph clustering aims to discover meaningful structure in dynamic graphs, where entities and relationships change over time. Existing methods typically require centralized data collection, which raises significant privacy and communication challenges. In this work, we introduce Federated Temporal Graph Clustering (FTGC), a framework that trains graph neural networks (GNNs) in a decentralized manner across multiple clients while preserving data privacy throughout training. Our approach combines a temporal aggregation mechanism, which captures how graph structure evolves over time, with a federated optimization strategy that collaboratively learns high-quality clustering representations. The framework preserves data privacy, reduces communication overhead, and achieves competitive performance on temporal graph datasets, making it a promising solution for privacy-sensitive real-world applications involving dynamic data.
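The abstract does not spell out either mechanism, so the following is only a minimal sketch of the two ideas under stated assumptions. It assumes temporal aggregation can be approximated by an exponentially decayed average of per-snapshot node embeddings, and that federated optimization resembles FedAvg-style weighted averaging of client parameters. The function names `temporal_aggregate` and `fed_avg`, the `decay` parameter, and the parameter layout are hypothetical and are not taken from the paper.

```python
from typing import Dict, List

# Hypothetical parameter layout: parameter name -> flat list of floats.
Params = Dict[str, List[float]]


def temporal_aggregate(snapshots: List[List[float]], decay: float = 0.5) -> List[float]:
    """Exponentially decayed average of one node's embeddings across snapshots.

    `snapshots` is ordered oldest first; the newest snapshot gets weight 1,
    the one before it `decay`, then `decay**2`, and so on.
    This is an assumed stand-in, not the paper's mechanism.
    """
    if not snapshots:
        raise ValueError("need at least one snapshot")
    n = len(snapshots)
    weights = [decay ** (n - 1 - t) for t in range(n)]
    total = sum(weights)
    dim = len(snapshots[0])
    return [
        sum(w * s[i] for w, s in zip(weights, snapshots)) / total
        for i in range(dim)
    ]


def fed_avg(client_params: List[Params], client_sizes: List[int]) -> Params:
    """FedAvg-style aggregation: average client parameters weighted by data size.

    Only parameters are exchanged here; each client's graph data stays local.
    """
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one size per client and at least one client")
    total = sum(client_sizes)
    averaged: Params = {}
    for name, first in client_params[0].items():
        averaged[name] = [
            sum(n * p[name][i] for p, n in zip(client_params, client_sizes)) / total
            for i in range(len(first))
        ]
    return averaged


if __name__ == "__main__":
    # One node observed in two snapshots (oldest first).
    print(temporal_aggregate([[0.0, 0.0], [3.0, 6.0]], decay=0.5))
    # Two clients holding 1 and 3 local samples respectively.
    print(fed_avg([{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}], [1, 3]))
```

In a full training loop, each client would presumably compute temporally aggregated embeddings on its own graph, update its local model, and send only parameters to the server for a step like `fed_avg`. How the paper actually weights snapshots or clients is not stated in the abstract.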
