Federated Face Anti-spoofing

Paper and Code

May 29, 2020

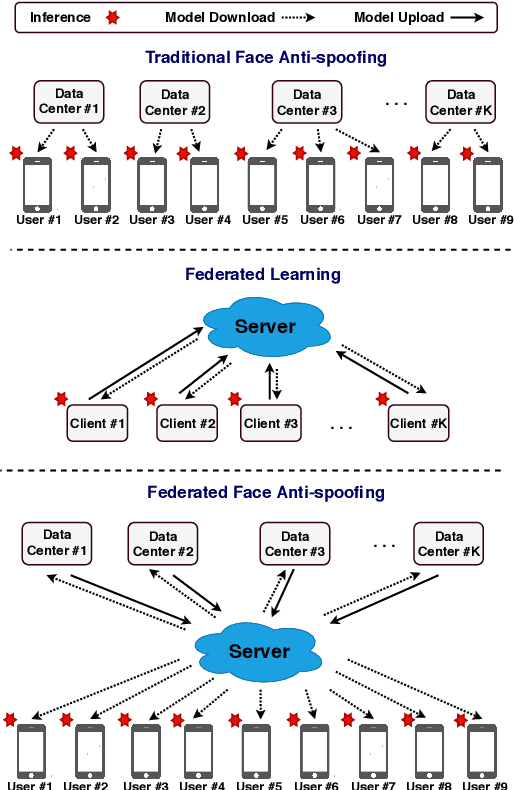

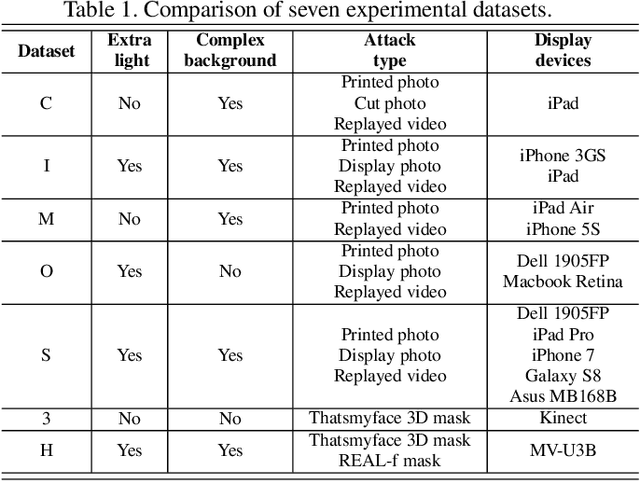

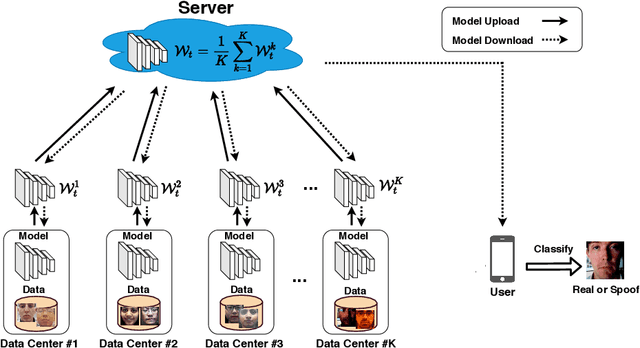

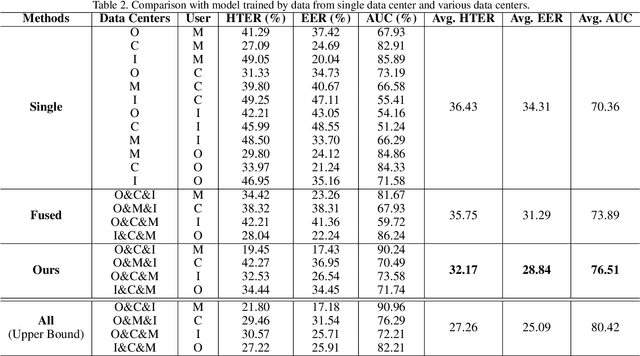

Face presentation attack detection plays a critical role in the modern face recognition pipeline. A face anti-spoofing (FAS) model generalizes well when it is trained on face images from different input distributions and different types of spoof attacks. In practice, however, training data (both real face images and spoof images) are not directly shared between data owners because of legal and privacy constraints. To address this challenge, we propose the Federated Face Anti-spoofing (FedFAS) framework. FedFAS takes advantage of the rich FAS information available at different data owners while preserving data privacy. In the proposed framework, each data owner (referred to as a *data center*) locally trains its own FAS model. A server learns a global FAS model by iteratively aggregating model updates from all data centers, without accessing the private data held by any of them. Once the global model converges, it is used for FAS inference. We introduce an experimental setting to evaluate the proposed FedFAS framework and carry out extensive experiments that provide insights into federated learning for FAS.
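The training loop described above (local training at each data center, followed by server-side aggregation) can be illustrated with a minimal sketch. The abstract does not state the aggregation rule, so the sample-weighted averaging below (in the style of FedAvg) is an assumption, as are the function names `local_update`, `aggregate`, and `federated_round`, the flat list-of-floats model, and the single gradient step standing in for local training.

```python
from typing import List

Weights = List[float]  # a model is represented as a flat list of parameters (assumption)


def local_update(global_w: Weights, gradient: Weights, lr: float = 0.1) -> Weights:
    """Hypothetical local training at one data center.

    A single gradient step from the current global model stands in for
    full local training on that center's private data.
    """
    return [w - lr * g for w, g in zip(global_w, gradient)]


def aggregate(local_ws: List[Weights], num_samples: List[int]) -> Weights:
    """Server-side aggregation: sample-weighted average of local models.

    This FedAvg-style rule is assumed; only model parameters reach the
    server, never the underlying face images.
    """
    total = sum(num_samples)
    dim = len(local_ws[0])
    return [
        sum(n * w[i] for w, n in zip(local_ws, num_samples)) / total
        for i in range(dim)
    ]


def federated_round(
    global_w: Weights,
    gradients: List[Weights],
    num_samples: List[int],
    lr: float = 0.1,
) -> Weights:
    """One communication round: broadcast, local update, aggregate."""
    local_ws = [local_update(global_w, g, lr) for g in gradients]
    return aggregate(local_ws, num_samples)
```

In this sketch the server would call `federated_round` repeatedly until the global model stops changing, which corresponds to the convergence condition mentioned in the abstract.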
