FedDW: Distilling Weights through Consistency Optimization in Heterogeneous Federated Learning

Paper and Code

Dec 05, 2024

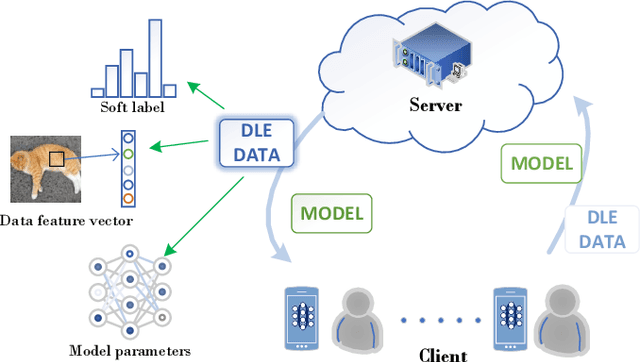

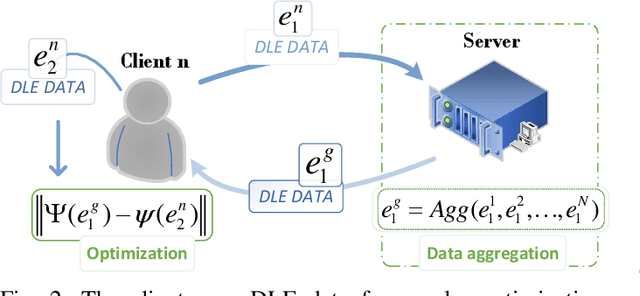

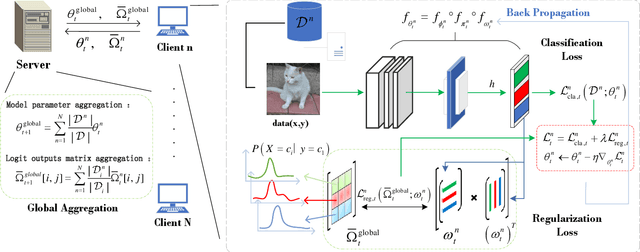

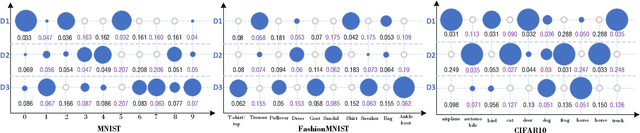

Federated Learning (FL) is a distributed machine learning paradigm that trains neural networks across devices without centralizing data. While this addresses concerns about information sharing and data privacy, data heterogeneity across clients and growing network scale degrade model performance and training efficiency. Previous research shows that in IID environments, the parameter structure of the model is expected to adhere to certain consistency principles. Identifying these consistencies and using them as regularizers can therefore mitigate the problems caused by heterogeneous data. We found that both the soft labels derived from knowledge distillation and the classifier head parameter matrix, when multiplied by its own transpose, capture the intrinsic relationships between data classes. Because the two encode the same relationships, they should be consistent with each other. This paper identifies that consistency and leverages it to regularize training, which forms the basis of our proposed FedDW framework. Experimental results show that FedDW outperforms 10 state-of-the-art FL methods, improving accuracy by an average of 3% in highly heterogeneous settings. Additionally, we provide a theoretical proof that FedDW offers higher efficiency, with the additional computational load from backpropagation being negligible. The code is available at https://github.com/liuvvvvv1/FedDW.
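To make the central observation concrete, the following is a minimal NumPy sketch of how a class-relation matrix built from a classifier head could be compared with per-class soft labels. It is an illustration under stated assumptions, not the FedDW implementation: the function names, the row-wise softmax applied to the head's Gram matrix, the temperature value, and the mean-squared-error penalty are all hypothetical choices made here, and the abstract does not specify the exact form of the regularizer. See the linked repository for the authors' code.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def class_soft_labels(logits, labels, num_classes, temperature=2.0):
    """Average the temperature-softened predictions of each class.

    Row c is the mean soft label over all samples whose true class is c.
    The temperature value is an assumption, not taken from the paper.
    """
    probs = softmax(logits / temperature, axis=1)
    soft = np.full((num_classes, num_classes), 1.0 / num_classes)
    for c in range(num_classes):
        mask = labels == c
        if mask.any():  # classes absent from the batch keep a uniform row
            soft[c] = probs[mask].mean(axis=0)
    return soft


def head_relation(W):
    """Class-relation matrix from the classifier head W (classes x features).

    W @ W.T is the head multiplied by its own transpose; the row-wise
    softmax is an assumed normalization so rows are comparable to soft labels.
    """
    return softmax(W @ W.T, axis=1)


def consistency_penalty(W, soft):
    """Assumed penalty: mean squared error between the two relation matrices."""
    return float(np.mean((head_relation(W) - soft) ** 2))


# Toy data standing in for one client's batch (all values are synthetic).
rng = np.random.default_rng(0)
num_classes, feat_dim, n_samples = 4, 8, 32
W = rng.normal(size=(num_classes, feat_dim))
features = rng.normal(size=(n_samples, feat_dim))
labels = rng.integers(0, num_classes, size=n_samples)

logits = features @ W.T
soft = class_soft_labels(logits, labels, num_classes)
penalty = consistency_penalty(W, soft)
print(f"consistency penalty: {penalty:.4f}")
```

In a training loop, a term like `penalty` would be added to the local task loss with some weighting coefficient, so that each client's head is nudged toward the class relationships expressed by the soft labels. How those soft labels are aggregated and shared across clients is not covered by this sketch.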
