Feature robustness and sex differences in medical imaging: a case study in MRI-based Alzheimer's disease detection


Apr 12, 2022

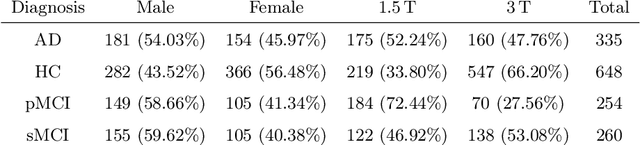

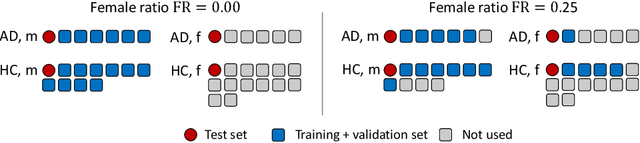

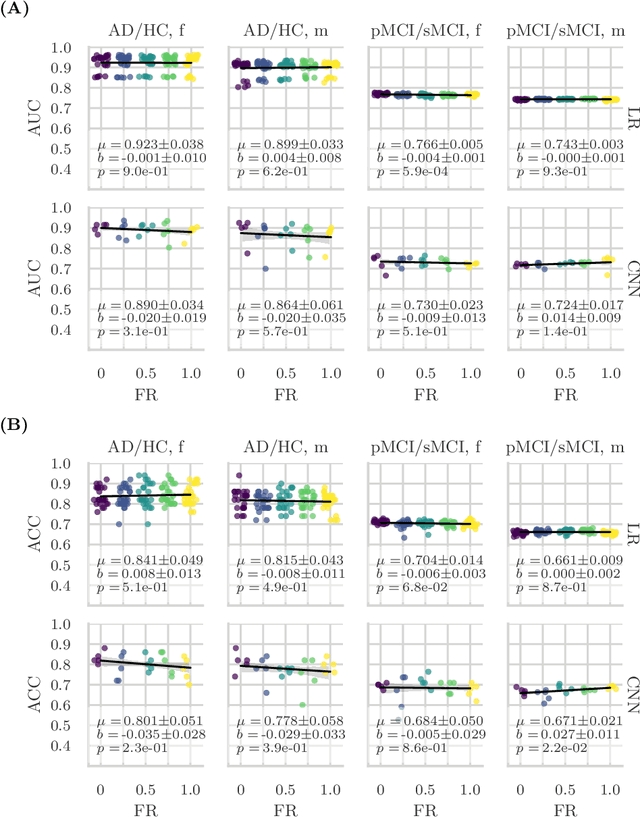

Convolutional neural networks have enabled significant improvements in medical image-based disease classification. It has, however, become increasingly clear that these models are susceptible to performance degradation due to spurious correlations and dataset shifts, which may lead, among other problems, to underperformance on underrepresented patient groups. In this paper, we compare two classification schemes on the ADNI MRI dataset: a very simple logistic regression model that uses manually selected volumetric features as inputs, and a convolutional neural network trained on 3D MRI data. We assess the robustness of the trained models with respect to varying dataset splits, training set sex composition, and stage of disease. In contrast to earlier work on diagnosing lung diseases from chest X-ray data, we do not find that model performance for male and female test subjects depends strongly on the sex composition of the training dataset. Moreover, in our analysis, the low-dimensional model with manually selected features outperforms the 3D CNN, which emphasizes both the need for automatic robust feature extraction methods and the value of manual feature specification (based on prior knowledge) for robustness.
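To make the idea of varying the training set sex composition concrete, the following is a minimal sketch of how fixed-size training sets with different female fractions could be drawn. It is not the authors' code: the function name `sample_by_sex_ratio`, the subject schema, the cohort size, and the five ratios are all assumptions chosen for illustration, and the toy cohort is synthetic rather than ADNI data.

```python
import random


def sample_by_sex_ratio(subjects, n_train, female_fraction, seed=0):
    """Draw n_train subjects with the requested fraction of female subjects.

    `subjects` is a list of dicts with a "sex" key ("F" or "M"); this schema
    is hypothetical and only serves the illustration.
    """
    rng = random.Random(seed)
    n_female = round(n_train * female_fraction)
    n_male = n_train - n_female
    females = [s for s in subjects if s["sex"] == "F"]
    males = [s for s in subjects if s["sex"] == "M"]
    if n_female > len(females) or n_male > len(males):
        raise ValueError("not enough subjects of one sex for this ratio")
    # Sample without replacement within each sex, then combine.
    return rng.sample(females, n_female) + rng.sample(males, n_male)


# Toy cohort: 60 female and 60 male placeholder subjects (synthetic).
cohort = [{"id": i, "sex": "F" if i % 2 == 0 else "M"} for i in range(120)]

# Training sets of equal size at five assumed sex ratios.
ratios = [0.0, 0.25, 0.5, 0.75, 1.0]
train_sets = {r: sample_by_sex_ratio(cohort, 40, r) for r in ratios}

for r, subset in train_sets.items():
    n_f = sum(s["sex"] == "F" for s in subset)
    print(f"female fraction {r:.2f}: {n_f} F, {len(subset) - n_f} M")
```

Keeping the training set size constant across ratios means that any difference in test performance for male and female subjects can be attributed to composition rather than to the amount of training data. A model would then be trained on each subset and evaluated separately on male and female test subjects.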
