Fast Point Voxel Convolution Neural Network with Selective Feature Fusion for Point Cloud Semantic Segmentation


Sep 23, 2021

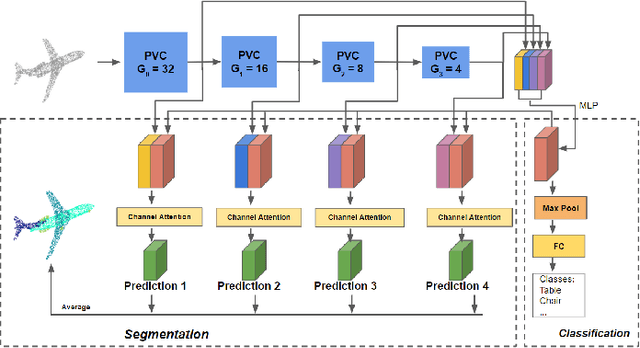

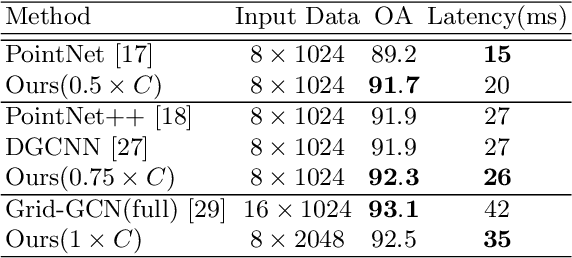

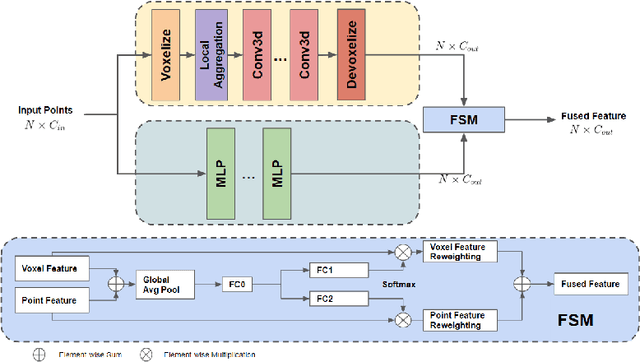

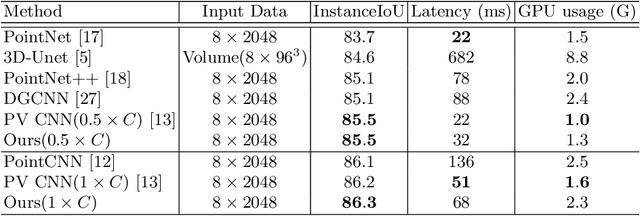

We present a novel lightweight convolutional neural network for point cloud analysis. In contrast to many current CNNs, which enlarge the receptive field by downsampling the point cloud, our method operates directly on the entire point set without sampling and achieves good performance efficiently. Our network uses the point voxel convolution (PVC) layer as its building block. Each layer has two parallel branches: the voxel branch and the point branch. In the voxel branch, we aggregate local features at non-empty voxel centers to reduce the geometric information loss caused by voxelization, then apply volumetric convolutions to strengthen the encoding of local neighborhood geometry. In the point branch, we use a multi-layer perceptron (MLP) to extract fine-grained point-wise features. The outputs of the two branches are adaptively fused by a feature selection module. Moreover, we supervise the output of every PVC layer so that the network learns different levels of semantic information. The final prediction is the average of all intermediate predictions. We demonstrate empirically that our method achieves comparable results while being fast and memory efficient. We evaluate our method on popular point cloud datasets for object classification and semantic segmentation tasks.
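To make the data flow of a PVC layer concrete, the following is a minimal sketch in plain Python, not the authors' implementation. It rests on several assumptions: voxel aggregation is shown as a simple mean over the points in each non-empty voxel, the volumetric convolution and the MLP are omitted, and the feature selection module is approximated by a per-channel softmax gate over the two branches. The function names (`voxel_aggregate`, `selective_fuse`, `average_predictions`) and the gate parameters `gate_v` and `gate_p` are hypothetical and do not come from the paper.

```python
import math
from collections import defaultdict


def voxel_aggregate(points, feats, voxel_size):
    """Mean-pool point features into the non-empty voxels they fall in.

    Assumption: a plain average stands in for the paper's aggregation step.
    Returns the voxel key of every point and a dict of per-voxel features.
    """
    sums = {}
    counts = defaultdict(int)
    keys = []
    for p, f in zip(points, feats):
        key = tuple(math.floor(c / voxel_size) for c in p)
        keys.append(key)
        if key not in sums:
            sums[key] = [0.0] * len(f)
        sums[key] = [s + x for s, x in zip(sums[key], f)]
        counts[key] += 1
    voxel_feats = {k: [s / counts[k] for s in v] for k, v in sums.items()}
    return keys, voxel_feats


def selective_fuse(voxel_f, point_f, gate_v, gate_p):
    """Fuse two feature vectors with a per-channel softmax gate.

    Assumption: gate_v and gate_p are hypothetical learned per-channel
    weights; the paper's feature selection module may differ.
    """
    fused = []
    for v, p, gv, gp in zip(voxel_f, point_f, gate_v, gate_p):
        lv, lp = gv * v, gp * p          # one logit per branch
        m = max(lv, lp)                  # subtract max for stability
        ev, ep = math.exp(lv - m), math.exp(lp - m)
        a = ev / (ev + ep)               # weight given to the voxel branch
        fused.append(a * v + (1.0 - a) * p)
    return fused


def average_predictions(layer_preds):
    """Average per-class scores predicted by every layer for one point."""
    n = len(layer_preds)
    return [sum(col) / n for col in zip(*layer_preds)]


# Toy usage: three points with two feature channels each.
points = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.1), (1.5, 0.2, 0.2)]
feats = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
keys, voxel_feats = voxel_aggregate(points, feats, voxel_size=1.0)

# Each point reads back the feature of its own voxel, then fuses it
# with its point-wise feature (used unchanged here in place of an MLP).
fused = [
    selective_fuse(voxel_feats[k], f, gate_v=[0.0, 0.0], gate_p=[0.0, 0.0])
    for k, f in zip(keys, feats)
]
```

With both gates set to zero the two branches receive equal weight, so the fused feature is simply their mean. In a trained network the gate would instead be learned, letting each channel lean toward the voxel branch or the point branch.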
