Fast Direct Stereo Visual SLAM

Dec 03, 2021

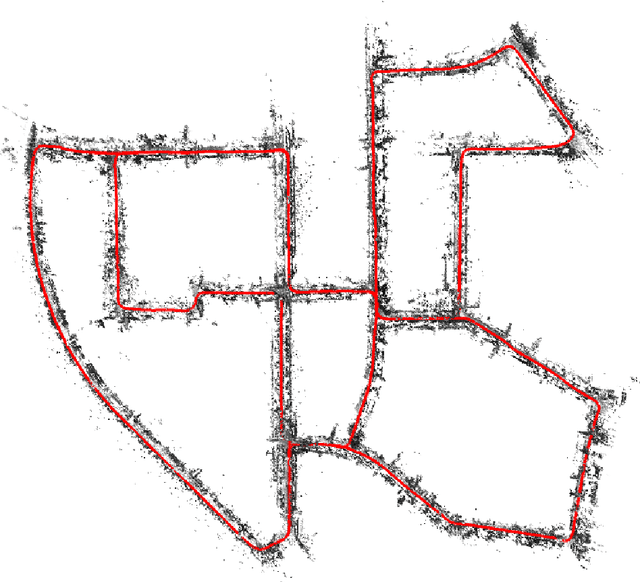

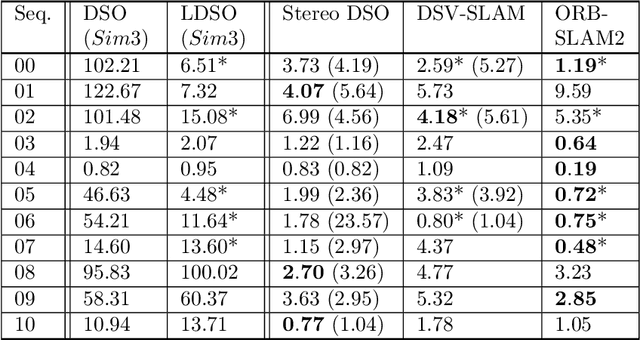

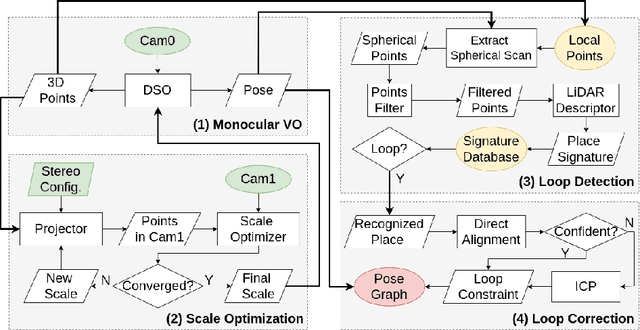

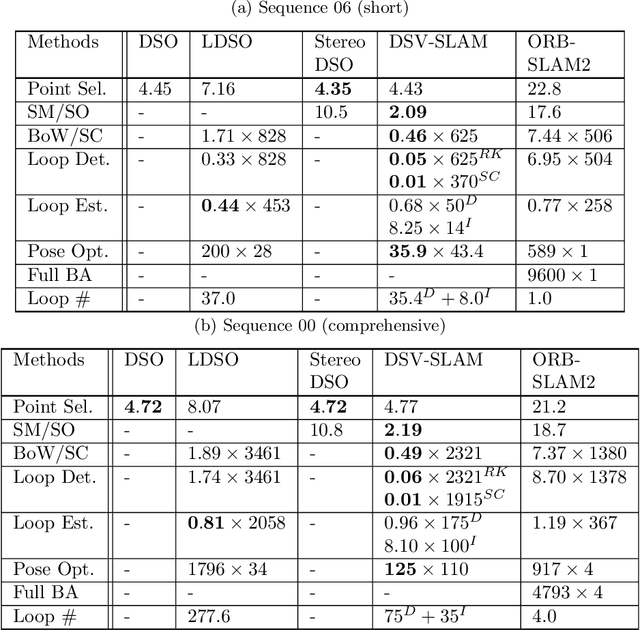

We propose a novel approach for fast and accurate stereo visual Simultaneous Localization and Mapping (SLAM) that does not depend on feature detection or matching. We extend monocular Direct Sparse Odometry (DSO) to a stereo system by optimizing the scale of the 3D points to minimize the photometric error in the stereo configuration, which yields a method that is more computationally efficient and robust than conventional stereo matching. We further extend it to a full SLAM system with loop closure to reduce accumulated errors. Assuming forward camera motion, we imitate a LiDAR scan using the 3D points obtained from the visual odometry and adapt a LiDAR descriptor for place recognition, which makes loop-closure detection more efficient. For each potential loop closure, we then estimate the relative pose by direct alignment, minimizing the photometric error. Optionally, the Iterative Closest Point (ICP) algorithm refines the direct-alignment estimate. Lastly, we optimize a pose graph to improve SLAM accuracy globally. By avoiding feature detection and matching throughout the SLAM system, we ensure high computational efficiency and robustness. Thorough experimental validation on public datasets demonstrates the system's effectiveness relative to state-of-the-art approaches.
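To make the stereo scale step more concrete, here is a minimal sketch of recovering a single global scale for monocular depths by minimizing the left/right photometric error on a rectified stereo pair. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not say which optimizer is used, so a plain grid search over candidate scales stands in for it, and the function names (`bilinear`, `estimate_stereo_scale`, `demo`), the synthetic fronto-parallel scene, and all numeric parameters are hypothetical.

```python
import numpy as np


def bilinear(img, u, v):
    """Sample img at sub-pixel locations (u = column, v = row)."""
    u0 = np.clip(np.floor(u).astype(int), 0, img.shape[1] - 2)
    v0 = np.clip(np.floor(v).astype(int), 0, img.shape[0] - 2)
    du, dv = u - u0, v - v0
    return ((1 - du) * (1 - dv) * img[v0, u0]
            + du * (1 - dv) * img[v0, u0 + 1]
            + (1 - du) * dv * img[v0 + 1, u0]
            + du * dv * img[v0 + 1, u0 + 1])


def estimate_stereo_scale(left, right, u, v, mono_depth, fx, baseline, candidates):
    """Return the candidate scale with the lowest left/right photometric error.

    Assumption: a grid search replaces whatever optimizer the paper uses.
    """
    ref = left[v, u]  # intensities of the tracked points in the left image
    errors = []
    for s in candidates:
        # Rectified stereo: disparity = fx * baseline / metric depth.
        disparity = fx * baseline / (s * mono_depth)
        residual = ref - bilinear(right, u - disparity, v.astype(float))
        errors.append(np.mean(residual ** 2))
    return candidates[int(np.argmin(errors))]


def demo():
    """Synthetic check: a textured plane at constant depth (hypothetical values)."""
    rng = np.random.default_rng(0)
    h, w, fx, baseline = 120, 400, 500.0, 0.1
    true_depth, true_scale = 5.0, 2.0
    shift = int(round(fx * baseline / true_depth))  # true disparity in pixels

    left = rng.random((h, w))
    right = np.zeros_like(left)
    right[:, : w - shift] = left[:, shift:]  # right view is the left view shifted

    u = rng.integers(60, w - 60, size=200)
    v = rng.integers(10, h - 10, size=200)
    mono_depth = np.full(200, true_depth / true_scale)  # depths known only up to scale

    candidates = np.linspace(0.5, 4.0, 351)
    return estimate_stereo_scale(left, right, u, v, mono_depth, fx, baseline, candidates)


if __name__ == "__main__":
    print("estimated scale:", demo())
```

On this synthetic pair the search should land close to the scale of 2.0 used to generate the data. The point of the sketch is that only sparse point intensities are compared across the two views, so no dense stereo matching or feature correspondence is needed.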
