Fairness in Algorithmic Profiling: A German Case Study

Paper and Code

Aug 04, 2021

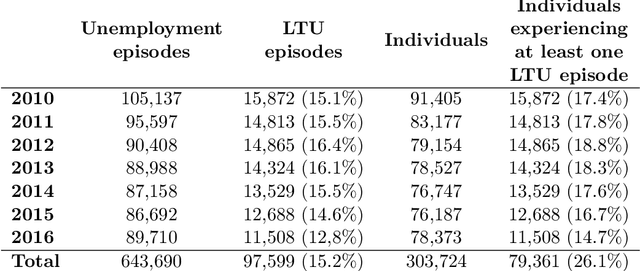

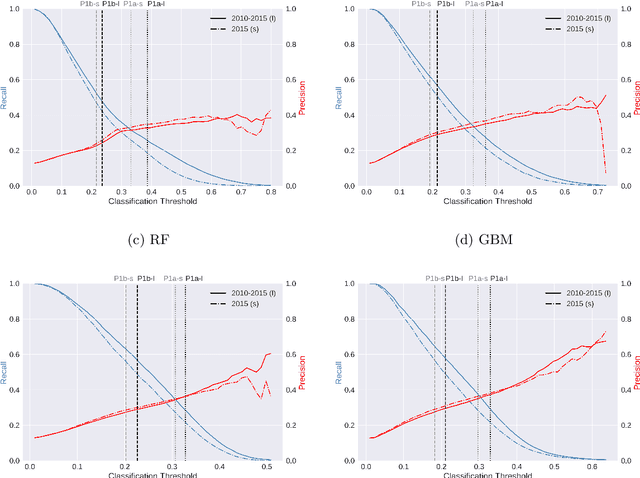

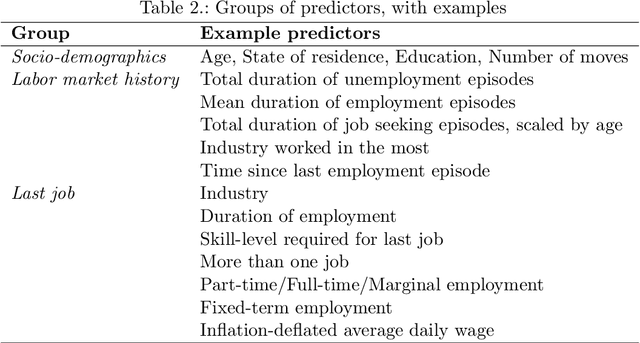

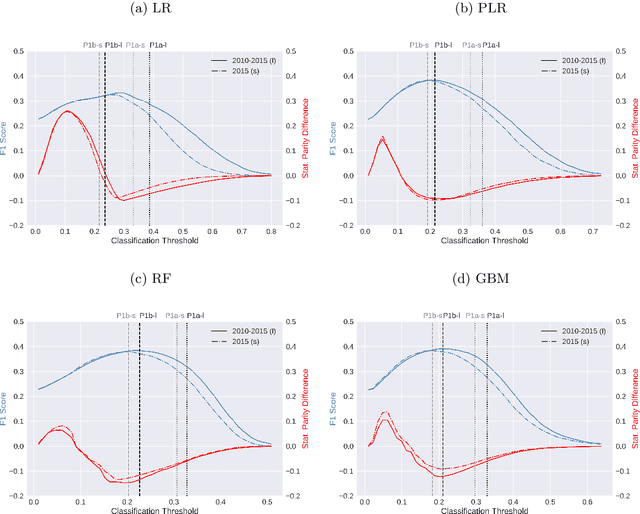

Algorithmic profiling is increasingly used in the public sector as a means to allocate limited public resources effectively and objectively. One example is the prediction-based statistical profiling of job seekers to guide the allocation of support measures by public employment services. However, empirical evaluations of potential side effects, such as unintended discrimination and fairness concerns, are rare. In this study, we compare and evaluate statistical models for predicting job seekers' risk of becoming long-term unemployed with respect to prediction performance, fairness metrics, and vulnerabilities to data analysis decisions. Focusing on Germany as a use case, we evaluate profiling models under realistic conditions by utilizing administrative data on job seekers' employment histories that are routinely collected by German public employment services. Besides showing that these data can be used to predict long-term unemployment with competitive levels of accuracy, we highlight that different classification policies have very different fairness implications. We therefore call for rigorous auditing processes before such models are put into practice.
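To illustrate how the choice of classification policy alone can change a fairness metric, the following minimal sketch applies two policies to the same set of risk scores and compares group-wise selection rates. It is not the study's method or data: the scores, the group labels `A` and `B`, the two policies (a fixed cutoff and a top-k rule), and all function names are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical predicted risk scores and group labels (not data from the study).
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.6, 0.55, 0.5, 0.2, 0.1]
groups = ["A"] * 5 + ["B"] * 5


def threshold_policy(scores, cutoff):
    """Flag everyone whose score is at or above a fixed cutoff."""
    return [s >= cutoff for s in scores]


def top_k_policy(scores, k):
    """Flag the k highest-scoring individuals, e.g. under a fixed budget."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    chosen = set(ranked[:k])
    return [i in chosen for i in range(len(scores))]


def selection_rate_by_group(flags, groups):
    """Share of each group that is flagged as high risk."""
    totals, hits = defaultdict(int), defaultdict(int)
    for flagged, group in zip(flags, groups):
        totals[group] += 1
        hits[group] += int(flagged)
    return {g: hits[g] / totals[g] for g in totals}


def parity_gap(rates):
    """Largest difference in selection rates between any two groups."""
    return max(rates.values()) - min(rates.values())


rates_cutoff = selection_rate_by_group(threshold_policy(scores, 0.5), groups)
rates_top_k = selection_rate_by_group(top_k_policy(scores, 3), groups)

print("cutoff 0.5:", rates_cutoff, "gap:", parity_gap(rates_cutoff))
print("top 3:     ", rates_top_k, "gap:", parity_gap(rates_top_k))
```

In this toy setup the fixed cutoff flags both groups at the same rate, while the top-k rule flags only members of group `A`, even though the underlying scores are identical. The gap in selection rates used here is just one of several possible fairness metrics.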
