Fair and Optimal Classification via Transports to Wasserstein-Barycenter

Paper and Code

Nov 03, 2022

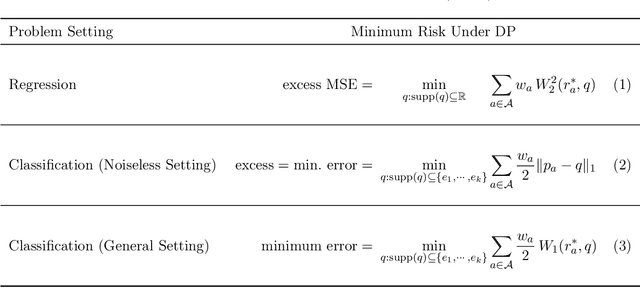

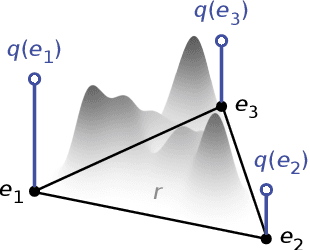

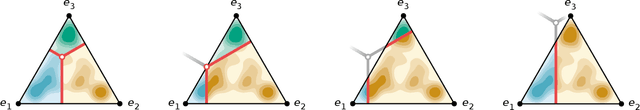

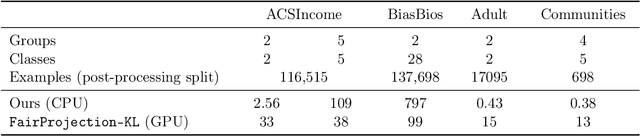

Fairness in automated decision-making systems has gained increasing attention as their applications expand to real-world high-stakes domains. To facilitate the design of fair ML systems, it is essential to understand the potential trade-offs between fairness and predictive power, and the construction of the optimal predictor under a given fairness constraint. In this paper, for general classification problems under the group fairness criterion of demographic parity (DP), we precisely characterize the trade-off between DP and classification accuracy, referred to as the minimum cost of fairness. Our insight comes from the key observation that finding the optimal fair classifier is equivalent to solving a Wasserstein-barycenter problem under $\ell_1$-norm restricted to the vertices of the probability simplex. Inspired by our characterization, we provide a construction of an optimal fair classifier achieving this minimum cost via the composition of the Bayes regressor and optimal transports from its output distributions to the barycenter. Our construction naturally leads to an algorithm for post-processing any pre-trained predictor to satisfy DP fairness, complemented with finite sample guarantees. Experiments on real-world datasets verify and demonstrate the effectiveness of our approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge