Failure-informed adaptive sampling for PINNs


Oct 09, 2022

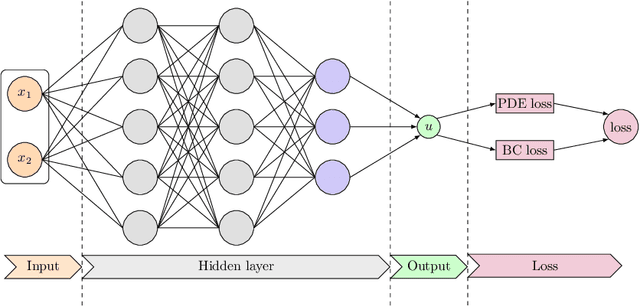

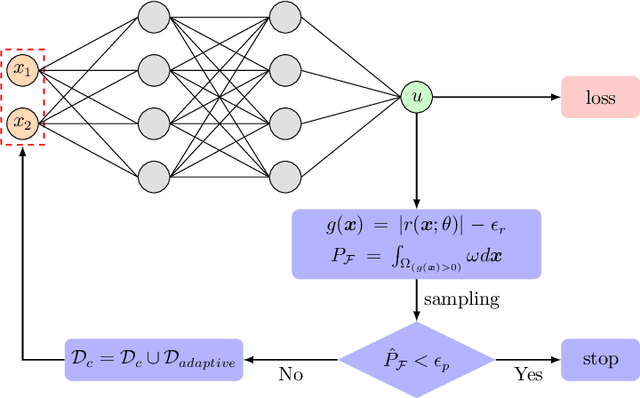

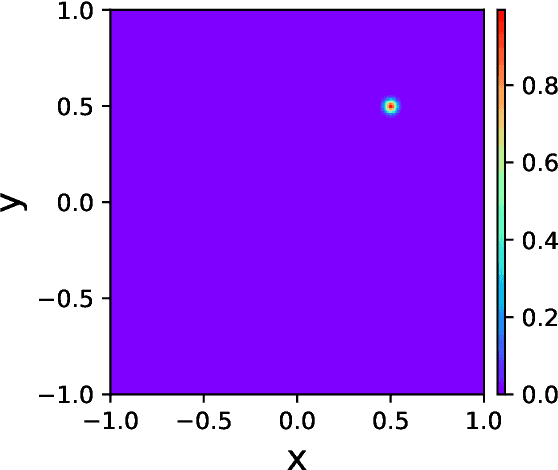

Physics-informed neural networks (PINNs) have emerged as an effective technique for solving PDEs in a wide range of domains. It has been observed, however, that the performance of PINNs can vary dramatically with the sampling procedure. For instance, a fixed set of training points chosen a priori may fail to capture the effective solution region, especially for problems with singularities. To overcome this issue, we present an adaptive strategy, termed failure-informed PINNs (FI-PINNs), which is inspired by the viewpoint of reliability analysis. The key idea is to define an effective failure probability based on the residual. Then, with the aim of placing more samples in the failure region, FI-PINNs employ a failure-informed enrichment technique to adaptively add new collocation points to the training set, so that the numerical accuracy is dramatically improved. In short, similar to adaptive finite element methods, the proposed FI-PINNs adopt the failure probability as the a posteriori error indicator to generate new training points. We prove rigorous error bounds for FI-PINNs and illustrate their performance on several problems.
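As a rough illustration of the enrichment idea described above, the following is a minimal sketch and not the paper's implementation. It assumes a uniform candidate distribution on the unit square, a plain Monte Carlo estimate of the failure probability P(|r(x)| > tol), and a hypothetical `toy_residual` standing in for the residual of a trained PINN. The function names, thresholds, and sample sizes are all illustrative assumptions.

```python
import numpy as np


def toy_residual(x):
    """Hypothetical stand-in for a PINN residual: a sharp peak near (0.5, 0.5)."""
    return np.exp(-200.0 * np.sum((x - 0.5) ** 2, axis=1))


def estimate_failure(residual_fn, candidates, tol):
    """Monte Carlo estimate of P(|r(x)| > tol) over the candidate points.

    Returns the estimated probability and the boolean mask of failed points.
    """
    mask = np.abs(residual_fn(candidates)) > tol
    return mask.mean(), mask


def enrich(train_pts, residual_fn, rng, tol=0.1, p_tol=0.01,
           n_candidates=5000, n_new=50):
    """Add up to n_new collocation points drawn from the failure region.

    Assumption: candidates are uniform on [0, 1]^d; tol and p_tol are
    illustrative thresholds, not values taken from the paper.
    """
    dim = train_pts.shape[1]
    candidates = rng.uniform(0.0, 1.0, size=(n_candidates, dim))
    p_fail, mask = estimate_failure(residual_fn, candidates, tol)
    if p_fail <= p_tol:
        # Failure probability is small enough: no enrichment needed.
        return train_pts, p_fail
    failed = candidates[mask]
    take = min(n_new, len(failed))
    idx = rng.choice(len(failed), size=take, replace=False)
    return np.vstack([train_pts, failed[idx]]), p_fail


rng = np.random.default_rng(0)
train = rng.uniform(0.0, 1.0, size=(100, 2))
new_train, p_fail = enrich(train, toy_residual, rng)
```

In a full training loop, one would presumably retrain the network on `new_train`, recompute the residual, and repeat until the estimated failure probability drops below `p_tol`. Plain Monte Carlo is used here only for simplicity; it becomes inefficient when the failure region is very small.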
