Extreme Learning Machine with Local Connections

Paper and Code

Jan 22, 2018

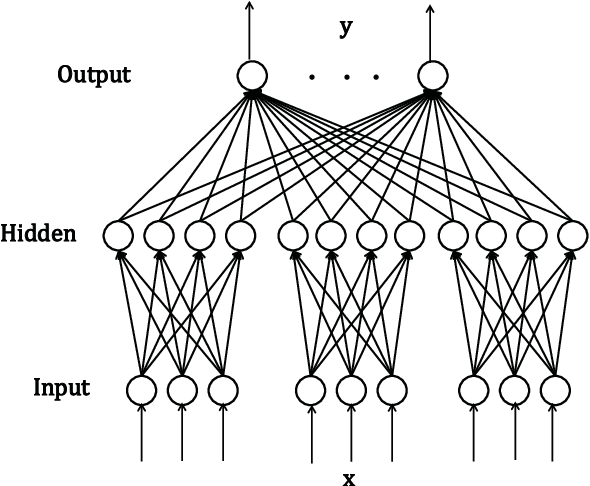

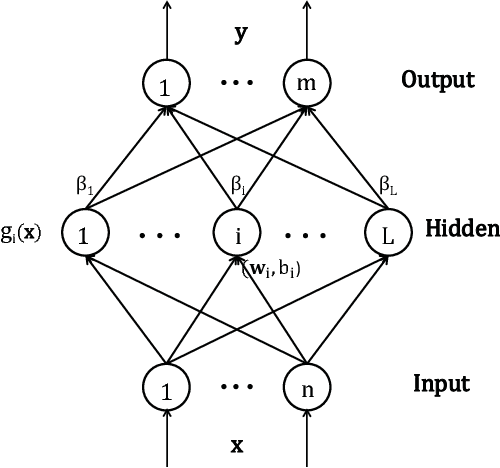

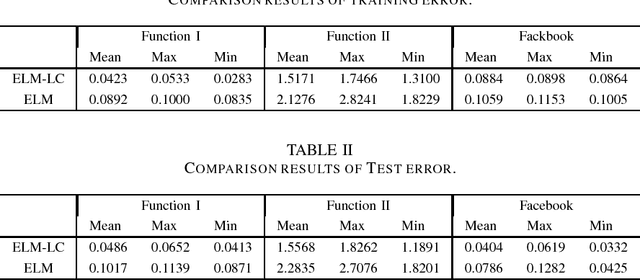

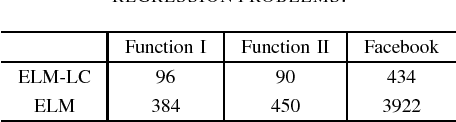

This paper is concerned with the sparsification of the input-hidden weights of ELM (Extreme Learning Machine). For ordinary feedforward neural networks, sparsification is usually achieved by introducing a regularization technique into the learning process of the network. But this strategy cannot be applied to ELM, since the input-hidden weights of ELM are supposed to be randomly chosen rather than learned. To this end, we propose a modified ELM, called ELM-LC (ELM with local connections), which sparsifies the input-hidden weights as follows: the hidden nodes and the input nodes are each divided into several corresponding groups, and an input node group is fully connected with its corresponding hidden node group but is not connected with any other hidden node group. As in the usual ELM, the input-hidden weights are randomly given, and the hidden-output weights are obtained through least-squares learning. In numerical simulations on some benchmark problems, the new ELM-LC behaves better than the traditional ELM.
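The grouping scheme described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The function names (`block_mask`, `fit_elm_lc`, `predict`), the split into contiguous, roughly equal-sized groups, the tanh activation, the hidden biases, and the uniform sampling range are all assumptions made for illustration; the abstract specifies only that connections are restricted to corresponding groups, that the input-hidden weights are random, and that the output weights come from a least-squares fit.

```python
import numpy as np


def block_mask(n_inputs, n_hidden, n_groups):
    """Boolean (n_inputs, n_hidden) mask, True where an input node and a
    hidden node belong to the same group.

    Assumption: groups are contiguous and roughly equal in size.
    """
    in_group = np.arange(n_inputs) * n_groups // n_inputs
    hid_group = np.arange(n_hidden) * n_groups // n_hidden
    return in_group[:, None] == hid_group[None, :]


def fit_elm_lc(X, T, n_hidden, n_groups, rng):
    """Draw random, locally connected input-hidden weights and solve for
    the hidden-output weights by least squares."""
    n_inputs = X.shape[1]
    mask = block_mask(n_inputs, n_hidden, n_groups)
    # Random weights, zeroed wherever input and hidden groups differ.
    W = rng.uniform(-1.0, 1.0, size=(n_inputs, n_hidden)) * mask
    b = rng.uniform(-1.0, 1.0, size=n_hidden)  # assumed hidden biases
    H = np.tanh(X @ W + b)                     # assumed activation
    beta, *_ = np.linalg.lstsq(H, T, rcond=None)
    return W, b, beta


def predict(X, W, b, beta):
    """Apply the fixed hidden layer, then the learned output weights."""
    return np.tanh(X @ W + b) @ beta


# Toy usage on synthetic data: 6 inputs and 9 hidden nodes in 3 groups.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 6))
T = rng.normal(size=(50, 1))
W, b, beta = fit_elm_lc(X, T, n_hidden=9, n_groups=3, rng=rng)
Y = predict(X, W, b, beta)
```

With 6 inputs and 9 hidden nodes split into 3 groups, only 18 of the 54 input-hidden weights can be nonzero, which is the sparsity the local connections are meant to provide. Setting `n_groups=1` in this sketch recovers a fully connected ELM.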
