Exponential Lower Bounds for Planning in MDPs With Linearly-Realizable Optimal Action-Value Functions


Oct 03, 2020

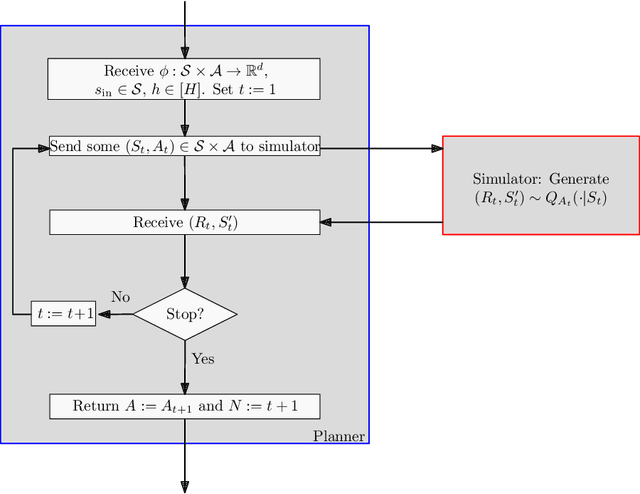

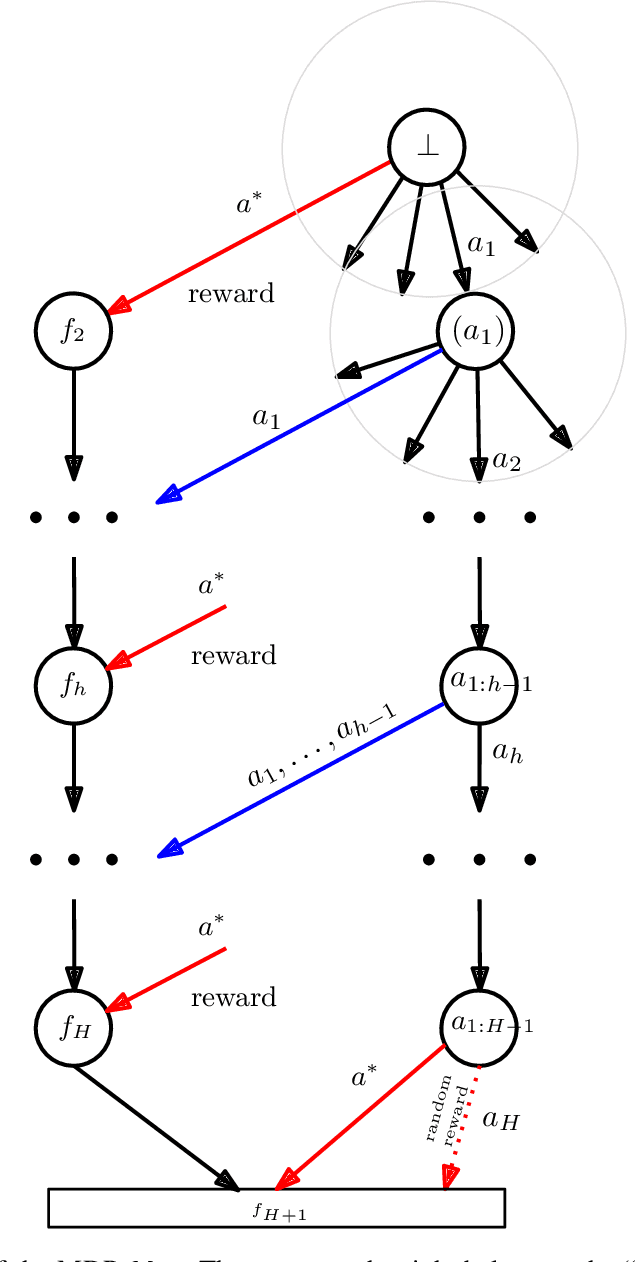

We consider the problem of local planning in fixed-horizon Markov Decision Processes (MDPs) with linear function approximation and a generative model, under the assumption that the optimal action-value function lies in the span of a feature map that is available to the planner. Previous work has left open the question of whether there exist sound planners that need only $\mathrm{poly}(H, d)$ queries regardless of the MDP, where $H$ is the horizon and $d$ is the dimensionality of the features. We answer this question in the negative: we show that any sound planner must query at least $\min(\exp(\Omega(d)), \Omega(2^H))$ samples. We also show that for any $\delta > 0$, the least-squares value iteration algorithm with $O(H^5 d^{H+1}/\delta^2)$ queries can compute a $\delta$-optimal policy. We discuss implications and remaining open questions.
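To get a feel for how the two bounds in the abstract scale, the following minimal sketch evaluates both expressions numerically. It is only an illustration: the constants hidden by $O(\cdot)$ and $\Omega(\cdot)$ are not given in the abstract, so they are all set to 1 here as an assumption, and the function names are hypothetical.

```python
import math


def lsvi_query_upper_bound(H: int, d: int, delta: float) -> float:
    """Evaluate H^5 * d^(H+1) / delta^2.

    Assumption: the constant hidden by O(.) is taken to be 1.
    """
    return H**5 * d ** (H + 1) / delta**2


def planner_query_lower_bound(H: int, d: int, c: float = 1.0) -> float:
    """Evaluate min(exp(c * d), 2^H).

    Assumption: the constants hidden by Omega(.) are taken to be 1
    (c scales the exponent of the first term and defaults to 1).
    """
    return min(math.exp(c * d), 2.0**H)


if __name__ == "__main__":
    delta = 0.1
    for H, d in [(5, 10), (10, 10), (20, 10)]:
        lower = planner_query_lower_bound(H, d)
        upper = lsvi_query_upper_bound(H, d, delta)
        print(f"H={H:2d} d={d:2d}  lower~{lower:.3e}  upper~{upper:.3e}")
```

Under these assumed constants, both quantities grow exponentially in $H$ for fixed $d$, which matches the abstract's message that polynomially many queries in $H$ and $d$ cannot suffice in general.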
