Exploring Generative Adversarial Networks for Text-to-Image Generation with Evolution Strategies


Jul 06, 2022

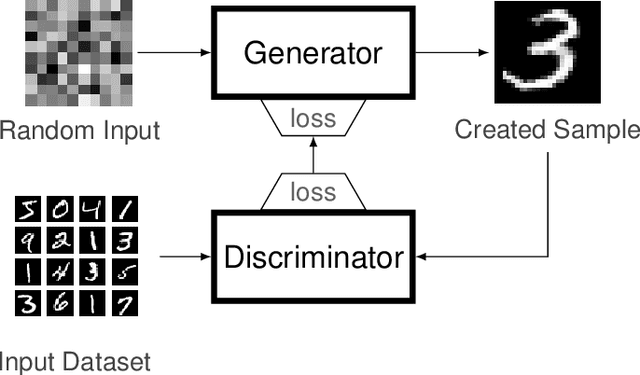

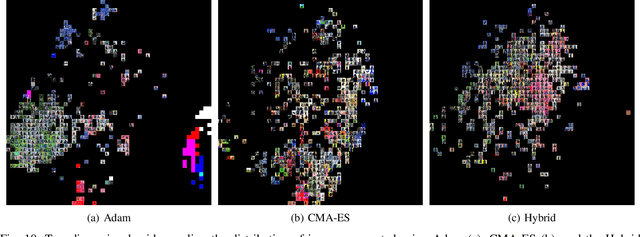

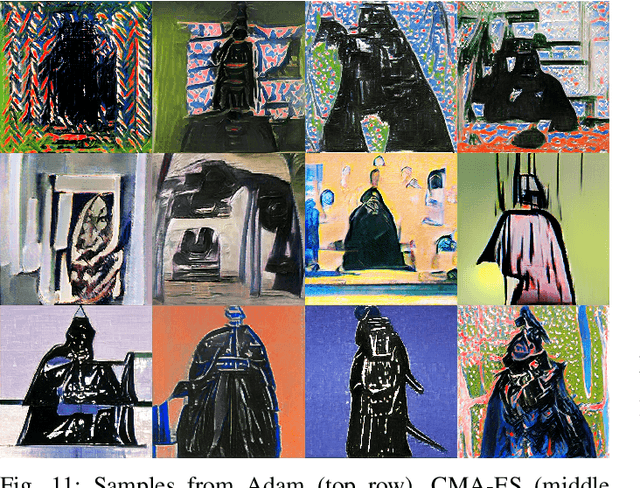

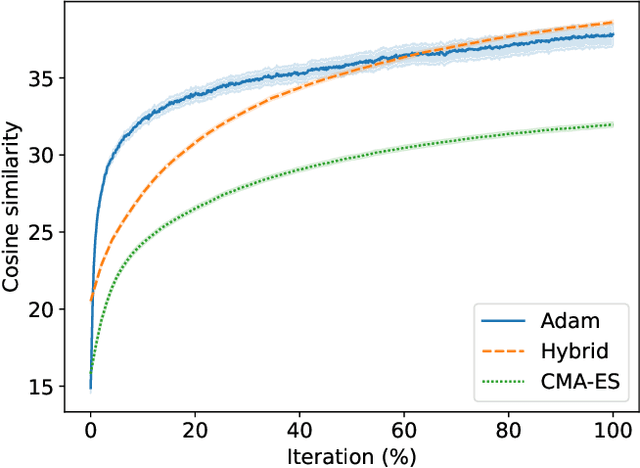

Among generative models, text-to-image generation has achieved impressive results in recent years. Models using different approaches have been proposed and trained on huge datasets of text-image pairs. However, some methods rely on pre-trained models such as Generative Adversarial Networks (GANs), searching the latent space of the generative model with a gradient-based approach that updates the latent vector according to a loss function such as cosine similarity. In this work, we follow a different direction by proposing the use of the Covariance Matrix Adaptation Evolution Strategy (CMA-ES) to explore the latent space of GANs. We compare this approach to one using Adam and to a hybrid strategy. We design an experimental study that compares the three approaches on different text inputs for image generation, adapting an evaluation method that projects the resulting samples onto a two-dimensional grid to inspect the diversity of the distributions. The results show that the evolutionary method achieves more diversity in the generated samples, exploring different regions of the resulting grids. In addition, we show that the hybrid method combines the areas explored by the gradient-based and evolutionary approaches, leveraging the quality of the results.
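To make the idea of a gradient-free latent search concrete, the following is a minimal sketch and not the paper's implementation. It uses a simplified evolution strategy (elite averaging with a fixed step-size decay) in place of full CMA-ES, which would also adapt a covariance matrix. The names `toy_generator`, `es_latent_search`, and the target vector are hypothetical: `toy_generator` stands in for a pre-trained GAN generator followed by an image encoder, and the target stands in for the embedding of the text prompt.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def toy_generator(z):
    """Hypothetical stand-in for GAN generator + image encoder (assumption)."""
    return [math.tanh(v) for v in z]


def es_latent_search(target, dim, generations=60, popsize=16, sigma=0.5, seed=0):
    """Simplified evolution strategy over a latent vector.

    Not full CMA-ES: only the search mean is adapted from the elite samples,
    and the step size sigma follows a fixed decay schedule.
    """
    rng = random.Random(seed)
    mean = [0.0] * dim
    n_elite = max(1, popsize // 4)

    def fitness(z):
        return cosine_similarity(toy_generator(z), target)

    best_z, best_score = list(mean), fitness(mean)
    for _ in range(generations):
        # Sample candidate latent vectors around the current mean.
        population = [
            [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
            for _ in range(popsize)
        ]
        # Rank candidates by similarity to the target, best first.
        population.sort(key=fitness, reverse=True)
        top_score = fitness(population[0])
        if top_score > best_score:
            best_z, best_score = list(population[0]), top_score
        # Move the mean to the average of the elite candidates.
        elite = population[:n_elite]
        mean = [sum(z[i] for z in elite) / n_elite for i in range(dim)]
        sigma *= 0.97  # fixed decay; CMA-ES adapts this from the search path
    return best_z, best_score


if __name__ == "__main__":
    target_embedding = [0.5, -0.3, 0.8, -0.6]  # hypothetical text embedding
    z, score = es_latent_search(target_embedding, dim=4)
    print("best latent vector:", z)
    print("cosine similarity:", score)
```

Because each generation only ranks candidates by their score, this kind of search needs no gradients from the generator. A gradient-based variant would instead backpropagate the similarity loss to the latent vector, for example with Adam.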
