Exploiting Prior Knowledge in Preferential Learning of Individualized Autonomous Vehicle Driving Styles

Paper and Code

Mar 19, 2025

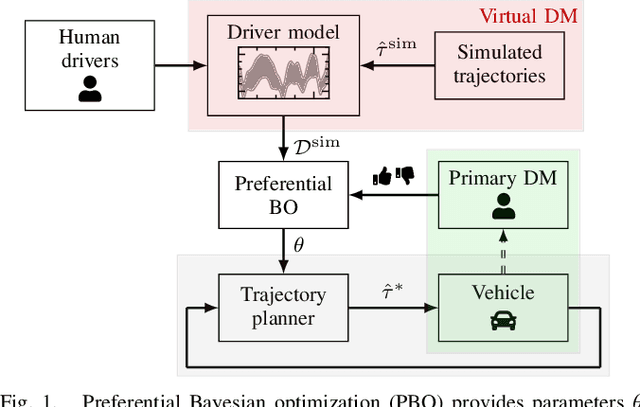

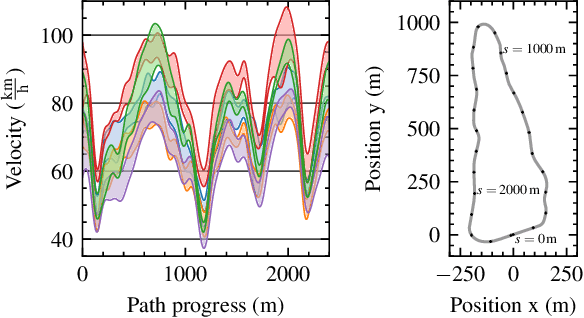

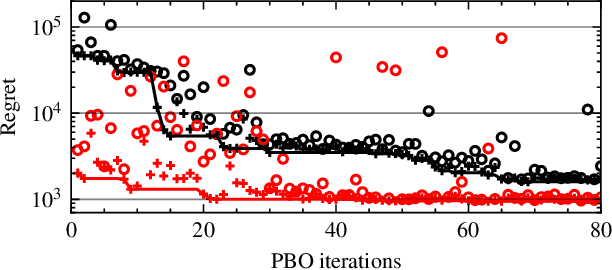

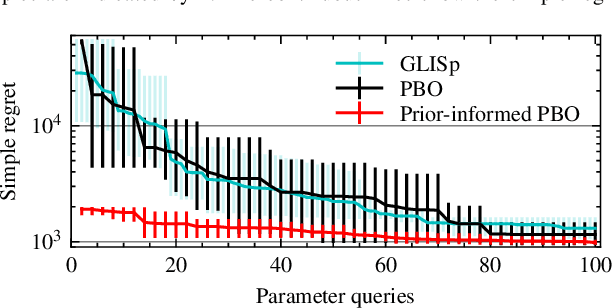

Trajectory planning for automated vehicles commonly employs optimization over a moving horizon - Model Predictive Control - where the cost function critically influences the resulting driving style. However, finding a suitable cost function that results in a driving style preferred by passengers remains an ongoing challenge. We employ preferential Bayesian optimization to learn the cost function by iteratively querying a passenger's preference. Due to increasing dimensionality of the parameter space, preference learning approaches might struggle to find a suitable optimum with a limited number of experiments and expose the passenger to discomfort when exploring the parameter space. We address these challenges by incorporating prior knowledge into the preferential Bayesian optimization framework. Our method constructs a virtual decision maker from real-world human driving data to guide parameter sampling. In a simulation experiment, we achieve faster convergence of the prior-knowledge-informed learning procedure compared to existing preferential Bayesian optimization approaches and reduce the number of inadequate driving styles sampled.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge