Exploiting Global Semantic Similarities in Knowledge Graphs by Relational Prototype Entities

Paper and Code

Jun 16, 2022

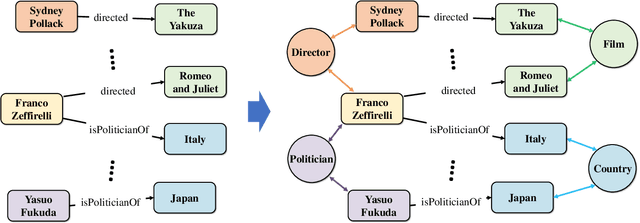

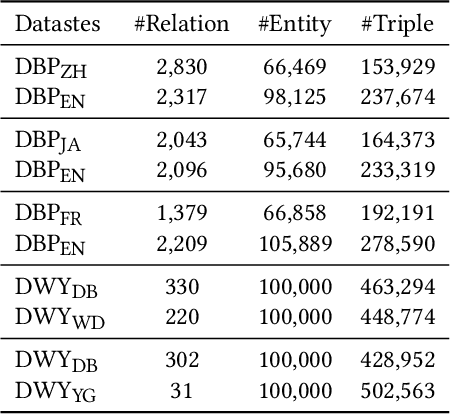

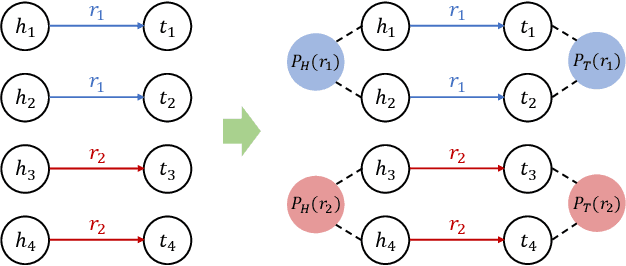

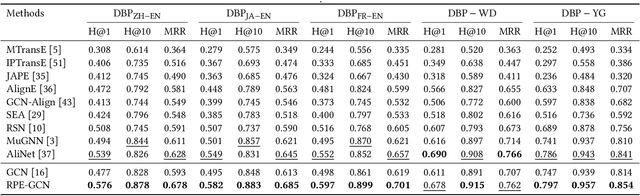

Knowledge graph (KG) embedding aims to learn latent representations of the entities and relations of a KG in continuous vector spaces. An empirical observation is that the head (tail) entities connected by the same relation often share similar semantic attributes (specifically, they often belong to the same category) no matter how far apart they are in the KG; that is, they share global semantic similarities. However, many existing methods derive KG embeddings from local information and therefore fail to capture such global semantic similarities among entities effectively. To address this challenge, we propose a novel approach that introduces a set of virtual nodes called ***relational prototype entities*** to represent the prototypes of the head and tail entities connected by the same relations. By enforcing the entities' embeddings to be close to their associated prototypes' embeddings, our approach effectively encourages global semantic similarity among entities connected by the same relation, even when they are far apart in the KG. Experiments on the entity alignment and KG completion tasks demonstrate that our approach significantly outperforms recent state-of-the-art methods.
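The idea of pulling entities toward a per-relation prototype can be illustrated with a small sketch. This is not the paper's implementation: the abstract does not specify the loss, so the code assumes a squared Euclidean distance, and it stands in for the learned virtual-node embeddings with a simple centroid of the entities that appear as heads (or tails) of each relation. The function names `centroid`, `sq_dist`, and `prototype_penalty`, and the toy triples, are hypothetical.

```python
from collections import defaultdict


def centroid(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def sq_dist(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def prototype_penalty(triples, emb):
    """Sum of squared distances between each entity and the prototype of
    every (relation, role) group it belongs to.

    Assumption: the prototype is the centroid of the group, used here as a
    stand-in for a learned virtual-node embedding.
    """
    heads = defaultdict(set)  # relation -> entities seen as head
    tails = defaultdict(set)  # relation -> entities seen as tail
    for h, r, t in triples:
        heads[r].add(h)
        tails[r].add(t)

    penalty = 0.0
    for groups in (heads, tails):
        for ents in groups.values():
            proto = centroid([emb[e] for e in sorted(ents)])
            penalty += sum(sq_dist(emb[e], proto) for e in ents)
    return penalty


# Toy data: two capitals that are not connected to each other in the graph
# but share the relation "capital_of".
triples = [
    ("paris", "capital_of", "france"),
    ("tokyo", "capital_of", "japan"),
]
close = {"paris": [1.0, 0.0], "tokyo": [1.0, 0.2],
         "france": [0.0, 1.0], "japan": [0.0, 1.0]}
far = dict(close, tokyo=[3.0, 0.0])

penalty_close = prototype_penalty(triples, close)
penalty_far = prototype_penalty(triples, far)
```

In this toy setting the penalty is smaller when the two head entities sit near each other, regardless of their graph distance. Adding such a term to a base embedding objective would be one way to encourage the global similarity the abstract describes.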
