Explain EEG-based End-to-end Deep Learning Models in the Frequency Domain


Jul 25, 2024

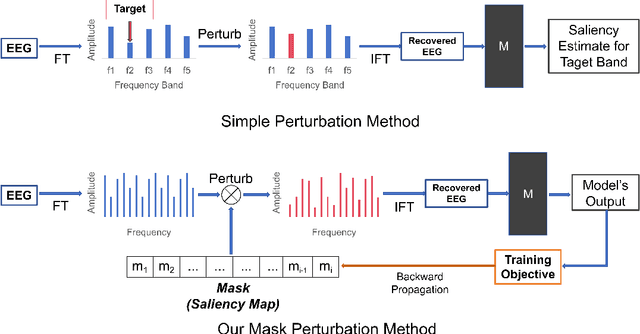

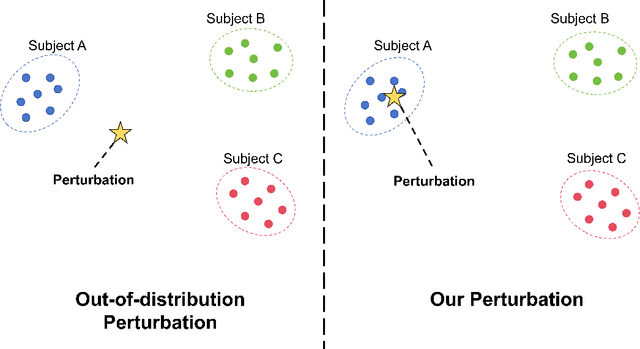

EEG-based end-to-end deep learning models have risen quickly, yet it remains difficult to explain how they process raw EEG signals and generate predictions in terms of the frequency domain. This gap limits the transparency and credibility of such models and hinders their application in security-sensitive areas. To address it, we propose a mask perturbation method that explains the behavior of end-to-end models in the frequency domain. Considering the characteristics of EEG data, we introduce a target alignment loss to mitigate the out-of-distribution problem that perturbation operations cause. We also develop a perturbation generator that specifies how perturbations are produced in the frequency domain. We validate the explanation method on multiple representative end-to-end deep learning models from the EEG decoding field, using an established EEG benchmark dataset. The results demonstrate the effectiveness and superiority of our method and highlight its potential to advance research on EEG-based end-to-end models.
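To make the idea of a frequency-domain mask perturbation concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the function names `apply_frequency_mask` and `band_mask`, the zero-spectrum reference, and the hand-set band mask are all assumptions for illustration. The sketch shows only the generic blending step (keep the original spectrum where the mask is 1, substitute a reference spectrum where it is 0). The abstract's learned perturbation generator and target alignment loss are not modeled.

```python
import numpy as np


def apply_frequency_mask(eeg, mask, reference=None):
    """Blend an EEG trial with a reference signal in the frequency domain.

    eeg:       real array of shape (n_channels, n_samples)
    mask:      values in [0, 1], broadcastable to (n_channels, n_samples // 2 + 1);
               1 keeps the original frequency bin, 0 replaces it
    reference: optional array shaped like `eeg`; defaults to an all-zero signal
               (an assumption made here, not taken from the paper)
    """
    eeg = np.asarray(eeg, dtype=float)
    n_samples = eeg.shape[-1]
    spectrum = np.fft.rfft(eeg, axis=-1)
    if reference is None:
        ref_spectrum = np.zeros_like(spectrum)
    else:
        ref_spectrum = np.fft.rfft(np.asarray(reference, dtype=float), axis=-1)
    mask = np.clip(np.asarray(mask, dtype=float), 0.0, 1.0)
    # Convex combination of original and reference spectra, bin by bin.
    mixed = mask * spectrum + (1.0 - mask) * ref_spectrum
    return np.fft.irfft(mixed, n=n_samples, axis=-1)


def band_mask(n_samples, fs, low_hz, high_hz):
    """Hypothetical helper: a mask that zeroes every bin in [low_hz, high_hz]."""
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    mask = np.ones_like(freqs)
    mask[(freqs >= low_hz) & (freqs <= high_hz)] = 0.0
    return mask


# Synthetic two-channel "EEG": a 10 Hz and a 30 Hz component per channel.
fs, n_samples = 128, 256
t = np.arange(n_samples) / fs
alpha = np.sin(2 * np.pi * 10 * t)
beta = np.sin(2 * np.pi * 30 * t)
eeg = np.stack([alpha + beta, 0.5 * alpha + beta])

# Remove the 8-13 Hz band and leave everything else untouched.
mask = band_mask(n_samples, fs, 8.0, 13.0)
perturbed = apply_frequency_mask(eeg, mask)
```

In a mask perturbation explanation, the mask would typically be optimized against the model's output rather than fixed by hand. Comparing a model's prediction on `eeg` and on `perturbed` gives a rough indication of how much that prediction depends on the removed band.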
