ExpertNet: A Symbiosis of Classification and Clustering

Paper and Code

Jan 17, 2022

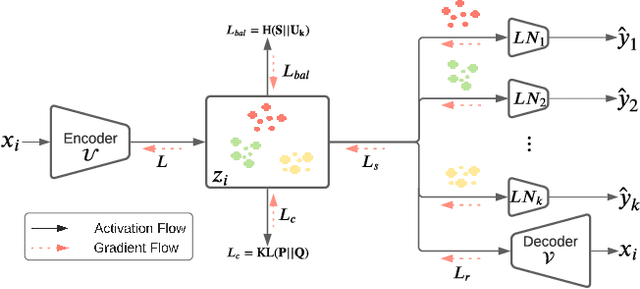

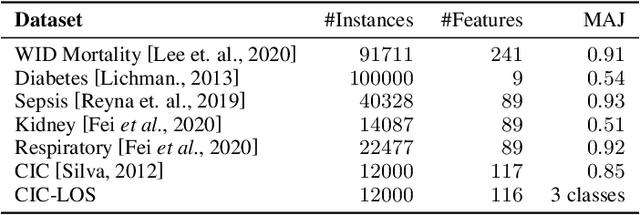

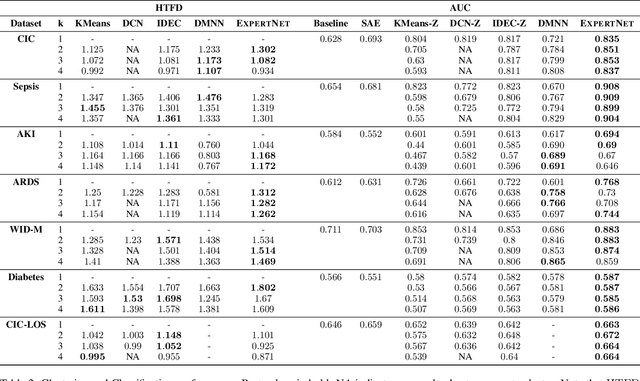

A widely used way to improve the generalization performance of high-capacity neural models is to add auxiliary unsupervised tasks during supervised training. Tasks such as similarity matching and input reconstruction have been shown to provide a beneficial regularizing effect by guiding representation learning. Real data often have complex underlying structure and may comprise heterogeneous subpopulations that current approaches do not learn well. In this work, we design ExpertNet, which uses novel training strategies to learn clustered latent representations and leverages them by effectively combining cluster-specific classifiers. We theoretically analyze the effect of clustering on its generalization gap, and empirically show that clustered latent representations from ExpertNet disentangle the intrinsic structure of the data and improve classification performance. ExpertNet also meets an important real-world need: classifiers that must be tailored to distinct subpopulations, as in clinical risk models. We demonstrate the superiority of ExpertNet over state-of-the-art methods on six large clinical datasets, where our approach leads to valuable insights on group-specific risks.
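To make the idea of "combining cluster-specific classifiers" concrete, here is a minimal sketch of one way such a combination could work. It is not the ExpertNet implementation: the abstract does not specify the assignment rule or the classifier form, so the softmax over negative squared distances, the logistic experts, and all names (`soft_assignments`, `combined_probability`, `centroids`, `experts`) are assumptions chosen for illustration.

```python
import math

def soft_assignments(z, centroids, temperature=1.0):
    """Softmax over negative squared distances from latent point z to each centroid.

    Assumption: the paper's actual cluster-assignment rule may differ.
    """
    scores = [
        -sum((zi - ci) ** 2 for zi, ci in zip(z, c)) / temperature
        for c in centroids
    ]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def expert_probability(z, weights, bias):
    """Hypothetical cluster-specific classifier: a logistic model on the latent vector."""
    return sigmoid(sum(w * zi for w, zi in zip(weights, z)) + bias)

def combined_probability(z, centroids, experts):
    """Weight each expert's prediction by the soft membership of z in its cluster."""
    q = soft_assignments(z, centroids)
    return sum(qk * expert_probability(z, w, b) for qk, (w, b) in zip(q, experts))

# Toy latent space with two clusters and one (weights, bias) expert per cluster.
centroids = [[0.0, 0.0], [4.0, 4.0]]
experts = [([1.0, -1.0], 0.0), ([-0.5, 0.5], 1.0)]

z = [0.5, 0.2]  # a latent point close to the first centroid
q = soft_assignments(z, centroids)
p = combined_probability(z, centroids, experts)
print("memberships:", q)
print("combined positive-class probability:", p)
```

In this sketch a point near one centroid is scored almost entirely by that cluster's expert, while a point between clusters receives a blend of the experts' predictions.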
