Experience Reuse with Probabilistic Movement Primitives


Aug 11, 2019

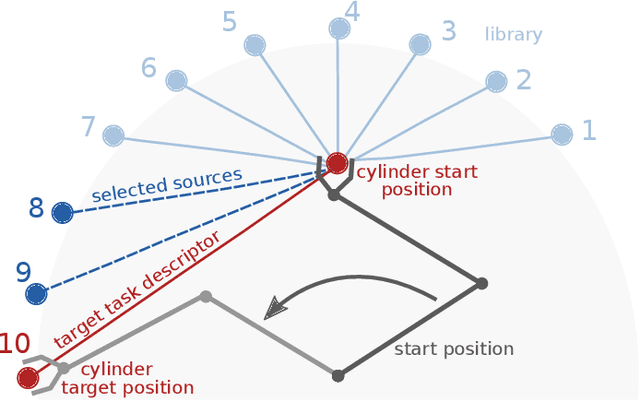

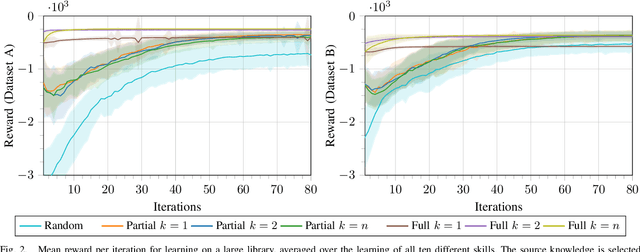

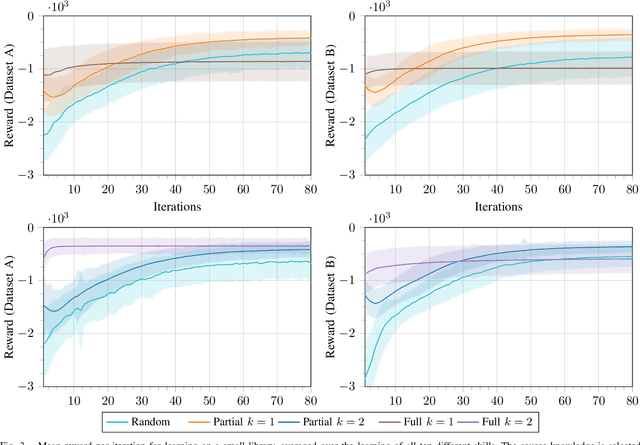

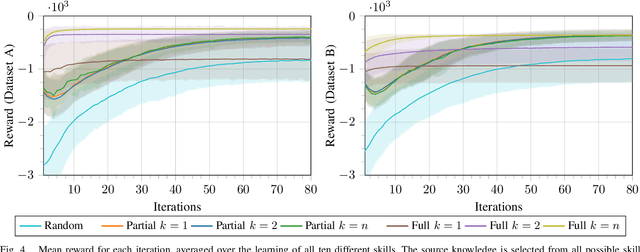

Acquiring new robot motor skills is cumbersome, because learning a skill from scratch, without prior knowledge, requires exploring a large space of motor configurations. When learning a new task, time can therefore be saved by restricting the parameter search space, initializing it with the solution of a similar task. We present a framework capable of such knowledge transfer from already learned movement skills to a new learning task. The framework represents skills by combining probabilistic movement primitives with descriptions of their effects. New skills are first initialized with parameters inferred from related movement primitives and then adapted to the new task through relative entropy policy search. We compare two transfer approaches that initialize the search space distribution with data from known skills with a similar effect, and we show their different benefits on an object pushing task for a simulated 3-DOF robot. When past experiences are utilized, the quality of the learned skills improves and the number of iterations required to learn a new task can be reduced by more than 60%.
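To make the initialize-then-adapt idea concrete, the following is a minimal sketch and not the authors' implementation. It assumes a one-dimensional movement primitive with normalized Gaussian basis functions, a Gaussian search distribution fitted to the weight vectors of a few related skills, and an episodic relative-entropy-style update whose temperature `eta` is found by a simple grid search over the dual. The reward function, the target weights, the related-skill weights, and all function names are hypothetical stand-ins. The effect-based selection of related skills and the two transfer approaches compared in the paper are not reproduced here.

```python
import numpy as np

def rbf_features(t, n_basis=5, width=0.05):
    """Normalized Gaussian basis functions over the phase t in [0, 1]."""
    centers = np.linspace(0.0, 1.0, n_basis)
    phi = np.exp(-0.5 * (t[:, None] - centers[None, :]) ** 2 / width)
    return phi / phi.sum(axis=1, keepdims=True)

def init_from_related(related_weights, reg=1e-3):
    """Fit a Gaussian search distribution to weights of related skills."""
    W = np.asarray(related_weights)
    mu = W.mean(axis=0)
    sigma = np.cov(W, rowvar=False) + reg * np.eye(W.shape[1])
    return mu, sigma

def reps_weights(returns, epsilon=0.5):
    """Sample weights from the episodic dual; eta chosen by grid search."""
    r = returns - returns.max()  # shift for numerical stability
    etas = np.logspace(-3, 3, 400)
    dual = [eta * epsilon + eta * np.log(np.mean(np.exp(r / eta))) for eta in etas]
    eta = etas[int(np.argmin(dual))]
    w = np.exp(r / eta)
    return w / w.sum()

def reps_update(mu, sigma, reward_fn, rng, n_samples=50, epsilon=0.5, reg=1e-6):
    """One iteration: sample parameters, weight them, refit the Gaussian."""
    thetas = rng.multivariate_normal(mu, sigma, size=n_samples)
    returns = np.array([reward_fn(th) for th in thetas])
    w = reps_weights(returns, epsilon)
    new_mu = w @ thetas
    diff = thetas - new_mu
    new_sigma = (w[:, None] * diff).T @ diff + reg * np.eye(len(mu))
    return new_mu, new_sigma

rng = np.random.default_rng(0)
n_basis = 5
# Hypothetical optimum of the new task (unknown to the learner in practice).
target = np.array([0.0, 0.4, 0.9, 0.5, 0.1])
# Hypothetical weights of four previously learned, related skills.
related = target + 0.3 * rng.standard_normal((4, n_basis))

def reward_fn(theta):
    # Toy reward: negative squared distance to the hypothetical optimum.
    return -np.sum((theta - target) ** 2)

mu, sigma = init_from_related(related, reg=0.05)
initial_error = np.linalg.norm(mu - target)
for _ in range(20):
    mu, sigma = reps_update(mu, sigma, reward_fn, rng)
final_error = np.linalg.norm(mu - target)

# Mean trajectory of the adapted primitive over 100 phase steps.
trajectory = rbf_features(np.linspace(0.0, 1.0, 100), n_basis) @ mu
```

In this toy setting the search starts near the solutions of the related skills rather than from an uninformed distribution, which is the mechanism the abstract credits for the reduced number of iterations. The grid search over `eta` is a simplification chosen to keep the sketch dependency-free; a numerical optimizer would normally be used for the dual.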
