Examining and Adapting Time for Multilingual Classification via Mixture of Temporal Experts


Feb 12, 2025

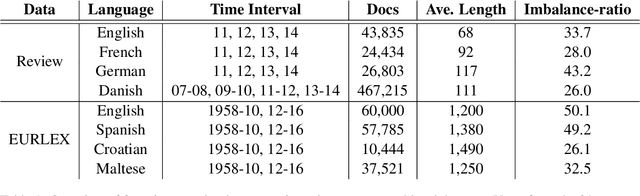

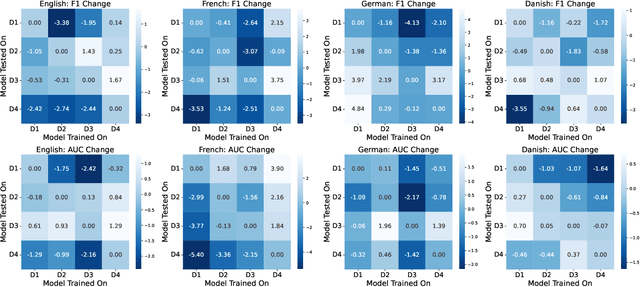

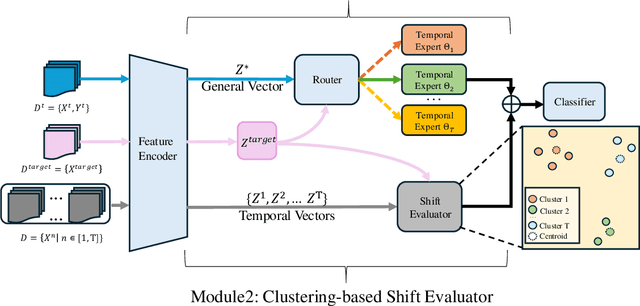

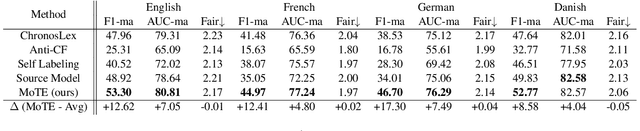

Time is implicitly embedded in the classification process: classifiers are usually built on existing data but applied to future data whose distributions (e.g., label and token) may change. However, existing state-of-the-art classification models rarely consider temporal variations and primarily focus on English corpora, which leaves temporal studies underexplored, especially in multilingual settings. In this study, we fill the gap by treating time periods as domains (e.g., 2024 vs. 2025), examining temporal effects, and developing a domain adaptation framework to generalize classifiers over time across multiple languages. Our framework proposes Mixture of Temporal Experts (MoTE), which leverages both semantic and data distributional shifts to learn temporal trends and adapt classification models to them. Our analysis shows that classification performance varies over time across different languages, and we experimentally demonstrate that MoTE can enhance classifier generalizability under temporal data shifts. Our study provides analytic insights and addresses the need for time-aware models that perform robustly in multilingual scenarios.
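To make the idea of treating time as domains more concrete, the following minimal sketch groups labeled examples by year and measures how far the label distribution moves between two years. It is an illustration only and not the MoTE method: the function names `label_distributions` and `total_variation`, the toy records, and the choice of total variation distance as the shift measure are all assumptions introduced here.

```python
from collections import Counter, defaultdict


def label_distributions(records):
    """Group (year, label) pairs by year and return {year: {label: probability}}."""
    counts = defaultdict(Counter)
    for year, label in records:
        counts[year][label] += 1
    dists = {}
    for year, counter in counts.items():
        total = sum(counter.values())
        dists[year] = {label: n / total for label, n in counter.items()}
    return dists


def total_variation(p, q):
    """Total variation distance between two discrete label distributions."""
    labels = set(p) | set(q)
    return 0.5 * sum(abs(p.get(l, 0.0) - q.get(l, 0.0)) for l in labels)


# Hypothetical toy data: each record is (time domain, class label).
records = [
    (2024, "pos"), (2024, "pos"), (2024, "neg"), (2024, "neg"),
    (2025, "pos"), (2025, "neg"), (2025, "neg"), (2025, "neg"),
]

dists = label_distributions(records)
shift = total_variation(dists[2024], dists[2025])
print(f"Label shift between 2024 and 2025: {shift:.2f}")
```

A larger distance suggests that a classifier trained on the earlier year sees a noticeably different label mix in the later year. The same grouping could be applied to token frequencies to examine token-level shift.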
