Evaluation of 3D GANs for Lung Tissue Modelling in Pulmonary CT

Paper and Code

Aug 17, 2022

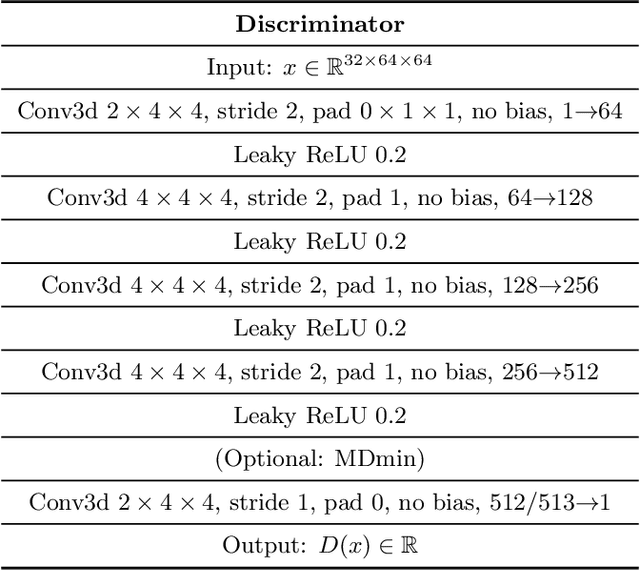

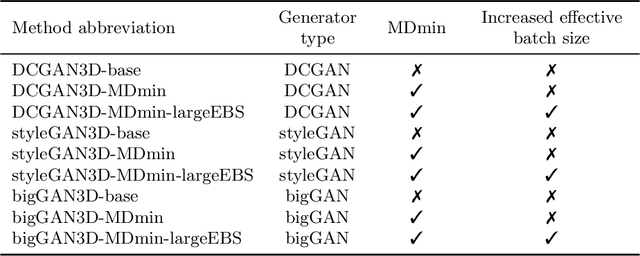

GANs are able to model accurately the distribution of complex, high-dimensional datasets, e.g. images. This makes high-quality GANs useful for unsupervised anomaly detection in medical imaging. However, differences in training datasets such as output image dimensionality and appearance of semantically meaningful features mean that GAN models from the natural image domain may not work `out-of-the-box' for medical imaging, necessitating re-implementation and re-evaluation. In this work we adapt and evaluate three GAN models to the task of modelling 3D healthy image patches for pulmonary CT. To the best of our knowledge, this is the first time that such an evaluation has been performed. The DCGAN, styleGAN and the bigGAN architectures were investigated due to their ubiquity and high performance in natural image processing. We train different variants of these methods and assess their performance using the FID score. In addition, the quality of the generated images was evaluated by a human observer study, the ability of the networks to model 3D domain-specific features was investigated, and the structure of the GAN latent spaces was analysed. Results show that the 3D styleGAN produces realistic-looking images with meaningful 3D structure, but suffer from mode collapse which must be addressed during training to obtain samples diversity. Conversely, the 3D DCGAN models show a greater capacity for image variability, but at the cost of poor-quality images. The 3D bigGAN models provide an intermediate level of image quality, but most accurately model the distribution of selected semantically meaningful features. The results suggest that future development is required to realise a 3D GAN with sufficient capacity for patch-based lung CT anomaly detection and we offer recommendations for future areas of research, such as experimenting with other architectures and incorporation of position-encoding.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge