Evaluating the Utility of Model Explanations for Model Development

Paper and Code

Dec 10, 2023

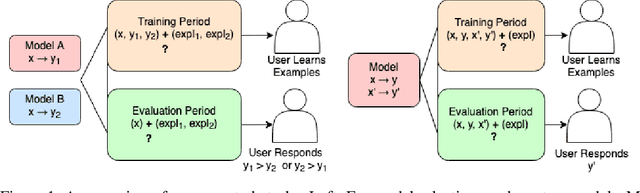

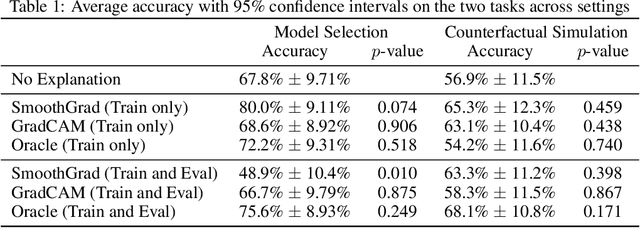

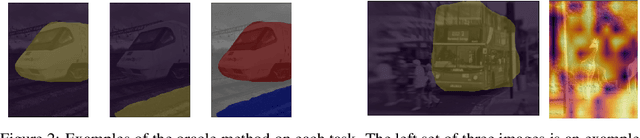

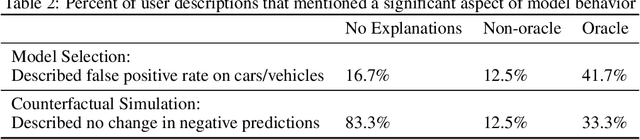

One of the motivations for explainable AI is to allow humans to make better and more informed decisions regarding the use and deployment of AI models. But careful evaluations are needed to assess whether this expectation has been fulfilled. Current evaluations mainly focus on algorithmic properties of explanations, and those that involve human subjects often employ subjective questions to test humans' perception of explanation usefulness, without being grounded in objective metrics and measurements. In this work, we evaluate whether explanations can improve human decision-making in practical scenarios of machine learning model development. We conduct a mixed-methods user study involving image data to evaluate saliency maps generated by SmoothGrad, GradCAM, and an oracle explanation on two tasks: model selection and counterfactual simulation. To our surprise, we did not find evidence of significant improvement on these tasks when users were provided with any of the saliency maps, even the synthetic oracle explanation designed to be simple to understand and highly indicative of the answer. Nonetheless, explanations did help users more accurately describe the models. These findings suggest caution regarding the usefulness of, and the potential for misunderstanding in, saliency-based explanations.
