Evaluating Progress on Machine Learning for Longitudinal Electronic Healthcare Data

Oct 02, 2020

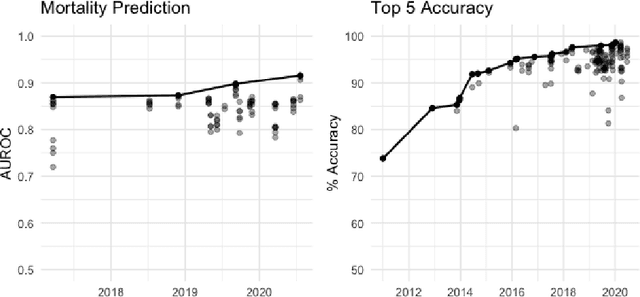

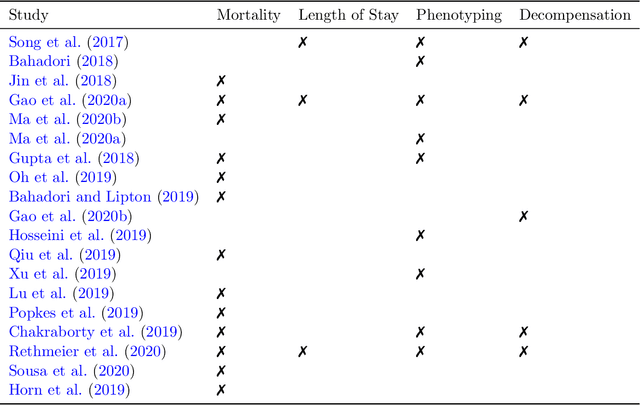

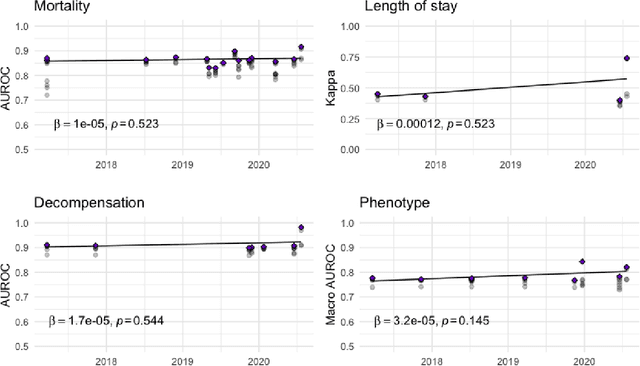

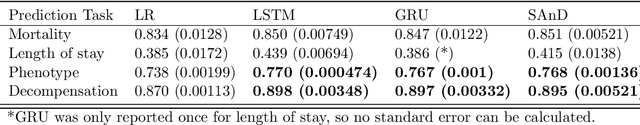

The Large Scale Visual Recognition Challenge, based on the well-known ImageNet dataset, catalyzed an intense flurry of progress in computer vision. Benchmark tasks have propelled other sub-fields of machine learning forward at an equally impressive pace, but in healthcare it has primarily been image processing tasks, such as those in dermatology and radiology, that have experienced similar benchmark-driven progress. In the present study, we performed a comprehensive review of benchmarks in medical machine learning for structured data, identifying one based on the Medical Information Mart for Intensive Care (MIMIC-III) that allows the first direct comparison of predictive performance and thus the evaluation of progress on four clinical prediction tasks: mortality, length of stay, phenotyping, and patient decompensation. We find that little meaningful progress has been made on these tasks over a three-year period, despite significant community engagement. Through our meta-analysis, we find that deep recurrent models outperform logistic regression only on certain tasks. We conclude with a synthesis of these results, possible explanations, and a list of desirable qualities for future benchmarks in medical machine learning.
