Estimator Selection: End-Performance Metric Aspects


Jul 26, 2015

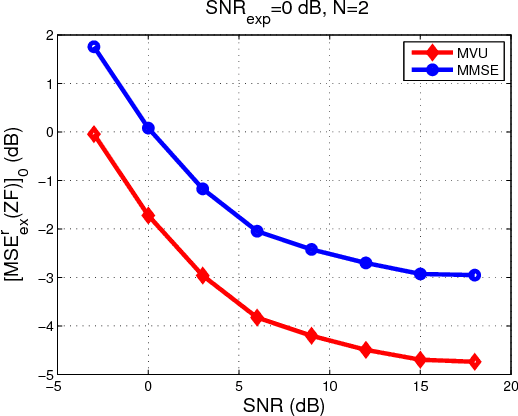

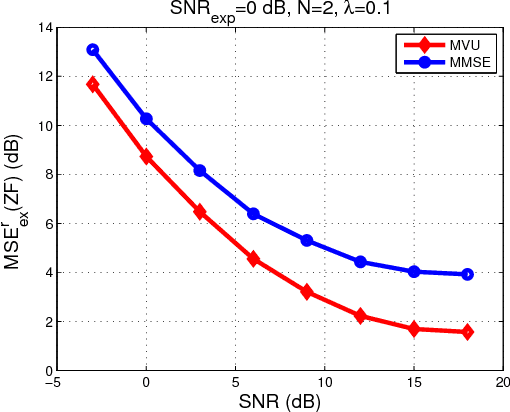

Recently, a framework for application-oriented optimal experiment design has been introduced. In this context, the distance of the estimated system from the true one is measured in terms of a particular end-performance metric. This treatment yields better estimates of the unknown system than classical experiment designs based on the usual pointwise functional distances between the estimated system and the true one. Within this new framework, the system estimator is separated from the experiment design by fixing the estimation method to either a maximum likelihood (ML) approach or a Bayesian estimator such as the minimum mean square error (MMSE) estimator. Since the MMSE estimator delivers a system estimate with lower mean square error (MSE) than the ML estimator for finite-length experiments, it is usually considered the best choice in practice in signal processing and control applications. Within the application-oriented framework, a related and meaningful question is: are there end-performance metrics for which the ML estimator outperforms the MMSE estimator when the experiment is finite-length? In this paper, we answer this question affirmatively, based on a simple linear Gaussian regression example.
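
The claim that the MMSE estimator attains a lower MSE than the ML estimator for finite-length experiments can be checked numerically. The sketch below is only an illustration under assumptions that are not taken from the paper: a scalar model y = x·θ + e with a zero-mean Gaussian prior on θ, white Gaussian noise, a constant regressor x, and MSE averaged over the prior. The function name `simulate_mse` and all parameter values are hypothetical. It does not reproduce the end-performance metric studied in the paper.

```python
import random


def simulate_mse(n_samples=5, n_trials=20000, sigma_theta=1.0, sigma_e=1.0, seed=0):
    """Monte Carlo MSE of the ML and MMSE estimators for y = x * theta + e.

    Assumed setup (illustrative, not from the paper):
      theta ~ N(0, sigma_theta^2), e_i ~ N(0, sigma_e^2), x known and constant.
    """
    rng = random.Random(seed)
    x = [1.0] * n_samples                    # hypothetical known regressor
    sxx = sum(xi * xi for xi in x)           # x^T x
    reg = sigma_e ** 2 / sigma_theta ** 2    # prior-induced regularization term

    se_ml = 0.0
    se_mmse = 0.0
    for _ in range(n_trials):
        theta = rng.gauss(0.0, sigma_theta)  # draw the true parameter from the prior
        # x^T y, with y_i = x_i * theta + e_i
        xty = sum(xi * (xi * theta + rng.gauss(0.0, sigma_e)) for xi in x)

        theta_ml = xty / sxx                 # ML (least-squares) estimate
        theta_mmse = xty / (sxx + reg)       # MMSE (posterior mean) estimate

        se_ml += (theta_ml - theta) ** 2
        se_mmse += (theta_mmse - theta) ** 2

    return se_ml / n_trials, se_mmse / n_trials


if __name__ == "__main__":
    mse_ml, mse_mmse = simulate_mse()
    print(f"ML   MSE: {mse_ml:.4f}")
    print(f"MMSE MSE: {mse_mmse:.4f}")
```

Under these assumptions the theoretical values are σ_e²/(xᵀx) for ML and 1/(xᵀx/σ_e² + 1/σ_θ²) for MMSE, so the simulated MMSE error should come out smaller. The gap shrinks as `n_samples` grows, which is why the comparison is mainly of interest for finite-length experiments.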
