Estimation of Utility-Maximizing Bounds on Potential Outcomes


Oct 10, 2019

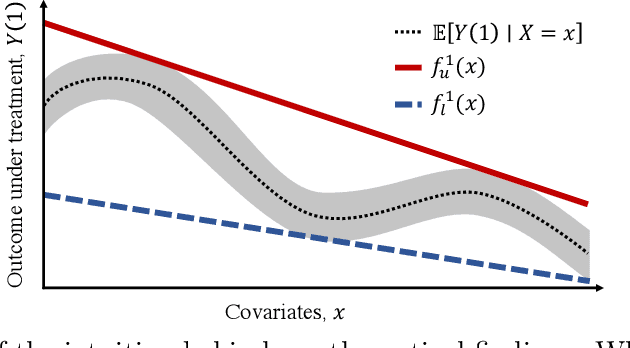

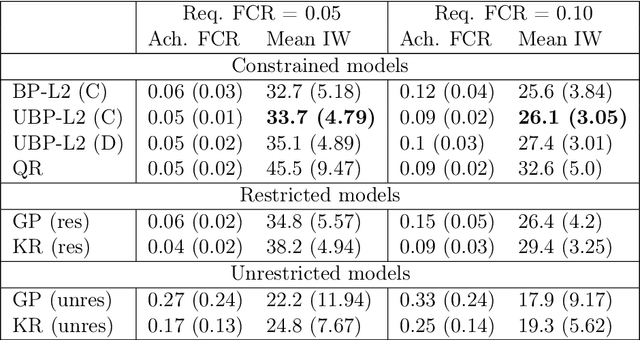

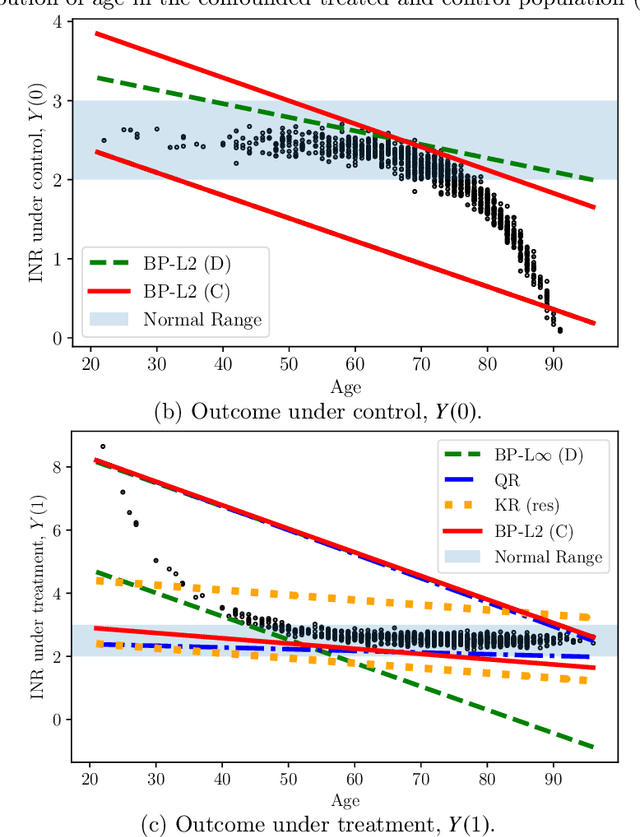

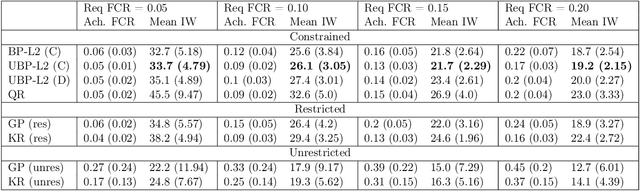

Estimation of individual treatment effects is often used as the basis for contextual decision making in fields such as healthcare, education, and economics. However, in many real-world applications it is sufficient for the decision maker to have upper and lower bounds on the potential outcomes of decision alternatives, allowing them to evaluate the trade-off between benefit and risk. With this in mind, we develop an algorithm for directly learning upper and lower bounds on the potential outcomes under treatment and non-treatment. Our theoretical analysis highlights a trade-off between the complexity of the learning task and the confidence with which the resulting bounds cover the true potential outcomes; the more confident we wish to be, the more complex the learning task is. We suggest a novel algorithm that maximizes a utility function while maintaining valid potential outcome bounds. We illustrate different properties of our algorithm, and highlight how it can be used to guide decision making using two semi-simulated datasets.
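To make the idea of bounding potential outcomes concrete, here is a minimal sketch in Python. It is not the algorithm from the paper: it swaps in a much simpler stand-in (a least-squares fit per treatment arm, widened by empirical residual quantiles) and assumes randomized treatment assignment and roughly constant noise. All function names (`fit_arm_bounds`, `predict_bounds`, `fit_potential_outcome_bounds`, `recommend_treatment`) and the synthetic data are hypothetical and chosen only for illustration.

```python
import numpy as np


def fit_arm_bounds(X, y, alpha=0.1):
    """Fit one arm: least-squares mean plus residual quantiles (a stand-in, not the paper's method)."""
    A = np.column_stack([np.ones(len(X)), X])  # add an intercept column
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    # Offsets chosen so that about (1 - alpha) of the training residuals fall between them.
    lo, hi = np.quantile(resid, [alpha / 2, 1 - alpha / 2])
    return coef, lo, hi


def predict_bounds(model, X):
    """Return (lower, upper) bounds on the outcome for each row of X."""
    coef, lo, hi = model
    mean = np.column_stack([np.ones(len(X)), X]) @ coef
    return mean + lo, mean + hi


def fit_potential_outcome_bounds(X, t, y, alpha=0.1):
    """Fit separate bounds for the untreated (t == 0) and treated (t == 1) arms."""
    return {arm: fit_arm_bounds(X[t == arm], y[t == arm], alpha) for arm in (0, 1)}


def recommend_treatment(models, X):
    """Conservative rule: treat only if the treated lower bound exceeds the untreated upper bound."""
    _, upper0 = predict_bounds(models[0], X)
    lower1, _ = predict_bounds(models[1], X)
    return lower1 > upper0


# Synthetic example (assumed data, randomized treatment).
rng = np.random.default_rng(0)
n = 2000
X = rng.normal(size=(n, 2))
t = rng.integers(0, 2, size=n)
y = X[:, 0] + t * (1.0 + X[:, 1]) + rng.normal(scale=0.5, size=n)

models = fit_potential_outcome_bounds(X, t, y, alpha=0.1)
treat = recommend_treatment(models, X)
print("fraction recommended for treatment:", treat.mean())
```

Lowering `alpha` widens the bounds, so fewer individuals satisfy the conservative treatment rule. This loosely mirrors the trade-off described above between confidence in the bounds and how useful they are for decisions, although the paper's own analysis and utility function are not reproduced here.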
