Estimation of stellar atmospheric parameters from LAMOST DR8 low-resolution spectra with 20$\leq$SNR$<$30


Apr 13, 2022

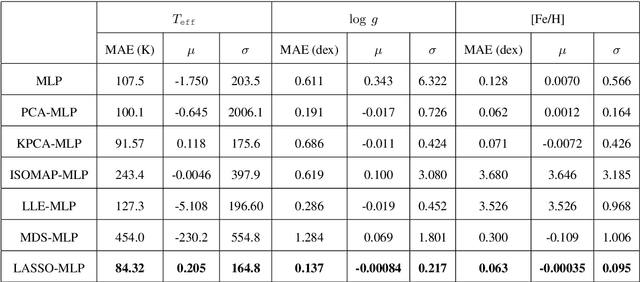

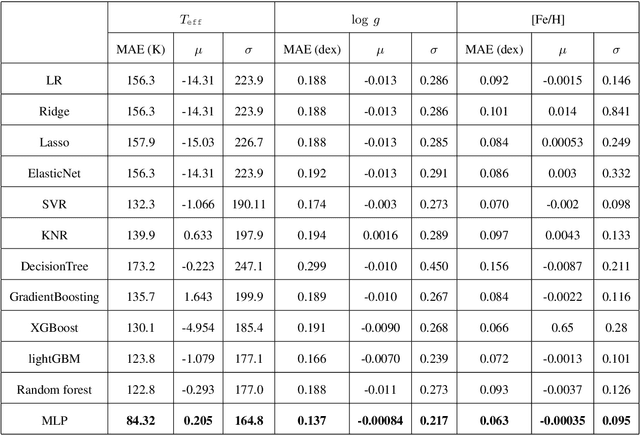

The accuracy of estimated stellar atmospheric parameters decreases markedly as the spectral signal-to-noise ratio (SNR) decreases, and a large number of observations have low SNR, especially SNR$<$30. It is therefore helpful to improve parameter estimation for these spectra, and this work studies the estimation of ($T_\texttt{eff}, \log~g$, [Fe/H]) for LAMOST DR8 low-resolution spectra with 20$\leq$SNR$<$30. We propose a data-driven method based on machine learning techniques. First, the scheme detects features sensitive to the stellar atmospheric parameters from the spectra using the Least Absolute Shrinkage and Selection Operator (LASSO), rejecting ineffective data components and irrelevant data. Second, a Multi-layer Perceptron (MLP) estimates the stellar atmospheric parameters from the LASSO features. Finally, the performance of LASSO-MLP is evaluated by computing and analyzing the consistency between its estimates and reference values derived from APOGEE (Apache Point Observatory Galactic Evolution Experiment) high-resolution spectra. Experiments show that the Mean Absolute Errors (MAE) of $T_\texttt{eff}, \log~g$, [Fe/H] are reduced from (137.6 K, 0.195 dex, 0.091 dex) for LASP to (84.32 K, 0.137 dex, 0.063 dex) for LASSO-MLP, which indicates an evident improvement in stellar atmospheric parameter estimation. In addition, this work estimated the stellar atmospheric parameters for 1,162,760 low-resolution spectra with 20$\leq$SNR$<$30 from LAMOST DR8 using LASSO-MLP, and released the estimation catalog, experimental code, trained model, training data and test data for scientific exploration and algorithm study.
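The two-stage idea described above (LASSO feature selection followed by MLP regression, scored by MAE against reference labels) can be illustrated with a minimal scikit-learn sketch. This is not the released code: the synthetic "spectra", the single hypothetical label, the `alpha` value and the network size are all assumptions chosen only to make the example self-contained.

```python
import numpy as np
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import Lasso
from sklearn.metrics import mean_absolute_error
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic stand-in for spectra (assumption): 600 spectra, 200 flux pixels.
n_spectra, n_pixels = 600, 200
flux = rng.normal(size=(n_spectra, n_pixels))

# Hypothetical label that depends on only five pixels, plus small noise.
informative = [10, 50, 90, 130, 170]
weights = np.array([1.5, -2.0, 1.0, 0.8, -1.2])
label = flux[:, informative] @ weights + 0.1 * rng.normal(size=n_spectra)

train, test = slice(0, 450), slice(450, None)

model = make_pipeline(
    StandardScaler(),
    # Stage 1: keep only pixels whose LASSO coefficient is non-zero.
    SelectFromModel(Lasso(alpha=0.1)),
    # Stage 2: regress the label from the selected pixels with an MLP.
    MLPRegressor(hidden_layer_sizes=(32,), max_iter=2000, random_state=0),
)
model.fit(flux[train], label[train])

# Boolean mask over the pixels that survived LASSO selection.
selected = model.named_steps["selectfrommodel"].get_support()

# Evaluate with MAE on held-out data, next to a predict-the-mean baseline.
pred = model.predict(flux[test])
mae = mean_absolute_error(label[test], pred)
baseline_mae = mean_absolute_error(
    label[test], np.full(label[test].shape, label[train].mean())
)
print(f"selected {selected.sum()} of {n_pixels} pixels")
print(f"MAE = {mae:.3f} (mean-only baseline = {baseline_mae:.3f})")
```

In this toy setting the selection step discards most of the uninformative pixels before the MLP sees the data, which is the role the abstract assigns to LASSO. A real application would fit one such model per parameter (or a multi-output variant) on LAMOST fluxes with APOGEE labels, with hyperparameters tuned on validation data.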
