Estimation of entropy-regularized optimal transport maps between non-compactly supported measures


Nov 20, 2023

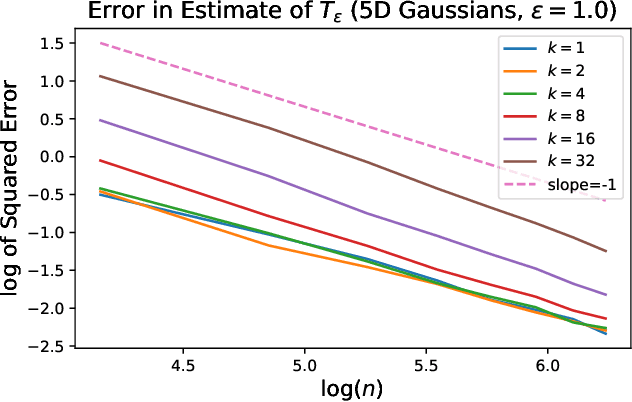

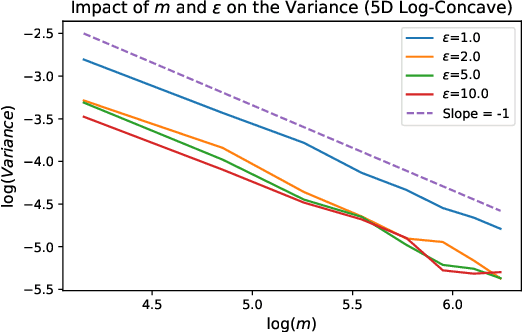

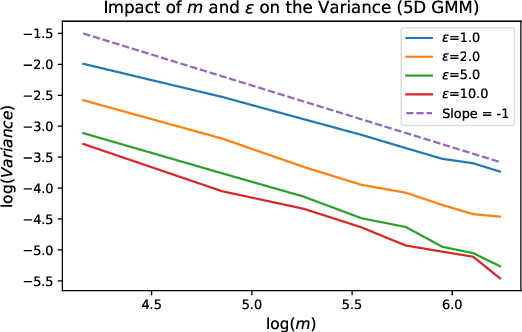

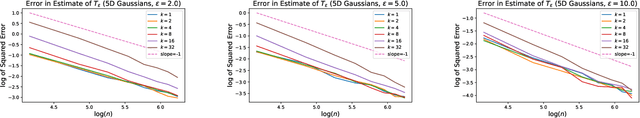

This paper addresses the problem of estimating entropy-regularized optimal transport (EOT) maps with squared-Euclidean cost between source and target measures that are subGaussian. When the target measure is compactly supported or strongly log-concave, we show that for a recently proposed in-sample estimator, the expected squared $L^2$-error decays at least as fast as $O(n^{-1/3})$, where $n$ is the sample size. For the general subGaussian case, we show that the expected $L^1$-error decays at least as fast as $O(n^{-1/6})$. In both cases the bounds depend polynomially on the regularization parameter. These rates are suboptimal compared to known results when both the source and target measures are compactly supported (squared $L^2$-error converging at rate $O(n^{-1})$) and when the source is subGaussian while the target is compactly supported (squared $L^2$-error converging at rate $O(n^{-1/2})$); their importance lies in eliminating the compact support requirements. The proof technique uses a bias-variance decomposition in which the variance is controlled by standard concentration of measure results and the bias is handled by $T_1$-transport inequalities together with sample complexity results for estimating the EOT cost under subGaussian assumptions. Our experimental results point to a looseness in controlling the variance terms, and we conclude by posing several open problems.
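To make the object being estimated concrete, the following is a minimal sketch of an empirical EOT map computed from samples. It is not the authors' code. It assumes the in-sample estimator is the barycentric projection of the empirical entropic plan evaluated at the source samples, takes the cost to be $\tfrac{1}{2}\|x-y\|^2$ (the factor $\tfrac{1}{2}$ is a convention chosen here), and solves the dual with a plain log-domain Sinkhorn loop. The function names `sinkhorn_potentials` and `entropic_map_in_sample`, the iteration count, and the Gaussian test data are all illustrative choices.

```python
import numpy as np


def logsumexp(a, axis):
    """Numerically stable log-sum-exp along one axis."""
    m = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(a - m), axis=axis))


def sinkhorn_potentials(X, Y, eps, n_iter=500):
    """Log-domain Sinkhorn for uniform empirical measures on X and Y.

    Returns dual potentials (f, g) and the cost matrix C, where
    C[i, j] = 0.5 * ||X[i] - Y[j]||^2 (assumed convention).
    """
    n, m = X.shape[0], Y.shape[0]
    C = 0.5 * np.sum((X[:, None, :] - Y[None, :, :]) ** 2, axis=-1)
    log_a = np.full(n, -np.log(n))  # uniform source weights
    log_b = np.full(m, -np.log(m))  # uniform target weights
    f = np.zeros(n)
    g = np.zeros(m)
    for _ in range(n_iter):
        # Alternating dual updates; each enforces one marginal constraint.
        f = -eps * logsumexp((g[None, :] - C) / eps + log_b[None, :], axis=1)
        g = -eps * logsumexp((f[:, None] - C) / eps + log_a[:, None], axis=0)
    return f, g, C


def entropic_map_in_sample(X, Y, eps, n_iter=500):
    """Barycentric projection of the entropic plan at the source samples.

    T(X[i]) = sum_j w_ij * Y[j], with w_ij proportional to
    exp((g[j] - C[i, j]) / eps); uniform target weights cancel.
    """
    _, g, C = sinkhorn_potentials(X, Y, eps, n_iter)
    logits = (g[None, :] - C) / eps
    logits -= logits.max(axis=1, keepdims=True)  # stabilize the softmax
    W = np.exp(logits)
    W /= W.sum(axis=1, keepdims=True)
    return W @ Y


# Illustrative usage: two Gaussians, neither compactly supported.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
Y = rng.normal(loc=1.0, size=(200, 2))
T_hat = entropic_map_in_sample(X, Y, eps=0.5)
```

Each row of the weight matrix sums to one, so every estimated image `T_hat[i]` is a convex combination of the target samples. Repeating the computation over increasing `n` and comparing against a reference map is one way to probe the decay rates empirically, though the rates stated above concern expected errors and would require averaging over many draws.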
