Estimating Training Data Influence by Tracking Gradient Descent


Feb 19, 2020

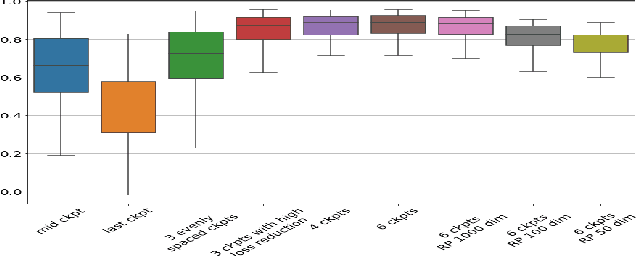

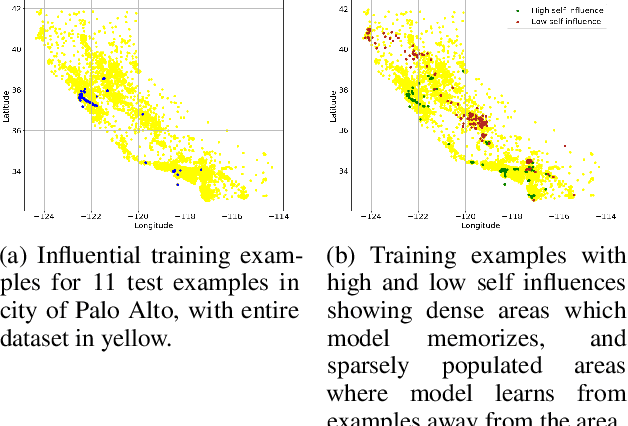

We introduce a method called TrackIn that computes the influence of a training example on a prediction made by the model by tracking how the loss on the test point changes during training each time the training example of interest is used. We provide a scalable implementation of TrackIn via a combination of a few key ideas: (a) a first-order approximation to the exact computation, (b) random projections to speed up the computation of the first-order approximation for large models, (c) saved checkpoints of standard training procedures, and (d) cherry-picking layers of a deep neural network. An experimental evaluation shows that TrackIn is more effective at identifying mislabelled training examples than related methods such as influence functions and representer points. We also discuss insights from applying the method to vision, regression, and natural language tasks.
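To make ideas (a) and (c) concrete, here is a minimal sketch of one plausible reading of the first-order, checkpoint-based score: at each saved checkpoint, take the dot product of the loss gradient at the training example with the loss gradient at the test example, scale it by the learning rate, and sum over checkpoints. The abstract does not spell out this formula, so treat it as an assumption. The linear model, the squared loss, and the names `grad_squared_loss` and `checkpoint_influence` are hypothetical choices made only for illustration, not the authors' implementation.

```python
import numpy as np


def grad_squared_loss(w, x, y):
    """Gradient of 0.5 * (w.x - y)^2 with respect to w (toy linear model)."""
    return (w @ x - y) * x


def checkpoint_influence(checkpoints, learning_rates, train_example, test_example):
    """Assumed first-order influence score summed over saved checkpoints.

    For each checkpoint w_i with learning rate lr_i, adds
    lr_i * <grad loss(w_i, train), grad loss(w_i, test)>.
    """
    x_tr, y_tr = train_example
    x_te, y_te = test_example
    total = 0.0
    for w, lr in zip(checkpoints, learning_rates):
        g_tr = grad_squared_loss(w, x_tr, y_tr)
        g_te = grad_squared_loss(w, x_te, y_te)
        total += lr * float(g_tr @ g_te)
    return total


# Toy data: two saved checkpoints of a 2-parameter linear model.
checkpoints = [np.array([0.0, 0.0]), np.array([0.5, 0.5])]
learning_rates = [0.1, 0.1]

train = (np.array([1.0, 0.0]), 1.0)
similar_test = (np.array([1.0, 0.0]), 1.0)     # same direction as the training input
unrelated_test = (np.array([0.0, 1.0]), 1.0)   # orthogonal to the training input

score = checkpoint_influence(checkpoints, learning_rates, train, similar_test)
score_unrelated = checkpoint_influence(checkpoints, learning_rates, train, unrelated_test)
print(score, score_unrelated)
```

In this toy setup the training example gets a positive score for the test point that shares its input direction and a zero score for the orthogonal one, which matches the intuition that a step on the training example only reduces the test loss when the two gradients align. For a deep network, the per-example gradients would come from automatic differentiation, possibly restricted to selected layers as in (d).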
