Estimating Mutual Information for Discrete-Continuous Mixtures

Paper and Code

Oct 09, 2018

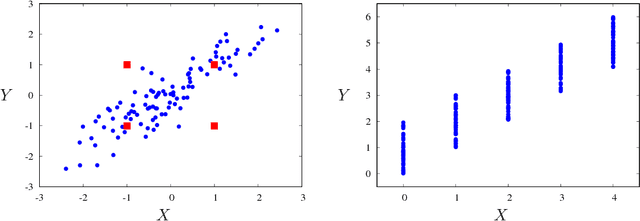

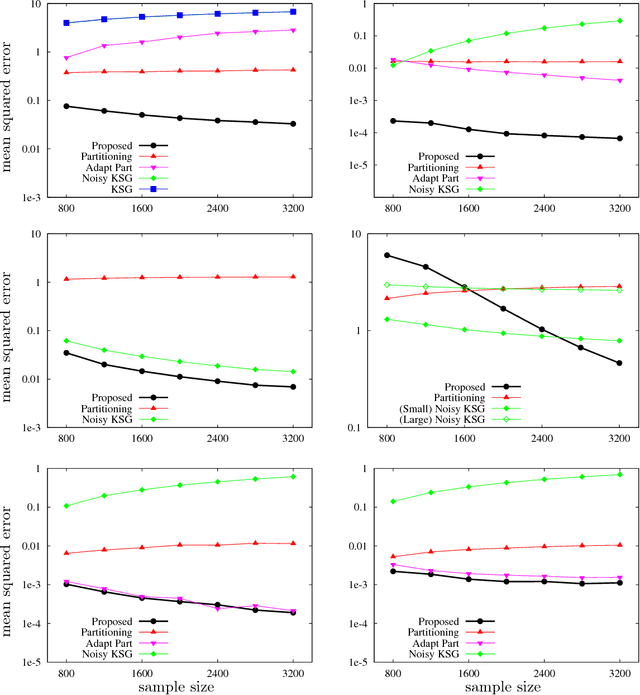

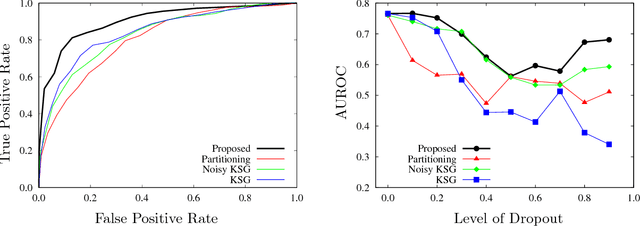

Estimating mutual information from observed samples is a basic primitive, useful in several machine learning tasks including correlation mining, information bottleneck clustering, learning a Chow-Liu tree, and conditional independence testing in (causal) graphical models. While mutual information is a well-defined quantity in general probability spaces, existing estimators can only handle two special cases: purely discrete or purely continuous pairs of random variables. The main challenge is that these methods first estimate the (differential) entropies of X, Y, and the pair (X, Y), and then add them up with appropriate signs to get an estimate of the mutual information. These 3H-estimators cannot be applied in general mixture spaces, where entropy is not well-defined. In this paper, we design a novel estimator for mutual information of discrete-continuous mixtures and prove that it is consistent. We provide numerical experiments suggesting superiority of the proposed estimator over two other heuristics: adding small continuous noise to all the samples and applying standard estimators tailored for purely continuous variables, or quantizing the samples and applying standard estimators tailored for purely discrete variables. This significantly widens the applicability of mutual information estimation in real-world applications, where some variables are discrete, some are continuous, and others are a mixture of continuous and discrete components.
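To make the 3H idea concrete, here is a minimal sketch of the baseline the abstract describes, restricted to the purely discrete case: estimate H(X), H(Y), and H(X, Y) from empirical frequencies and combine them as H(X) + H(Y) - H(X, Y). This is not the estimator proposed in the paper; the function names `plugin_entropy` and `three_h_mutual_information` are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter


def plugin_entropy(samples):
    """Empirical (plug-in) Shannon entropy, in nats, of hashable samples."""
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one sample")
    counts = Counter(samples)
    # Sum of -p * log(p) over the observed empirical frequencies p = c / n.
    return -sum((c / n) * math.log(c / n) for c in counts.values())


def three_h_mutual_information(xs, ys):
    """3H estimate H(X) + H(Y) - H(X, Y) for purely discrete paired samples.

    Illustrative baseline only (hypothetical helper, not the paper's method).
    """
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    joint = list(zip(xs, ys))  # paired samples for the joint entropy
    return plugin_entropy(xs) + plugin_entropy(ys) - plugin_entropy(joint)


# Y is a copy of X: the estimate equals H(X) = log 2.
dependent = three_h_mutual_information([0, 1, 0, 1], [0, 1, 0, 1])

# X and Y are empirically independent: the estimate is (numerically) zero.
independent = three_h_mutual_information([0, 0, 1, 1], [0, 1, 0, 1])
```

The sketch also hints at why this approach breaks down outside the discrete case. If the samples contain continuous values, they are almost surely all distinct, so each of the three plug-in entropies equals log n and the estimate is log n regardless of how X and Y are related.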
