Escaping Stochastic Traps with Aleatoric Mapping Agents

Paper and Code

Feb 08, 2021

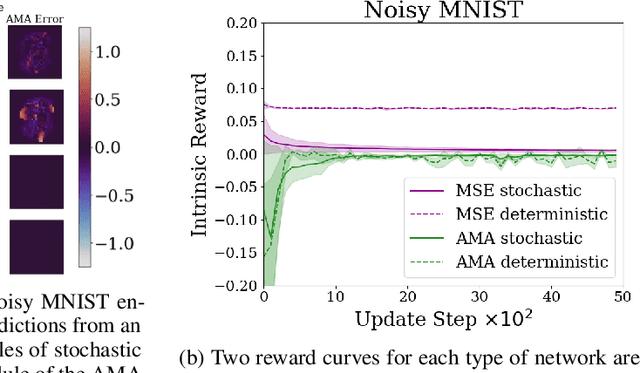

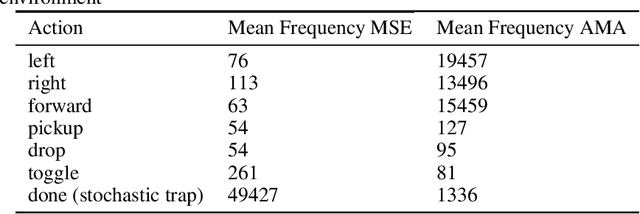

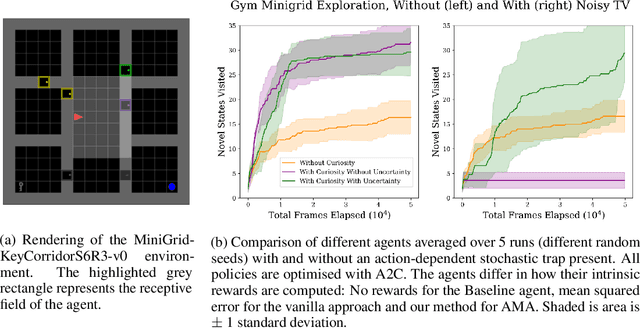

Exploration in environments with sparse rewards is difficult for artificial agents. Curiosity driven learning -- using feed-forward prediction errors as intrinsic rewards -- has achieved some success in these scenarios, but fails when faced with action-dependent noise sources. We present aleatoric mapping agents (AMAs), a neuroscience inspired solution modeled on the cholinergic system of the mammalian brain. AMAs aim to explicitly ascertain which dynamics of the environment are unpredictable, regardless of whether those dynamics are induced by the actions of the agent. This is achieved by generating separate forward predictions for the mean and variance of future states and reducing intrinsic rewards for those transitions with high aleatoric variance. We show AMAs are able to effectively circumvent action-dependent stochastic traps that immobilise conventional curiosity driven agents. The code for all experiments presented in this paper is open sourced: http://github.com/self-supervisor/Escaping-Stochastic-Traps-With-Aleatoric-Mapping-Agents.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge