Equitable-FL: Federated Learning with Sparsity for Resource-Constrained Environment

Sep 02, 2023

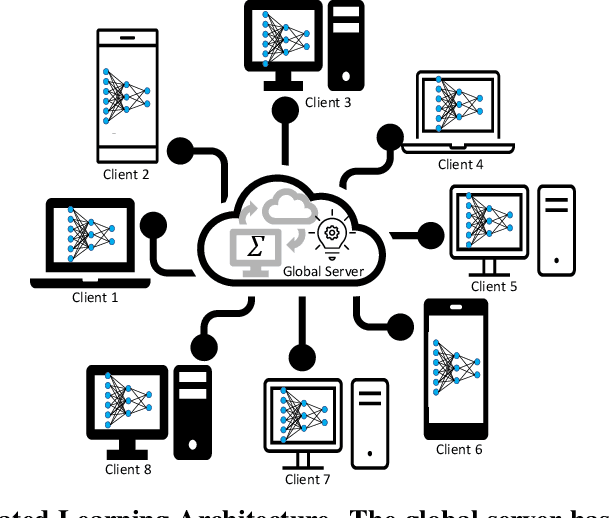

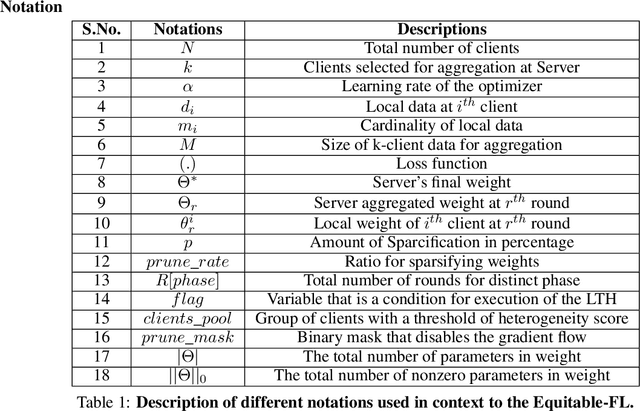

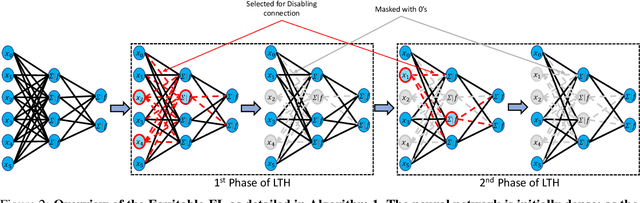

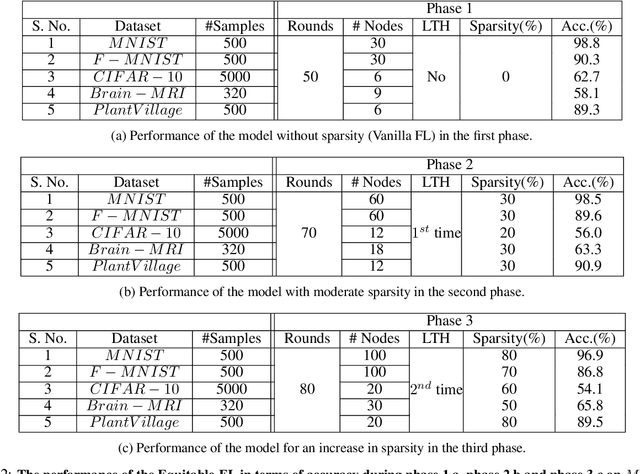

In Federated Learning, model training is performed across multiple computing devices, and only model parameters are shared with a common central server; the clients' data instances are never exchanged. This strategy assumes an abundance of resources on individual clients and uses those resources to build a richer model from the users' models. When that assumption is violated, however, learning may not be possible, because some nodes may be unable to participate in the process. In this paper, we propose a sparse form of federated learning that performs well in a resource-constrained environment. Our goal is to make learning possible regardless of a node's storage, computing, or bandwidth scarcity. The method is based on the observation that resource scarcity is defined by model size relative to the available resources, which means that reducing the number of parameters without affecting accuracy is key to model training in a resource-constrained environment. In this work, the Lottery Ticket Hypothesis approach is used to progressively sparsify models so that nodes with scarce resources can participate in collaborative training. We validate Equitable-FL on the MNIST, F-MNIST, and CIFAR-10 benchmark datasets, as well as on the Brain-MRI and PlantVillage datasets. Further, we examine the effect of sparsity on performance, model size compaction, and training speed-up. Results from experiments on training convolutional neural networks validate the efficacy of Equitable-FL in heterogeneous resource-constrained learning environments.
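To make the idea of progressive sparsification inside federated training concrete, the following is a minimal sketch and not the paper's implementation. It assumes weighted federated averaging on the server, global magnitude pruning of the smallest remaining weights after each stage, and a Lottery-Ticket-style rewind of the surviving weights to their initial values. The names `magnitude_mask`, `fed_avg`, `local_update`, and `run_stages`, the toy quadratic local objective, and the 20% pruning fraction are all hypothetical choices made for illustration.

```python
def magnitude_mask(weights, mask, prune_fraction):
    """Zero out the smallest-magnitude fraction of the still-active weights."""
    active = sorted((abs(w), i) for i, (w, m) in enumerate(zip(weights, mask)) if m)
    n_prune = int(len(active) * prune_fraction)
    new_mask = list(mask)
    for _, i in active[:n_prune]:
        new_mask[i] = 0
    return new_mask


def fed_avg(client_weights, client_sizes):
    """Average client weight vectors, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


def local_update(weights, mask, target, lr=0.1):
    """Toy local step (assumption): one gradient step on 0.5 * ||w - target||^2.

    The mask keeps pruned coordinates at zero, so a client only trains
    and transmits the surviving parameters.
    """
    return [m * (w - lr * (w - t)) for w, t, m in zip(weights, target, mask)]


def run_stages(init_weights, client_targets, client_sizes,
               stages=3, rounds_per_stage=5, prune_fraction=0.2):
    """Alternate federated training with prune-and-rewind stages."""
    mask = [1] * len(init_weights)
    global_w = list(init_weights)
    history = []  # number of active weights after each stage
    for _ in range(stages):
        for _ in range(rounds_per_stage):
            updates = [local_update(global_w, mask, t) for t in client_targets]
            global_w = fed_avg(updates, client_sizes)
        mask = magnitude_mask(global_w, mask, prune_fraction)
        # Rewind: surviving weights restart from their initial values.
        global_w = [m * w0 for m, w0 in zip(mask, init_weights)]
        history.append(sum(mask))
    return global_w, mask, history


if __name__ == "__main__":
    init = [0.5, -1.2, 0.05, 2.0, -0.3, 0.9, -0.01, 1.5, -0.7, 0.25]
    targets = [[1.0] * 10, [-1.0] * 10]
    _, final_mask, sizes = run_stages(init, targets, client_sizes=[10, 30])
    print("active weights per stage:", sizes)
    print("final mask:", final_mask)
```

Each stage shrinks the set of parameters that clients must store, train, and transmit, which is the mechanism by which a sparser model could let resource-limited nodes join later rounds. A real system would apply the mask per layer to a convolutional network and would choose the pruning schedule empirically.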
