Ensemble perspective for understanding temporal credit assignment


Feb 07, 2021

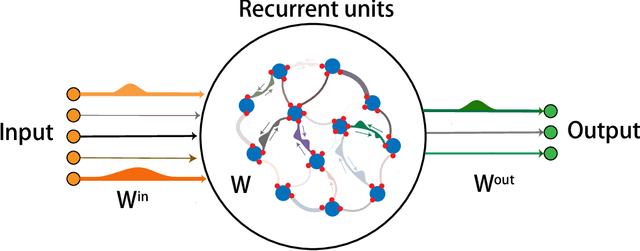

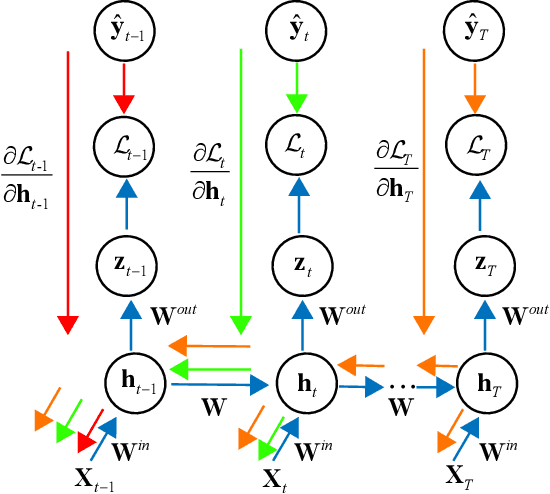

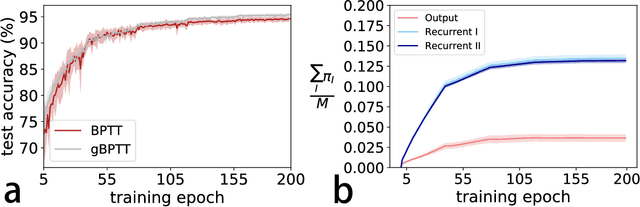

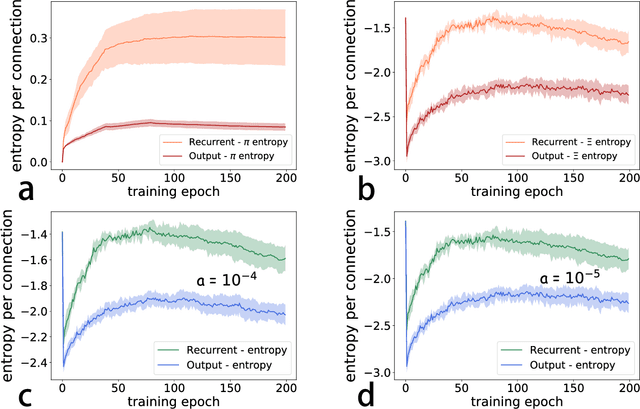

Recurrent neural networks are widely used for modeling spatio-temporal sequences in both natural language processing and neural population dynamics. However, understanding temporal credit assignment in these networks is hard. Here, we propose modeling each individual connection in the recurrent computation by a spike-and-slab distribution rather than by a precise weight value. We then derive a mean-field algorithm to train the network at the ensemble level. The method is applied to classifying handwritten digits when pixels are read in sequence, and to a multisensory integration task, a fundamental cognitive function of animals. Our model reveals the important connections that determine the overall performance of the network. It also shows, through the hyperparameters of the distribution, how spatio-temporal information is processed, and it reveals distinct types of emergent neural selectivity. Studying temporal credit assignment in recurrent neural networks from the ensemble perspective is thus promising.
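To make the ensemble idea concrete, the following is a minimal sketch of how a spike-and-slab weight could enter a mean-field forward pass. It is not taken from the paper's code. The parametrization (a spike probability `pi` at zero plus a Gaussian slab with mean `m` and variance `xi`), the function names, and the Gaussian approximation of the pre-activation are all assumptions made for illustration.

```python
import math
import random


def weight_moments(pi, m, xi):
    """Mean and variance of w ~ pi * delta(w) + (1 - pi) * N(m, xi).

    Assumed parametrization: pi is the probability that the connection
    is absent (the spike at zero); m and xi are the slab mean and variance.
    """
    mean = (1.0 - pi) * m
    second_moment = (1.0 - pi) * (xi + m * m)
    return mean, second_moment - mean * mean


def preactivation_stats(pis, ms, xis, inputs):
    """Mean and variance of sum_j w_j * x_j for independent weights."""
    g = 0.0
    delta = 0.0
    for pi, m, xi, x in zip(pis, ms, xis, inputs):
        mu, var = weight_moments(pi, m, xi)
        g += mu * x
        delta += var * x * x
    return g, delta


def sample_preactivation(g, delta, rng):
    """Draw one pre-activation under a Gaussian (central-limit) approximation."""
    return g + math.sqrt(max(delta, 0.0)) * rng.gauss(0.0, 1.0)


if __name__ == "__main__":
    rng = random.Random(0)
    g, delta = preactivation_stats(
        pis=[0.5, 0.1], ms=[2.0, -1.0], xis=[1.0, 0.2], inputs=[1.0, 0.5]
    )
    print("mean:", g, "variance:", delta)
    print("one sample:", sample_preactivation(g, delta, rng))
```

In a sketch like this, training would adjust `pi`, `m`, and `xi` rather than individual weight values, so a connection with `pi` close to 1 is effectively pruned, while one with small `pi` and small `xi` behaves almost like a fixed weight.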
