Enhancing LMMSE Performance with Modest Complexity Increase via Neural Network Equalizers


Nov 03, 2024

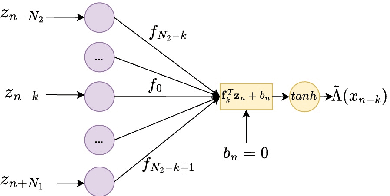

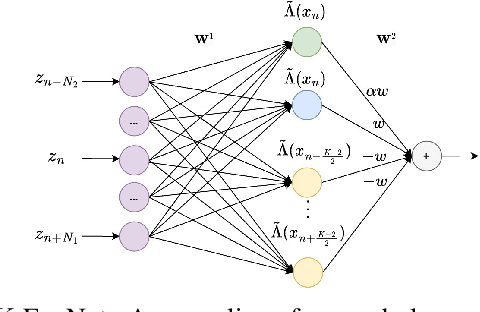

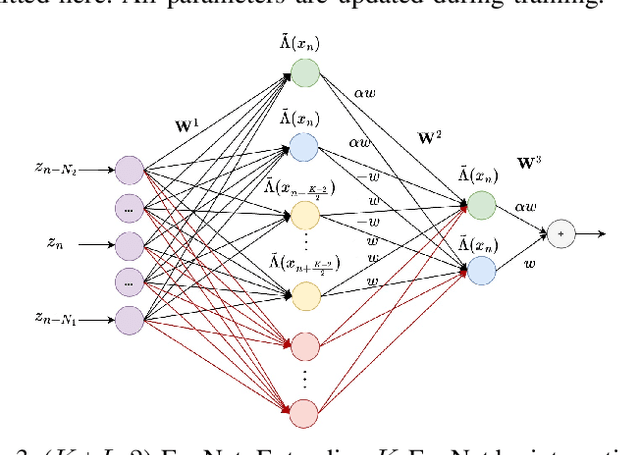

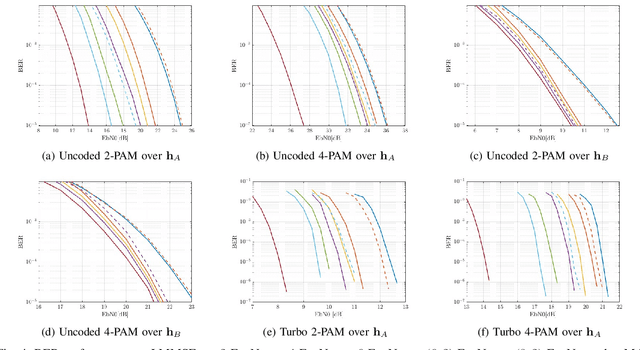

The BCJR algorithm is the optimal equalizer in the sense of minimizing the bit error rate (BER) over intersymbol interference (ISI) channels. However, its complexity grows exponentially with the channel memory, which imposes a significant computational burden. In contrast, the linear minimum mean square error (LMMSE) equalizer is much simpler, albeit with reduced performance compared to the BCJR. Recently, neural network (NN) based equalizers have emerged as promising alternatives. Trained to map observations to the originally transmitted symbols, these NNs achieve performance similar to that of the BCJR algorithm. However, they often require a large number of learnable parameters, resulting in complexity comparable to or even greater than that of the BCJR. This paper explores NN-based equalization with a reduced number of learnable parameters and low complexity. We introduce an NN equalizer whose complexity is comparable to that of LMMSE, which outperforms LMMSE and comes within a modest performance gap of the BCJR equalizer. A significant challenge for NNs with a limited parameter count is their tendency to converge to local minima, leading to suboptimal performance. To address this challenge, we propose a novel NN equalizer architecture with an initialization approach based on the LMMSE solution. This method overcomes the optimization difficulties and improves on LMMSE performance, both with and without turbo decoding.
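The abstract does not specify the proposed architecture, so the following Python sketch is only an illustration of the general idea of starting an NN equalizer from the LMMSE solution, not the authors' method. It assumes BPSK symbols, a known 3-tap ISI channel (`h` is an arbitrary example), additive white Gaussian noise, and a sliding-window LMMSE filter. The one-hidden-layer tanh network built by the hypothetical helpers `init_lmmse_nn` and `nn_forward` copies the LMMSE weights into its first hidden unit, so at initialization its hard decisions coincide with the LMMSE decisions and training would begin from the LMMSE operating point instead of a random one.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_matrix(h, window):
    """H such that y_win = H @ x_win for `window` outputs of y[n] = sum_k h[k] x[n-k]."""
    L = len(h)
    H = np.zeros((window, window + L - 1))
    for i in range(window):
        H[i, i:i + L] = h[::-1]
    return H


def lmmse_filter(h, window, noise_var, delay):
    """Sliding-window LMMSE weights for unit-energy i.i.d. symbols, target x_win[delay]."""
    H = conv_matrix(h, window)
    R_yy = H @ H.T + noise_var * np.eye(window)  # observation covariance
    r_yx = H[:, delay]                           # cross-covariance with the target symbol
    w = np.linalg.solve(R_yy, r_yx)
    mse = 1.0 - r_yx @ w                         # theoretical minimum MSE
    return w, mse


def init_lmmse_nn(w_lmmse, hidden, rng, scale=1e-3):
    """Hypothetical one-hidden-layer equalizer initialized from the LMMSE filter."""
    W1 = scale * rng.standard_normal((len(w_lmmse), hidden))
    W1[:, 0] = w_lmmse   # hidden unit 0 reproduces the LMMSE estimate
    b1 = np.zeros(hidden)
    w2 = np.zeros(hidden)
    w2[0] = 1.0          # output initially reads only hidden unit 0
    return W1, b1, w2, 0.0


def nn_forward(params, Y):
    W1, b1, w2, b2 = params
    return np.tanh(Y @ W1 + b1) @ w2 + b2  # soft symbol estimate in (-1, 1)


# --- Simulation: every value here is an illustrative assumption ---
rng = np.random.default_rng(0)
h = np.array([0.26, 0.93, 0.26])  # assumed 3-tap ISI channel (roughly unit energy)
L, W, N = len(h), 7, 20000
noise_var = 0.1                    # about 10 dB SNR for unit-energy BPSK
d = (W + L - 2) // 2               # decide the middle symbol of the window

x = rng.choice([-1.0, 1.0], size=N)  # BPSK symbols
y = np.convolve(x, h)[:N] + np.sqrt(noise_var) * rng.standard_normal(N)

Y = sliding_window_view(y, W)[L - 1:]  # rows y[n:n+W] for n >= L-1
targets = x[d:d + Y.shape[0]]          # matching symbols x[n-L+1+d]

w, mse_theory = lmmse_filter(h, W, noise_var, d)
x_lmmse = Y @ w
ber_lmmse = np.mean(np.sign(x_lmmse) != targets)
mse_emp = np.mean((x_lmmse - targets) ** 2)

params = init_lmmse_nn(w, hidden=4, rng=rng)
x_nn = nn_forward(params, Y)
agree = np.mean(np.sign(x_nn) == np.sign(x_lmmse))

print(f"LMMSE BER {ber_lmmse:.4f}, MSE {mse_emp:.3f} (theory {mse_theory:.3f})")
print(f"NN-at-init decisions matching LMMSE: {agree:.4f}")
```

Training (not shown) would then update all weights, for example by minimizing the MSE or binary cross-entropy against `targets`; the extra hidden units, which start near zero, give the network room to learn a nonlinear correction on top of the LMMSE filter. In this sketch the network costs roughly `W * hidden` multiplications per symbol versus `W` for LMMSE, which shows how such a design can keep complexity within a small multiple of the linear equalizer.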
