Enhancing Group Fairness in Federated Learning through Personalization


Jul 27, 2024

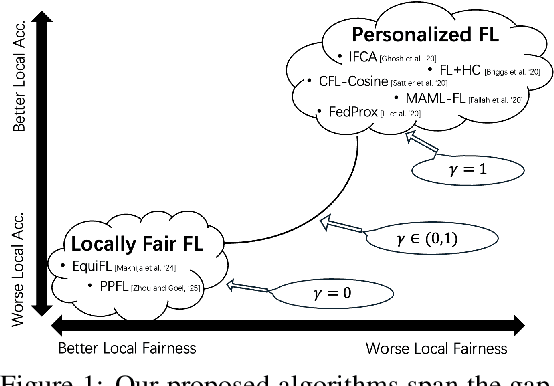

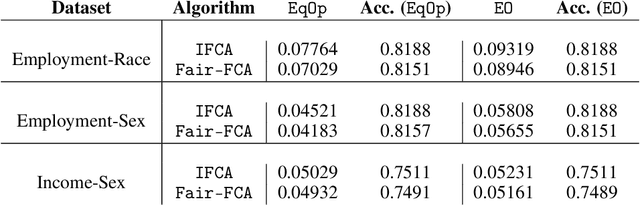

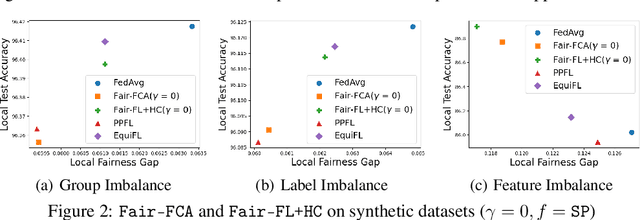

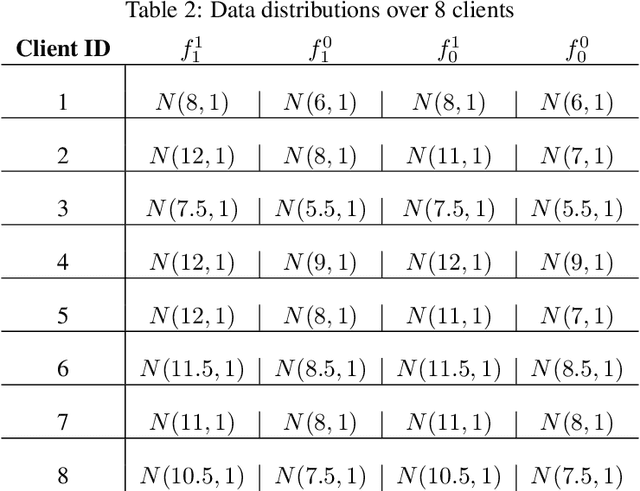

Personalized Federated Learning (FL) algorithms collaboratively train customized models for each client, improving the accuracy of the learned models on each client's local data (e.g., by clustering similar clients or by fine-tuning models locally). In this paper, we investigate the impact of such personalization techniques on the group fairness of the learned models, and show that personalization can also improve (local) fairness as an unintended benefit. We first illustrate this benefit through numerical experiments comparing two classes of personalized FL algorithms (clustering and fine-tuning) against a baseline FedAvg algorithm. We then elaborate on the reasons why personalized FL improves fairness and provide analytical support. Motivated by these findings, we further propose a new Fairness-aware Federated Clustering Algorithm, Fair-FCA, in which clients can be clustered to obtain a (tunable) fairness-accuracy tradeoff. Through numerical experiments, we demonstrate the ability of Fair-FCA to strike a balance between accuracy and fairness at the client level.
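To make the idea of a tunable fairness-accuracy tradeoff in client clustering concrete, here is a minimal sketch of how a client might pick a cluster. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not specify the selection rule or the fairness notion, so the weighted-sum score, the use of a statistical parity gap, and all names (`statistical_parity_gap`, `error_rate`, `choose_cluster`, `gamma`) are hypothetical.

```python
# Illustrative sketch only. The weighted-sum rule and the statistical
# parity metric are assumptions; the abstract does not define them.

def statistical_parity_gap(preds, groups):
    """Absolute difference in positive-prediction rate between groups 0 and 1."""
    rates = {}
    for g in (0, 1):
        members = [p for p, a in zip(preds, groups) if a == g]
        rates[g] = sum(members) / len(members) if members else 0.0
    return abs(rates[0] - rates[1])


def error_rate(preds, labels):
    """Fraction of predictions that differ from the true labels."""
    return sum(p != y for p, y in zip(preds, labels)) / len(labels)


def choose_cluster(models, features, labels, groups, gamma):
    """Return the index of the cluster model with the lowest combined score.

    gamma = 1.0 considers accuracy only; gamma = 0.0 considers fairness only.
    """
    scores = []
    for model in models:
        preds = [model(x) for x in features]
        score = (gamma * error_rate(preds, labels)
                 + (1.0 - gamma) * statistical_parity_gap(preds, groups))
        scores.append(score)
    return min(range(len(models)), key=scores.__getitem__)


# Toy local dataset for one client: one feature, binary label, binary group.
features = [0.2, 0.4, 0.6, 0.8]
labels = [0, 0, 1, 1]
groups = [0, 0, 1, 1]

# Two hypothetical cluster models: a threshold rule and a constant predictor.
models = [lambda x: int(x > 0.5), lambda x: 1]

accuracy_only = choose_cluster(models, features, labels, groups, gamma=1.0)
fairness_only = choose_cluster(models, features, labels, groups, gamma=0.0)
```

In this toy setup the threshold model is perfectly accurate but treats the two groups very differently, while the constant model is less accurate but has no parity gap, so the chosen cluster changes as `gamma` moves between the two extremes.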
