Enhancing Generative Models via Quantum Correlations

Jan 20, 2021

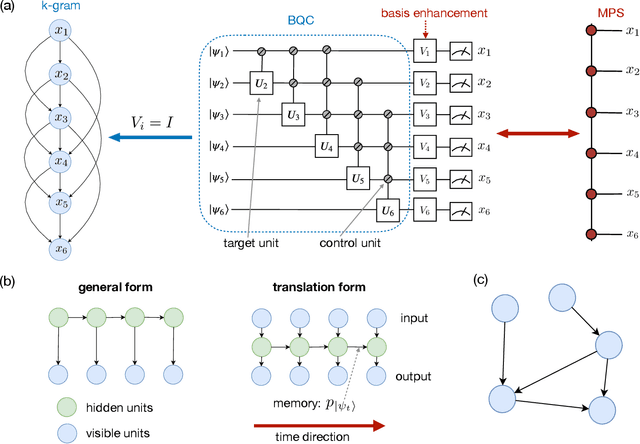

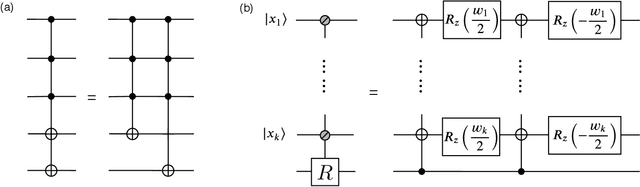

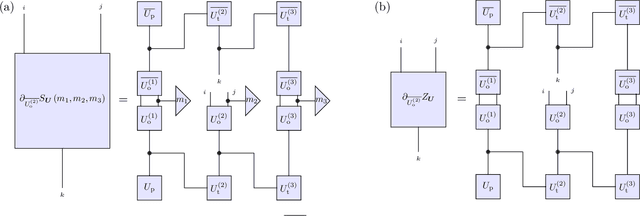

Generative modeling using samples drawn from a probability distribution constitutes a powerful approach for unsupervised machine learning. Quantum mechanical systems can produce probability distributions that exhibit quantum correlations, which are difficult to capture using classical models. We show theoretically that such quantum correlations provide a powerful resource for generative modeling. In particular, we provide an unconditional proof of separation in expressive power between a class of widely used generative models, known as Bayesian networks, and its minimal quantum extension. We show that this expressivity advantage is associated with quantum nonlocality and quantum contextuality. Furthermore, we numerically test this separation on standard machine learning data sets and show that it holds for practical problems. The possibility of quantum advantage demonstrated in this work not only sheds light on the design of useful quantum machine learning protocols but also provides inspiration to draw on ideas from quantum foundations to improve purely classical algorithms.
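As a rough illustration of what "quantum correlations that are difficult to capture using classical models" can mean, the sketch below evaluates the standard CHSH expression for a maximally entangled two-qubit state and compares it with the best value any deterministic local (classical) assignment can reach. This is a textbook example of quantum nonlocality, not the construction used in the paper; the function names, the choice of state, and the measurement settings are assumptions made for illustration only.

```python
import itertools

import numpy as np

# Pauli observables with eigenvalues +1 and -1.
Z = np.array([[1.0, 0.0], [0.0, -1.0]])
X = np.array([[0.0, 1.0], [1.0, 0.0]])

# Bell state (|00> + |11>) / sqrt(2); an illustrative choice, not taken from the paper.
PHI_PLUS = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2)


def correlation(a, b, state):
    """Expectation value <state| a (x) b |state> for a real state vector."""
    return float(state @ np.kron(a, b) @ state)


def chsh_quantum():
    """CHSH value E(a0,b0) + E(a0,b1) + E(a1,b0) - E(a1,b1) for the Bell state."""
    a0, a1 = Z, X
    b0 = (Z + X) / np.sqrt(2)
    b1 = (Z - X) / np.sqrt(2)
    return (
        correlation(a0, b0, PHI_PLUS)
        + correlation(a0, b1, PHI_PLUS)
        + correlation(a1, b0, PHI_PLUS)
        - correlation(a1, b1, PHI_PLUS)
    )


def chsh_classical_max():
    """Best CHSH value over all deterministic +/-1 assignments (local hidden variables)."""
    return max(
        a0 * b0 + a0 * b1 + a1 * b0 - a1 * b1
        for a0, a1, b0, b1 in itertools.product([-1, 1], repeat=4)
    )


if __name__ == "__main__":
    print("quantum CHSH value:", chsh_quantum())
    print("classical maximum: ", chsh_classical_max())
```

The quantum value reaches 2√2, above the classical bound of 2. Correlations of this kind are what the abstract refers to as nonlocal; how they translate into an expressivity gap for Bayesian networks is the subject of the paper itself and is not reproduced here.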
