Enhanced Memory Network: The novel network structure for Symbolic Music Generation

Oct 07, 2021

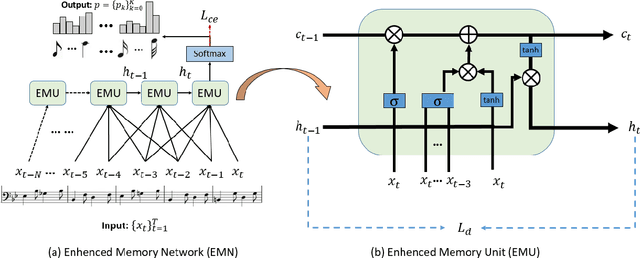

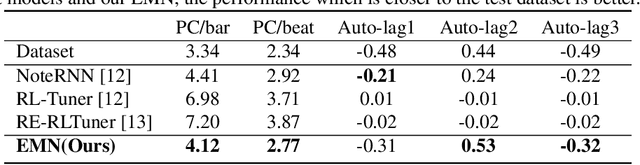

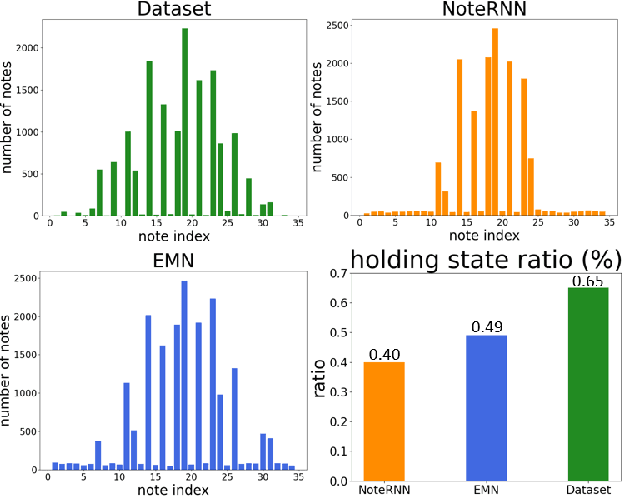

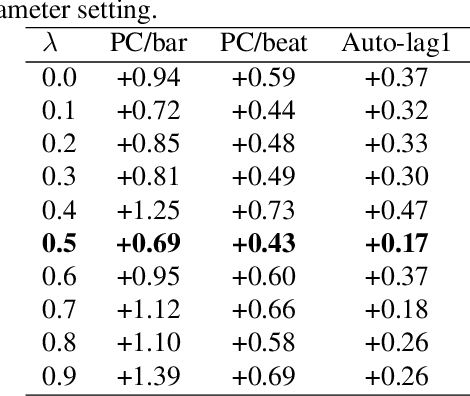

Symbolic melody generation is one of the essential tasks in automatic music generation. Recently, neural network models have had a significant influence on the generation of symbolic melodies. However, musical context structure is difficult for deep neural networks to capture. Although long short-term memory (LSTM) networks have been applied to this problem by learning order dependence in the musical sequence, an LSTM that receives only one note as input at each time step cannot capture musical context. In this paper, we propose a novel Enhanced Memory Network (EMN) built from several recurrent units, each named an Enhanced Memory Unit (EMU). The EMU explicitly modifies the internal architecture of the LSTM to incorporate musical beat information, and it reinforces the memory of the most recent musical beat by aggregating beat information inside the memory gate. In addition, to increase the diversity of the generated notes, the cosine distance between hidden states at adjacent time steps is included as part of the loss function, which discourages the high similarity scores that harm diversity. Objective and subjective evaluation results show that the proposed method achieves state-of-the-art performance. Code and a music demo are available at https://github.com/qrqrqrqr/EMU
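The diversity term mentioned in the abstract can be illustrated with a small sketch. The abstract does not give the exact formula, so everything here is an assumption made for illustration: the function names `adjacent_cosine_similarity` and `total_loss`, the `weight` parameter, and the choice to average the similarity over time steps and add it to the note-prediction loss as a penalty. It is not taken from the authors' repository.

```python
import numpy as np

def adjacent_cosine_similarity(hidden, eps=1e-8):
    """Mean cosine similarity between hidden states at adjacent time steps.

    hidden: array-like of shape (T, D) with T >= 2, one hidden state per step.
    Values near 1 mean consecutive states point in almost the same direction.
    """
    hidden = np.asarray(hidden, dtype=float)
    prev, nxt = hidden[:-1], hidden[1:]            # pairs (h_t, h_{t+1})
    dots = np.sum(prev * nxt, axis=1)              # per-pair dot product
    norms = np.linalg.norm(prev, axis=1) * np.linalg.norm(nxt, axis=1)
    return float(np.mean(dots / (norms + eps)))    # eps avoids division by zero

def total_loss(note_loss, hidden, weight=0.1):
    """Hypothetical combined loss: prediction loss plus a similarity penalty.

    `weight` is an assumed hyperparameter, not a value from the paper.
    """
    return note_loss + weight * adjacent_cosine_similarity(hidden)
```

Under these assumptions, a sequence whose hidden states barely change from step to step receives a larger penalty than one whose states vary, which is the effect the abstract describes as avoiding high similarity between adjacent time steps.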
