Energy Drain of the Object Detection Processing Pipeline for Mobile Devices: Analysis and Implications


Nov 26, 2020

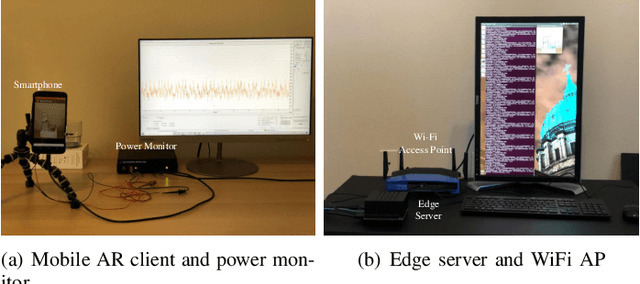

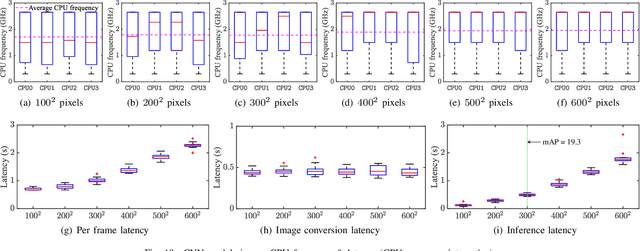

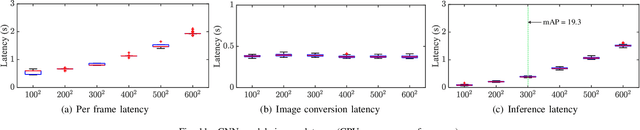

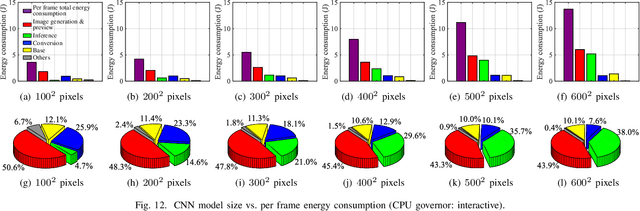

Applying deep learning to object detection makes it possible to accurately detect and classify complex objects in the real world. However, few mobile applications currently use deep learning because it is computation-intensive and energy-consuming. To the best of our knowledge, this paper presents the first detailed experimental study of a mobile augmented reality (AR) client's energy consumption and detection latency when executing Convolutional Neural Network (CNN)-based object detection, either locally on the smartphone or remotely on an edge server. To accurately measure the energy consumption on the smartphone and obtain a breakdown of the energy consumed by each phase of the object detection processing pipeline, we propose a new measurement strategy. Our detailed measurements refine the energy analysis of mobile AR clients and reveal several interesting perspectives on the energy consumption of executing CNN-based object detection. Based on our experimental results, we also propose several insights and research opportunities. These findings will guide the design of energy-efficient processing pipelines for CNN-based object detection.
