End-to-End Image Compression with Probabilistic Decoding


Sep 30, 2021

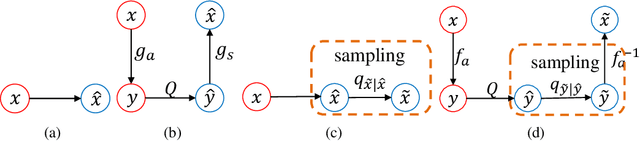

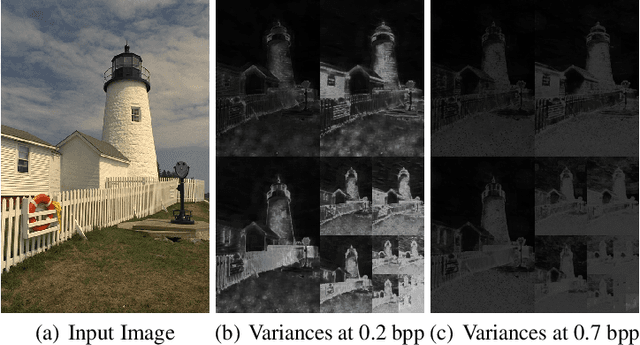

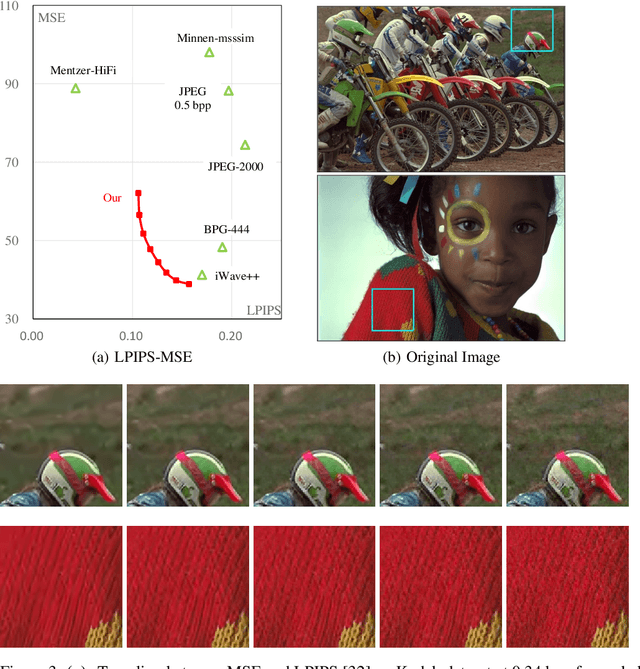

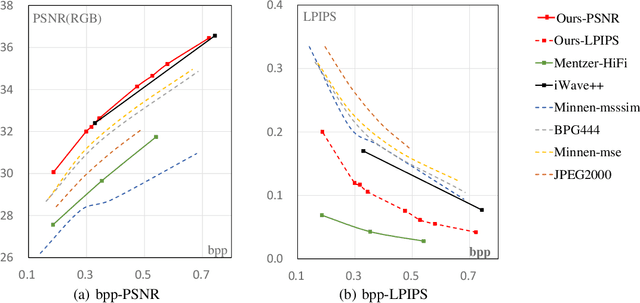

Lossy image compression is a many-to-one process, so one bitstream corresponds to multiple possible original images, especially at low bit rates. Previous studies on image compression have seldom taken this into account; they usually choose a single image as the reconstruction, e.g., the one with the maximum a posteriori probability. We propose a learned image compression framework that natively supports probabilistic decoding. The compressed bitstream is decoded into a set of parameters that instantiate a pre-chosen distribution, and the decoder then samples from this distribution to reconstruct images. The decoder may adopt different sampling strategies and produce diverse reconstructions, some with higher signal fidelity and others with better visual quality. The proposed framework relies on an invertible neural network-based transform that converts pixels into coefficients that obey the pre-chosen distribution as closely as possible. Our code and models will be made publicly available.
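The decoding pipeline described above can be illustrated with a toy sketch. It is not the authors' implementation: it assumes the pre-chosen distribution is a per-coefficient Gaussian, stands in for the learned transform with a single additive coupling step, and uses a hypothetical `temperature` knob as one possible sampling strategy. The names `shift`, `coupling_forward`, `coupling_inverse`, and `decode` are all illustrative.

```python
import random

def shift(v):
    # Hypothetical stand-in for a learned sub-network inside the transform.
    return 0.5 * v + 1.0

def coupling_forward(x):
    """Pixels -> coefficients: keep the first half, shift the second half."""
    h = len(x) // 2
    a, b = x[:h], x[h:]
    return a + [bi + shift(ai) for ai, bi in zip(a, b)]

def coupling_inverse(z):
    """Coefficients -> pixels: exact inverse of coupling_forward."""
    h = len(z) // 2
    a, b = z[:h], z[h:]
    return a + [bi - shift(ai) for ai, bi in zip(a, b)]

def decode(params, temperature, rng):
    """Sample coefficients from the decoded distribution, then invert the transform.

    params: list of (mean, std) pairs, one per coefficient (assumed Gaussian).
    temperature: 0 returns the mean coefficients; larger values add diversity.
    """
    coeffs = [mu + temperature * sigma * rng.gauss(0.0, 1.0) for mu, sigma in params]
    return coupling_inverse(coeffs)

if __name__ == "__main__":
    rng = random.Random(0)
    # Parameters that a bitstream might decode to (made-up values).
    params = [(0.2, 0.05), (0.8, 0.05), (1.6, 0.10), (1.5, 0.10)]
    print("mean decode:   ", decode(params, 0.0, rng))
    print("sampled decode:", decode(params, 1.0, rng))
    print("sampled decode:", decode(params, 1.0, rng))
```

Because the transform is invertible, every sampled coefficient vector maps back to exactly one candidate image. In this sketch, a temperature of zero yields a single deterministic reconstruction, while nonzero temperatures yield a different plausible reconstruction on each call.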
