Emotional Embeddings: Refining Word Embeddings to Capture Emotional Content of Words

Paper and Code

May 31, 2019

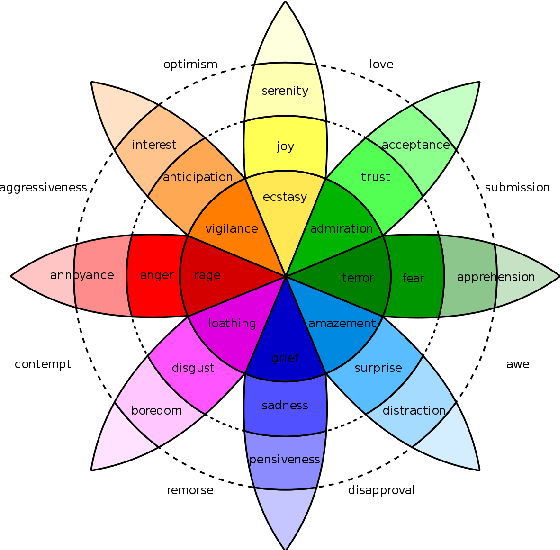

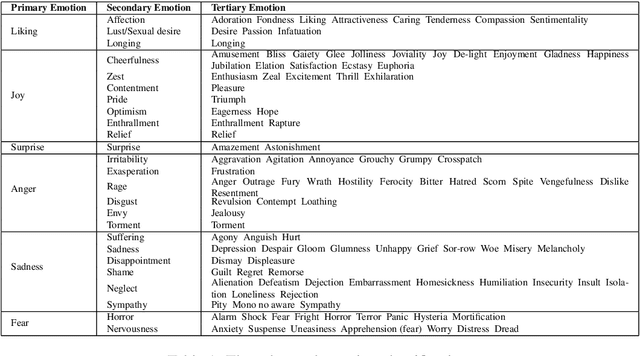

Word embeddings are among the most useful tools in a modern natural language processing practitioner's toolkit. They encode many kinds of information about each word, which makes them a leading way to represent terms in any NLP task. Some kinds of information, however, cannot be learned by these models, and the emotional content of words is one of them. In this paper, we present an approach for incorporating emotional information about words into these models. We accomplish this by adding a secondary training stage that uses an emotion lexicon and a psychological model of basic emotions. We show that fitting an emotional model to pre-trained word vectors improves their performance on emotional similarity metrics. The retrained models outperform their original counterparts, with improvements ranging from 13% for the Word2Vec model to 29% for GloVe vectors. This is the first such model presented in the literature, and although preliminary, these emotion-sensitive models can open the way to better performance in a variety of emotion detection techniques.
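As a rough illustration of what a secondary training stage over pre-trained vectors could look like, the toy sketch below pulls together words that share an emotion label and pushes apart words whose labels are opposites. It is not the objective used in the paper: the update rule, the `refine` function, the two-dimensional vectors, the lexicon entries, and the joy/sadness opposition are all assumptions chosen only to make the idea concrete.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def refine(vectors, lexicon, opposites, lr=0.1, steps=20):
    """Hypothetical second-stage update (not the paper's method).

    Words with the same emotion label are moved toward each other;
    words with opposite labels are moved away from each other.
    """
    # Work on normalized copies so the input vectors are left untouched.
    vecs = {w: normalize(v) for w, v in vectors.items()}
    labelled = [w for w in vecs if w in lexicon]
    for _ in range(steps):
        for w in labelled:
            for o in labelled:
                if o == w:
                    continue
                if lexicon[o] == lexicon[w]:
                    sign = 1.0   # same emotion: attract
                elif opposites.get(lexicon[w]) == lexicon[o]:
                    sign = -1.0  # opposite emotion: repel
                else:
                    continue     # unrelated emotions: leave unchanged
                moved = [a + sign * lr * (b - a)
                         for a, b in zip(vecs[w], vecs[o])]
                vecs[w] = normalize(moved)
    return vecs


# Toy "pre-trained" vectors in which "happy" and "sad" start out fairly close,
# as words that occur in similar contexts often do.
vectors = {
    "happy": [1.0, 0.1],
    "joyful": [0.9, 0.3],
    "sad": [0.8, 0.6],
}
lexicon = {"happy": "joy", "joyful": "joy", "sad": "sadness"}  # assumed labels
opposites = {"joy": "sadness", "sadness": "joy"}               # assumed opposition

refined = refine(vectors, lexicon, opposites)
print("happy/joyful:", cosine(vectors["happy"], vectors["joyful"]),
      "->", cosine(refined["happy"], refined["joyful"]))
print("happy/sad:   ", cosine(vectors["happy"], vectors["sad"]),
      "->", cosine(refined["happy"], refined["sad"]))
```

In this toy setting the similarity between "happy" and "joyful" rises while the similarity between "happy" and "sad" falls, which is the kind of change an emotional similarity metric would reward. A real refinement stage would also need to preserve the information already present in the pre-trained vectors, which this sketch does not attempt.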
