Emergence of the SVD as an interpretable factorization in deep learning for inverse problems

Jan 18, 2023

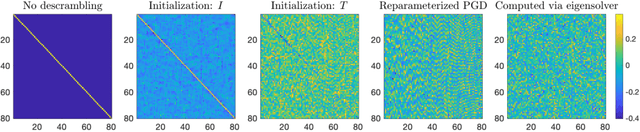

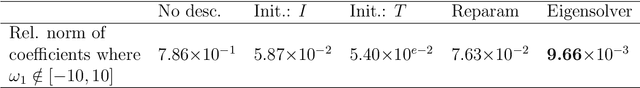

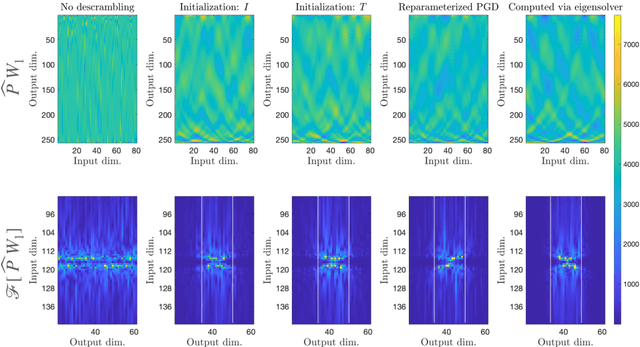

We demonstrate that the singular value decomposition (SVD) of the weight matrices emerges when interpreting neural networks (NNs) trained for parameter estimation from noisy signals. The SVD appears naturally as a consequence of first applying a descrambling transform, a recently developed technique for addressing interpretability in NNs \cite{amey2021neural}. We find that, within the class of noisy parameter estimation problems, the SVD may be the means by which networks memorize the signal model. We substantiate our theoretical findings with empirical evidence from both linear and non-linear settings. Our results also illuminate the connections between a mathematical theory of semantic development \cite{saxe2019mathematical} and neural network interpretability.
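
To make the linear case concrete, here is a minimal sketch of how the SVD of a trained weight matrix can carry the signal model. It is not the paper's descrambling procedure. It assumes a hypothetical linear forward model `y = A @ x + noise` and substitutes a closed-form ridge-regularized estimator for a trained network. The names `A`, `lam`, and `ridge_estimator` are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def ridge_estimator(A, lam):
    """Closed-form linear estimator W, so that x_hat = W @ y for y = A @ x + noise.

    Assumption: this stands in for a trained single-layer linear network.
    """
    n_params = A.shape[1]
    # Solves (A^T A + lam * I) W = A^T for W.
    return np.linalg.solve(A.T @ A + lam * np.eye(n_params), A.T)

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 4))  # hypothetical signal model: 4 parameters, 20 samples
lam = 0.1                         # hypothetical regularization strength
W = ridge_estimator(A, lam)       # shape (4, 20)

# Thin SVDs of the signal model and of the estimator weights.
U_a, s_a, Vt_a = np.linalg.svd(A, full_matrices=False)
U_w, s_w, Vt_w = np.linalg.svd(W, full_matrices=False)

# If A = U S V^T, then W = V diag(s / (s^2 + lam)) U^T, so the singular
# values of W are a fixed function of those of A (sorted descending here).
predicted = np.sort(s_a / (s_a**2 + lam))[::-1]
sv_match = np.allclose(s_w, predicted)

# The input-side singular vectors of W span the column space of A:
# compare the orthogonal projectors onto the two subspaces.
P_a = U_a @ U_a.T
P_w = Vt_w.T @ Vt_w
subspace_match = np.allclose(P_a, P_w)

print(sv_match, subspace_match)
```

In this toy setting the singular vectors of the estimator are those of the signal model, which is one simple sense in which a weight matrix can "memorize" the model. Whether the same structure holds for a given trained network is an empirical question that the sketch does not address.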
