Emergence of Selective Invariance in Hierarchical Feed Forward Networks


Jan 30, 2017

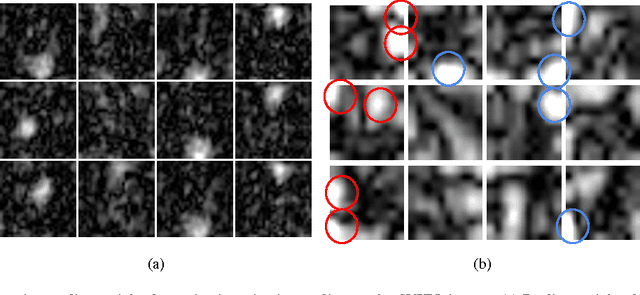

Many theories have emerged which investigate how invariance is generated in hierarchical networks through simple schemes such as max and mean pooling. The restriction to max/mean pooling in theoretical and empirical studies has diverted attention away from a more general way of generating invariance to nuisance transformations. We conjecture that hierarchically building selective invariance (i.e., carefully choosing the range of the transformation to be invariant to at each layer of a hierarchical network) is important for pattern recognition. We utilize a novel pooling layer called adaptive pooling to find linear pooling weights within networks. These networks with the learnt pooling weights have performances on object categorization tasks that are comparable to max/mean pooling networks. Interestingly, adaptive pooling can converge to mean pooling (when initialized with random pooling weights), find more general linear pooling schemes or even decide not to pool at all. We illustrate the general notion of selective invariance through object categorization experiments on large-scale datasets such as SVHN and ILSVRC 2012.
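
The idea of linear pooling weights can be illustrated with a small sketch. The abstract does not give the exact formulation of adaptive pooling, so the following is only an assumption of what a linear pooling step over non-overlapping 1-D windows might look like. The function name `linear_pool` and the example weight vectors are hypothetical, and the weights are fixed by hand here rather than learnt.

```python
import numpy as np

def linear_pool(x, weights):
    """Pool non-overlapping 1-D windows of x with a weighted sum.

    Hypothetical helper for illustration, not the paper's implementation.
    x: 1-D array whose length is a multiple of len(weights).
    weights: 1-D array with one pooling weight per window position.
    """
    x = np.asarray(x, dtype=float)
    weights = np.asarray(weights, dtype=float)
    k = weights.shape[0]
    if x.shape[0] % k != 0:
        raise ValueError("len(x) must be a multiple of the window size")
    windows = x.reshape(-1, k)  # one row per pooling window
    return windows @ weights    # weighted sum within each window

x = np.array([1.0, 3.0, 2.0, 6.0])

# Uniform weights reproduce mean pooling.
mean_out = linear_pool(x, np.array([0.5, 0.5]))       # [2.0, 4.0]

# One-hot weights pass a single unit through unchanged
# (one possible reading of "not pooling at all"; an assumption).
select_out = linear_pool(x, np.array([1.0, 0.0]))     # [1.0, 2.0]

# Any other weight vector gives a more general linear pooling scheme.
general_out = linear_pool(x, np.array([0.75, 0.25]))  # [1.5, 3.0]
```

Under this reading, mean pooling and the absence of pooling are two points in the same space of weight vectors, which is consistent with the abstract's remark that learnt weights can converge to either, or to something in between.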
