Efficient Video Understanding via Layered Multi Frame-Rate Analysis

Nov 24, 2018

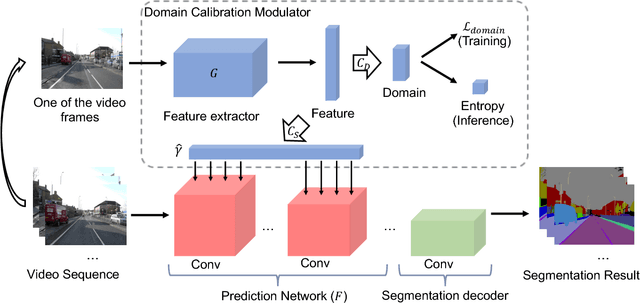

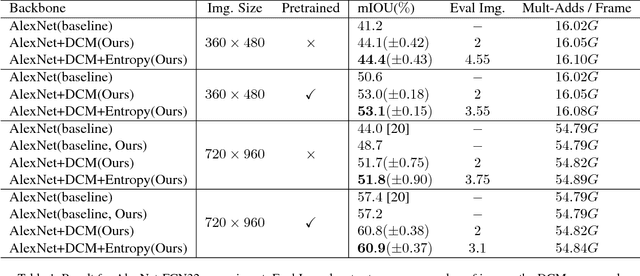

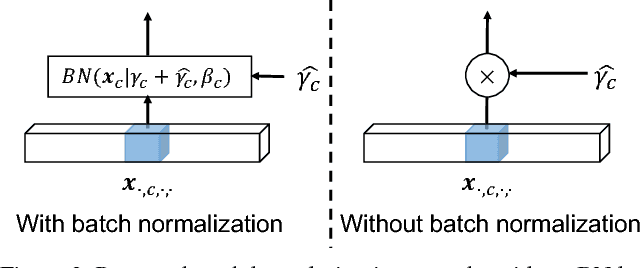

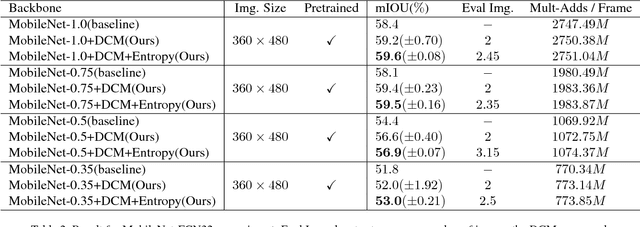

One of the greatest challenges in designing a real-time perception system for autonomous vehicles and drones is the conflicting requirement of safety (high prediction accuracy) and efficiency. Traditional approaches use a single frame rate for the entire system. Motivated by the observation that a lack of robustness against environmental factors is the major weakness of compact ConvNet architectures, we propose a dual frame-rate system that brings together the best of both worlds: a modulator stream that executes an expensive model, robust to environmental factors, at a low frame rate to extract slowly changing features describing the environment, and a prediction stream that executes a lightweight model in real time to extract transient signals that describe the particularities of the current frame. The advantage of our design is validated by an extensive empirical study, which shows that our solution leads to consistent improvements across a variety of backbone architecture choices and input resolutions. These findings suggest that multiple frame-rate systems are a promising direction for designing efficient perception for autonomous agents.
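The scheduling idea behind the two streams can be illustrated with a minimal sketch. It is not the paper's implementation: the function name `run_dual_rate`, the `modulator_period` parameter, and the toy `mean_brightness` and `offset_from_context` stand-ins are all hypothetical, and the abstract does not say how the modulator's features condition the prediction stream, so the sketch simply passes the most recent cached features to the lightweight model on every frame.

```python
from typing import Callable, Iterable, List, Sequence

Frame = Sequence[float]
Features = List[float]


def run_dual_rate(
    frames: Iterable[Frame],
    modulator: Callable[[Frame], Features],
    predictor: Callable[[Frame, Features], float],
    modulator_period: int = 4,
) -> List[float]:
    """Run `predictor` on every frame and `modulator` on every
    `modulator_period`-th frame, reusing its cached output in between."""
    if modulator_period < 1:
        raise ValueError("modulator_period must be >= 1")
    cached: Features = []
    outputs: List[float] = []
    for index, frame in enumerate(frames):
        if index % modulator_period == 0:
            # Low frame-rate path: refresh the slowly changing context.
            cached = modulator(frame)
        # Real-time path: cheap model conditioned on the cached context.
        outputs.append(predictor(frame, cached))
    return outputs


# Toy stand-ins (assumptions for illustration only, not real networks).
modulator_calls: List[int] = []


def mean_brightness(frame: Frame) -> Features:
    """Pretend 'expensive' model: summarizes the frame by its mean value."""
    modulator_calls.append(1)
    return [sum(frame) / len(frame)]


def offset_from_context(frame: Frame, context: Features) -> float:
    """Pretend 'lightweight' model: first pixel relative to cached context."""
    return frame[0] - context[0]


frames = [[1.0, 3.0], [2.0, 4.0], [5.0, 5.0], [0.0, 2.0], [10.0, 20.0]]
predictions = run_dual_rate(
    frames, mean_brightness, offset_from_context, modulator_period=2
)
```

With `modulator_period=2`, the expensive stand-in runs on only three of the five frames, while a prediction is still produced for every frame. In a real system the two streams would more plausibly run asynchronously rather than in one loop; the single loop here only makes the frame-rate ratio explicit.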
