Efficient and Interpretable Robot Manipulation with Graph Neural Networks


Feb 25, 2021

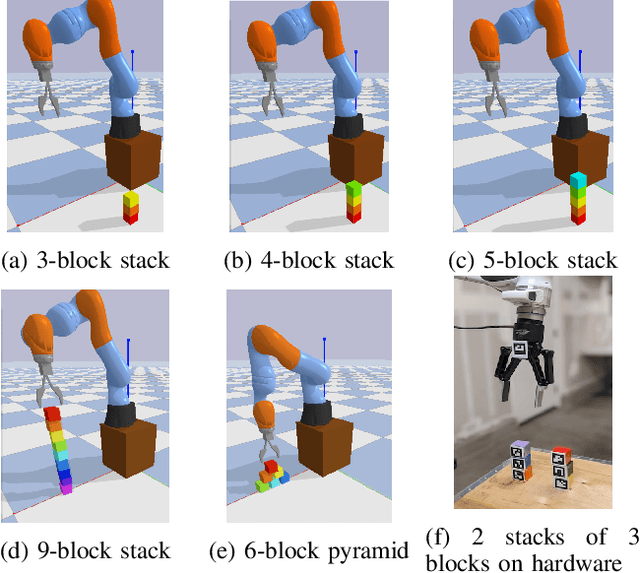

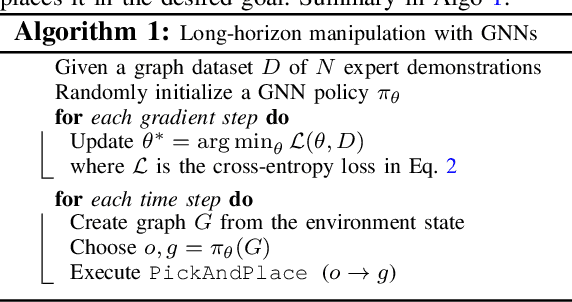

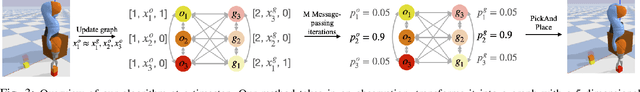

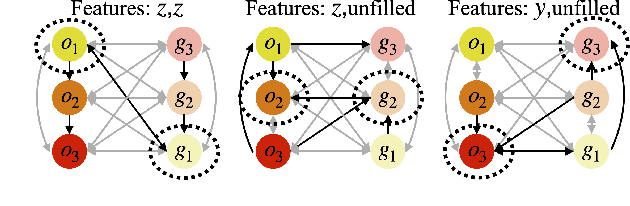

Many manipulation tasks can be naturally cast as a sequence of spatial relationships and constraints between objects. We aim to discover and scale these task-specific spatial relationships by representing manipulation tasks as operations over graphs. To do this, we pose manipulating a large, variable number of objects as a probabilistic classification problem over actions, objects and goals, learned using graph neural networks (GNNs). Our formulation first transforms the environment into a graph representation, then applies a trained GNN policy to predict which object to manipulate towards which goal state. Our GNN policies are trained using very few expert demonstrations on simple tasks, generalize over the number and configuration of objects in the environment and even to new, more complex tasks, and provide interpretable explanations for their decisions. We present experiments which show that a single learned GNN policy can solve a variety of block-stacking tasks both in simulation and on real hardware.
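The two-step formulation in the abstract (environment to graph, then a GNN policy that classifies over objects and goals) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration and not the authors' architecture: the node features (a 3D position plus an is-goal flag), the fully connected edges, the single mean-aggregation message-passing round, and the names `build_graph`, `message_pass`, `policy` and `random_params` are all hypothetical. The weights are random rather than learned from demonstrations, and the classification over actions is omitted.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def build_graph(blocks, goals):
    """Assumed encoding: one node per block and per goal, features [x, y, z, is_goal]."""
    nodes = [list(p) + [0.0] for p in blocks] + [list(p) + [1.0] for p in goals]
    n = len(nodes)
    edges = [(i, j) for i in range(n) for j in range(n) if i != j]  # fully connected
    return nodes, edges


def message_pass(nodes, edges, w_self, w_nbr):
    """One round: h_i = tanh(W_self x_i + W_nbr * mean of neighbour features)."""
    dim = len(nodes[0])
    agg = [[0.0] * dim for _ in nodes]
    deg = [0] * len(nodes)
    for src, dst in edges:
        for k in range(dim):
            agg[dst][k] += nodes[src][k]
        deg[dst] += 1
    hidden = []
    for x, a, d in zip(nodes, agg, deg):
        mean = [v / d for v in a] if d else a
        own, nbr = matvec(w_self, x), matvec(w_nbr, mean)
        hidden.append([math.tanh(o + m) for o, m in zip(own, nbr)])
    return hidden


def policy(blocks, goals, params):
    """Return a distribution over blocks (what to pick) and over goals (where to place)."""
    nodes, edges = build_graph(blocks, goals)
    hidden = message_pass(nodes, edges, params["w_self"], params["w_nbr"])
    scores = [sum(r * h for r, h in zip(params["readout"], hv)) for hv in hidden]
    n_blocks = len(blocks)
    return softmax(scores[:n_blocks]), softmax(scores[n_blocks:])


def random_params(in_dim=4, hidden_dim=8, seed=0):
    """Untrained placeholder weights; a real policy would learn these from demonstrations."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

    return {
        "w_self": mat(hidden_dim, in_dim),
        "w_nbr": mat(hidden_dim, in_dim),
        "readout": [rng.uniform(-1, 1) for _ in range(hidden_dim)],
    }


params = random_params()
blocks = [(0.1, 0.2, 0.0), (0.5, 0.1, 0.0), (0.3, 0.4, 0.0)]
goals = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.05)]
p_obj, p_goal = policy(blocks, goals, params)
pick = max(range(len(p_obj)), key=p_obj.__getitem__)
place = max(range(len(p_goal)), key=p_goal.__getitem__)
print(f"pick block {pick}, place at goal {place}")
```

Because the parameters are shared across nodes, the same `params` can be applied to a scene with any number of blocks and goals, which is the property that makes generalization over object count possible in a graph formulation. The per-node probabilities also give a simple handle on which object and goal the policy favours at each step.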
