Effective Multi-User Delay-Constrained Scheduling with Deep Recurrent Reinforcement Learning

Paper and Code

Aug 30, 2022

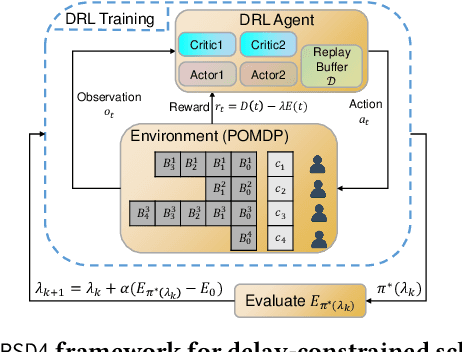

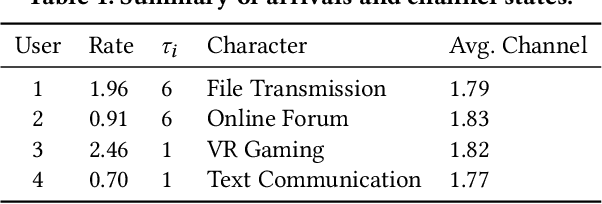

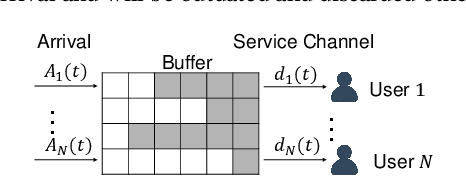

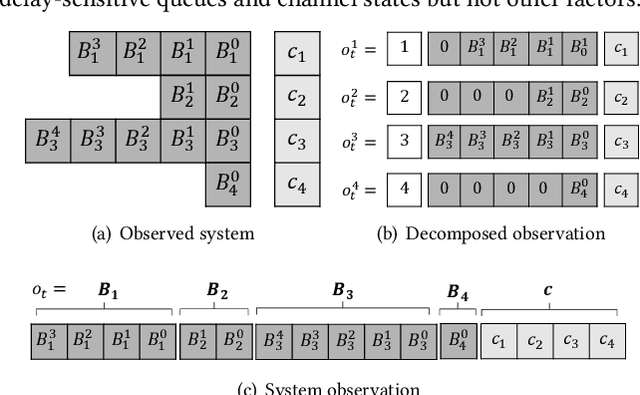

Multi-user delay-constrained scheduling is important in many real-world applications, including wireless communication, live streaming, and cloud computing. Yet it poses a critical challenge: the scheduler must make real-time decisions that satisfy the delay and resource constraints simultaneously, without prior information about the system dynamics, which can be time-varying and hard to estimate. Moreover, many practical scenarios suffer from partial observability, e.g., due to sensing noise or hidden correlation. To tackle these challenges, we propose a deep reinforcement learning (DRL) algorithm, named Recurrent Softmax Delayed Deep Double Deterministic Policy Gradient ($\mathtt{RSD4}$), a data-driven method based on a Partially Observed Markov Decision Process (POMDP) formulation. $\mathtt{RSD4}$ guarantees the resource constraint through a Lagrangian dual and the delay constraint through delay-sensitive queues. It also tackles partial observability efficiently with a memory mechanism enabled by a recurrent neural network (RNN), and it introduces user-level decomposition and node-level merging to ensure scalability. Extensive experiments on simulated and real-world datasets demonstrate that $\mathtt{RSD4}$ is robust to system dynamics and partially observable environments, and that it achieves superior performance over existing DRL and non-DRL-based methods.
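The abstract does not spell out how the Lagrangian dual and the delay-sensitive queues work, so the following is only a minimal sketch of the two generic ideas, not the $\mathtt{RSD4}$ implementation. It assumes a slotted system in which every job of a user shares the same maximum delay, and it uses a standard projected subgradient step for the multiplier. The names `DelaySensitiveQueue`, `dual_update`, `max_delay`, and `step_size` are hypothetical and do not come from the paper.

```python
from collections import deque


class DelaySensitiveQueue:
    """Hypothetical per-user buffer tracking each job's remaining slots to deadline."""

    def __init__(self, max_delay):
        self.max_delay = max_delay
        self.jobs = deque()  # remaining slots per job, oldest job first

    def arrive(self, count=1):
        # New jobs start with the full delay budget.
        self.jobs.extend([self.max_delay] * count)

    def serve(self, count):
        # Serve up to `count` of the oldest jobs; return how many were served.
        served = min(count, len(self.jobs))
        for _ in range(served):
            self.jobs.popleft()
        return served

    def tick(self):
        # Advance one slot, then drop and count jobs whose deadline has passed.
        self.jobs = deque(remaining - 1 for remaining in self.jobs)
        dropped = 0
        while self.jobs and self.jobs[0] <= 0:
            self.jobs.popleft()
            dropped += 1
        return dropped


def dual_update(lam, resource_used, budget, step_size):
    # Projected subgradient ascent (an assumed, textbook update rule):
    # the multiplier grows when usage exceeds the budget and is clipped at zero.
    return max(0.0, lam + step_size * (resource_used - budget))
```

In a sketch like this, a learning agent would typically be trained on a penalized reward such as `served - lam * resource_used`, with `lam` refreshed by `dual_update` between training rounds; whether $\mathtt{RSD4}$ uses exactly this form is not stated in the abstract.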
