EEGminer: Discovering Interpretable Features of Brain Activity with Learnable Filters

Oct 19, 2021

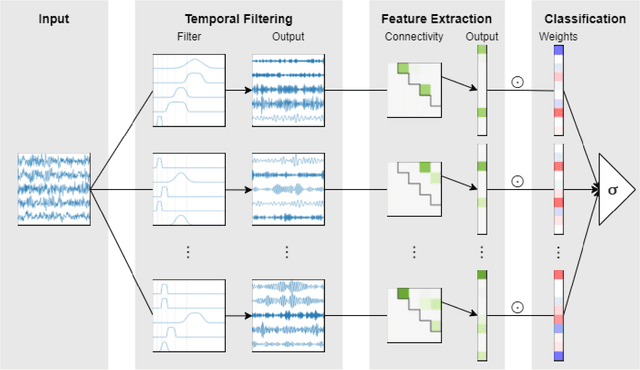

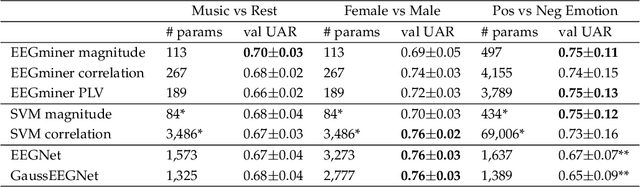

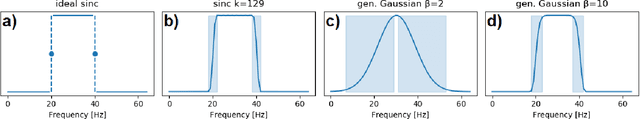

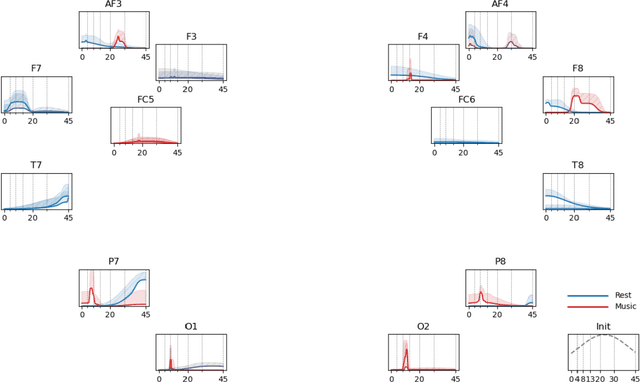

Patterns of brain activity are associated with different brain processes and can be used to identify brain states and make behavioral predictions. However, the relevant features are neither readily apparent nor easily accessible. To mine informative latent representations from multichannel EEG recordings, we propose a novel differentiable EEG decoding pipeline consisting of learnable filters and a pre-determined feature extraction module. Specifically, we introduce filters parameterized by generalized Gaussian functions that offer a smooth derivative for stable end-to-end model training and allow for learning interpretable features. For the feature module, we use signal magnitude and functional connectivity. We demonstrate the utility of our model for emotion recognition from EEG signals on the SEED dataset, as well as on a new EEG dataset of unprecedented size (i.e., 763 subjects), where we identify consistent trends of music perception and related individual differences. The discovered features align with previous neuroscience studies and offer new insights, such as marked differences in the functional connectivity profile between left and right temporal areas during music listening. This finding agrees with the respective specialisation of the temporal lobes regarding music perception proposed in the literature.
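
To make the pipeline described in the abstract more concrete, the following is a minimal sketch of a frequency-domain filter with a generalized Gaussian magnitude response, followed by the two feature types the abstract names. It is an illustration under stated assumptions, not the authors' implementation: the parameterization `exp(-(|f - center| / width) ** shape)`, the function names, and the use of Pearson correlation as a stand-in for functional connectivity are all assumptions made here. The sketch uses plain NumPy, so the filter parameters are fixed rather than learned; end-to-end training would require an automatic differentiation framework.

```python
import numpy as np


def generalized_gaussian_response(freqs, center, width, shape):
    """Assumed magnitude response: exp(-(|f - center| / width) ** shape).

    center: center frequency in Hz, width: bandwidth scale in Hz,
    shape: exponent (2 gives a Gaussian, larger values give a flatter passband).
    """
    return np.exp(-(np.abs(freqs - center) / width) ** shape)


def apply_filter(signal, fs, center, width, shape):
    """Filter a (channels, samples) array by scaling its spectrum."""
    n = signal.shape[-1]
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    response = generalized_gaussian_response(freqs, center, width, shape)
    spectrum = np.fft.rfft(signal, axis=-1)
    return np.fft.irfft(spectrum * response, n=n, axis=-1)


def magnitude_feature(filtered):
    """Mean absolute amplitude per channel."""
    return np.mean(np.abs(filtered), axis=-1)


def connectivity_feature(filtered):
    """Channel-by-channel Pearson correlation (a simple connectivity proxy)."""
    return np.corrcoef(filtered)


# Synthetic two-channel example: a shared 10 Hz component plus 40 Hz interference.
fs = 128
t = np.arange(256) / fs
alpha_wave = np.sin(2 * np.pi * 10 * t)
interference = np.sin(2 * np.pi * 40 * t)
eeg = np.stack([alpha_wave + interference, 0.5 * alpha_wave + interference])

# Band centered on 10 Hz; the 40 Hz component is strongly attenuated.
filtered = apply_filter(eeg, fs, center=10.0, width=4.0, shape=2.0)
magnitude = magnitude_feature(filtered)
connectivity = connectivity_feature(filtered)
```

Because the response is a smooth function of `center`, `width`, and `shape`, its gradient with respect to each parameter is well defined everywhere, which is the property the abstract credits for stable training. The learned parameters can also be read directly as a frequency band, which is what makes the resulting features interpretable.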
