Edge-aware Plug-and-play Scheme for Semantic Segmentation


Mar 18, 2023

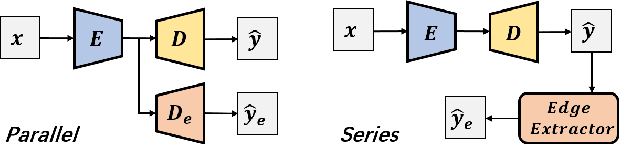

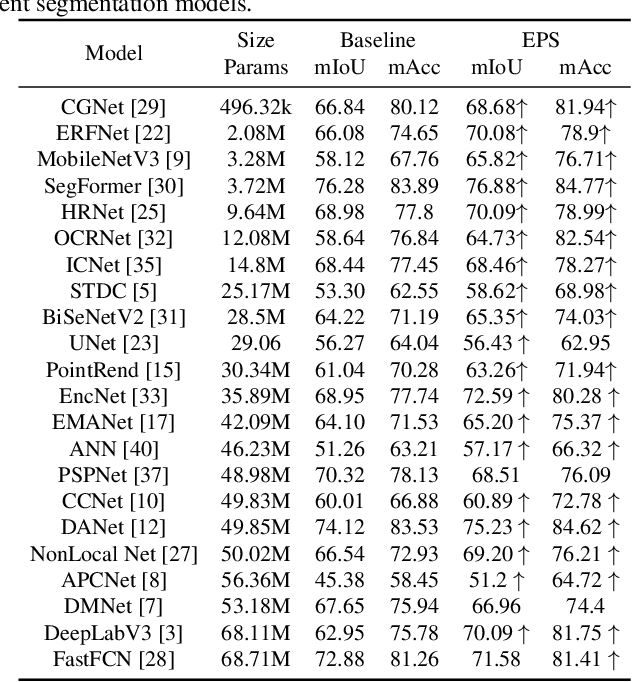

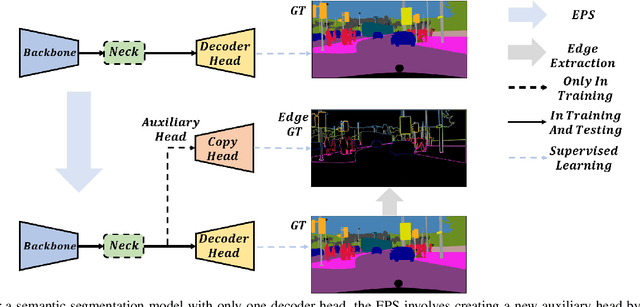

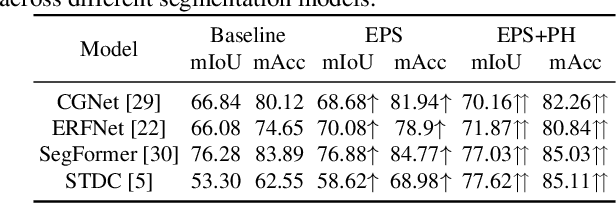

Semantic segmentation is a classic and fundamental computer vision problem: assigning each pixel its corresponding class. Some recent methods introduce edge-based information to improve segmentation performance. However, these methods are tied to specific network architectures and cannot be transferred to other models or tasks. Therefore, we propose an abstract and universal edge supervision method called Edge-aware Plug-and-play Scheme (EPS), which can be easily and quickly applied to any semantic segmentation model. Its core idea is to guide semantic segmentation with edge supervision that preserves edge width (thickness). The EPS first extracts the Edge Ground Truth (Edge GT) with a predefined edge thickness from the training data; then, for any network architecture, it directly copies the decoder head to serve an auxiliary task supervised by the Edge GT. To ensure that the edge thickness is preserved consistently, we design a new boundary-based loss, called Polar Hausdorff (PH) Loss, for the auxiliary supervision. We verify the effectiveness of our EPS on the Cityscapes dataset using 22 models. The experimental results indicate that the proposed method can be seamlessly integrated into any state-of-the-art (SOTA) model with zero modification, resulting in a promising enhancement of segmentation performance.
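To make the Edge GT step concrete, here is a minimal NumPy sketch of one way to derive a binary edge map of a chosen thickness from a per-pixel label map. The abstract does not specify the extraction procedure, so the function name `extract_edge_gt`, the `thickness` parameter, and the neighborhood rule (a pixel is an edge pixel if any pixel within `thickness` steps, horizontally, vertically, or diagonally, has a different class) are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np


def extract_edge_gt(labels: np.ndarray, thickness: int = 1) -> np.ndarray:
    """Return a binary edge map (uint8) from a 2-D integer label map.

    Hypothetical rule (an assumption, not taken from the paper): a pixel is
    marked as edge if some pixel within `thickness` steps of it (Chebyshev
    distance) carries a different class label.
    """
    if labels.ndim != 2:
        raise ValueError("labels must be a 2-D array of class indices")
    if thickness < 1:
        raise ValueError("thickness must be at least 1")

    h, w = labels.shape
    # Replicate border values so the image frame itself is not treated
    # as a class boundary.
    padded = np.pad(labels, thickness, mode="edge")
    edge = np.zeros((h, w), dtype=bool)

    # Compare every pixel with each neighbor inside the square window.
    for dy in range(-thickness, thickness + 1):
        for dx in range(-thickness, thickness + 1):
            y0 = thickness + dy
            x0 = thickness + dx
            shifted = padded[y0:y0 + h, x0:x0 + w]
            edge |= shifted != labels

    return edge.astype(np.uint8)


# Toy label map: class 0 on the left half, class 1 on the right half.
toy = np.zeros((6, 6), dtype=np.int64)
toy[:, 3:] = 1
edge_map = extract_edge_gt(toy, thickness=1)
```

Under this rule the edge band straddles the class boundary, so it is `2 * thickness` pixels wide in total. An auxiliary head copied from the decoder would then be trained against a map like `edge_map`; the PH Loss itself is not sketched here because the abstract does not define it.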
