EAGAN: Efficient Two-stage Evolutionary Architecture Search for GANs


Nov 30, 2021

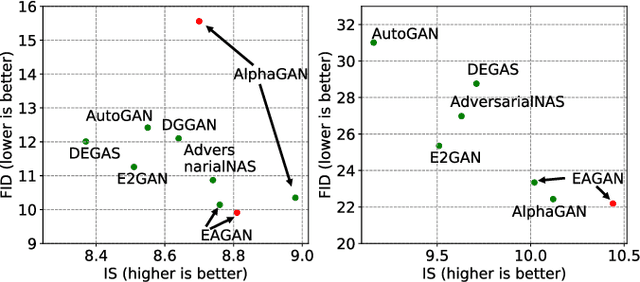

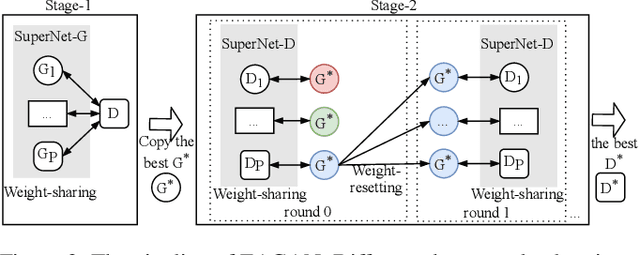

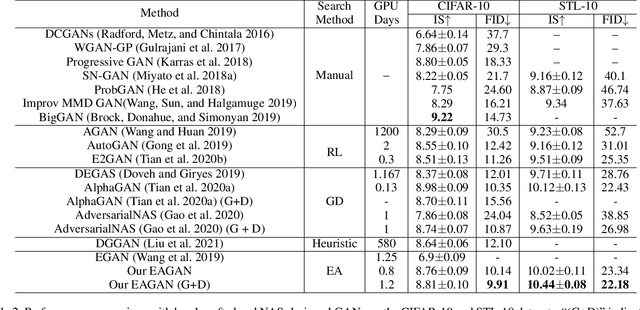

Generative Adversarial Networks (GANs) have proven hugely successful in image generation tasks, but GAN training is unstable. Many works have improved training stability by manually modifying the GAN architecture, which requires human expertise and extensive trial and error. Neural architecture search (NAS), which aims to automate model design, has therefore been applied to search for GANs on the task of unconditional image generation. Early NAS-GAN works search only the generator to reduce the difficulty of the search. Some recent works have attempted to search both the generator (G) and the discriminator (D) to improve GAN performance, but they still suffer from the instability of GAN training during the search. To alleviate this instability, we propose an efficient two-stage evolutionary algorithm (EA) based NAS framework for discovering GANs, dubbed **EAGAN**. Specifically, we decouple the search of G and D into two stages and propose a weight-resetting strategy to improve the stability of GAN training. In addition, we perform evolution operations to produce Pareto-front architectures based on multiple objectives, resulting in a superior combination of G and D. By leveraging a weight-sharing strategy and low-fidelity evaluation, EAGAN significantly shortens the search time. EAGAN achieves highly competitive results on CIFAR-10 (IS=8.81$\pm$0.10, FID=9.91) and surpasses previous NAS-searched GANs on STL-10 (IS=10.44$\pm$0.087, FID=22.18).
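To make the Pareto-front selection mentioned in the abstract concrete, here is a minimal sketch of non-dominated filtering over a population of candidate architectures. It is not the authors' implementation. The abstract does not name the objectives, so using Inception Score (higher is better) and FID (lower is better) is an assumption, and the candidate names and scores are invented for illustration.

```python
from typing import List, Tuple

# Hypothetical candidate record: (name, inception_score, fid).
# Assumption: IS is maximized and FID is minimized.
Candidate = Tuple[str, float, float]


def dominates(a: Candidate, b: Candidate) -> bool:
    """Return True if a is no worse than b on both objectives and strictly better on one."""
    no_worse = a[1] >= b[1] and a[2] <= b[2]
    strictly_better = a[1] > b[1] or a[2] < b[2]
    return no_worse and strictly_better


def pareto_front(population: List[Candidate]) -> List[Candidate]:
    """Keep every candidate that no other candidate dominates."""
    return [c for c in population if not any(dominates(o, c) for o in population)]


# Made-up scores, used only to exercise the function.
population = [
    ("arch_a", 8.2, 14.0),
    ("arch_b", 8.6, 12.5),
    ("arch_c", 8.4, 11.9),
    ("arch_d", 8.1, 15.3),
]

front = pareto_front(population)
print([name for name, _, _ in front])  # ['arch_b', 'arch_c']
```

In this toy population, `arch_b` has the best IS and `arch_c` the best FID, so neither dominates the other and both stay on the front. An evolutionary search would typically pick parents for the next generation from such a front.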
